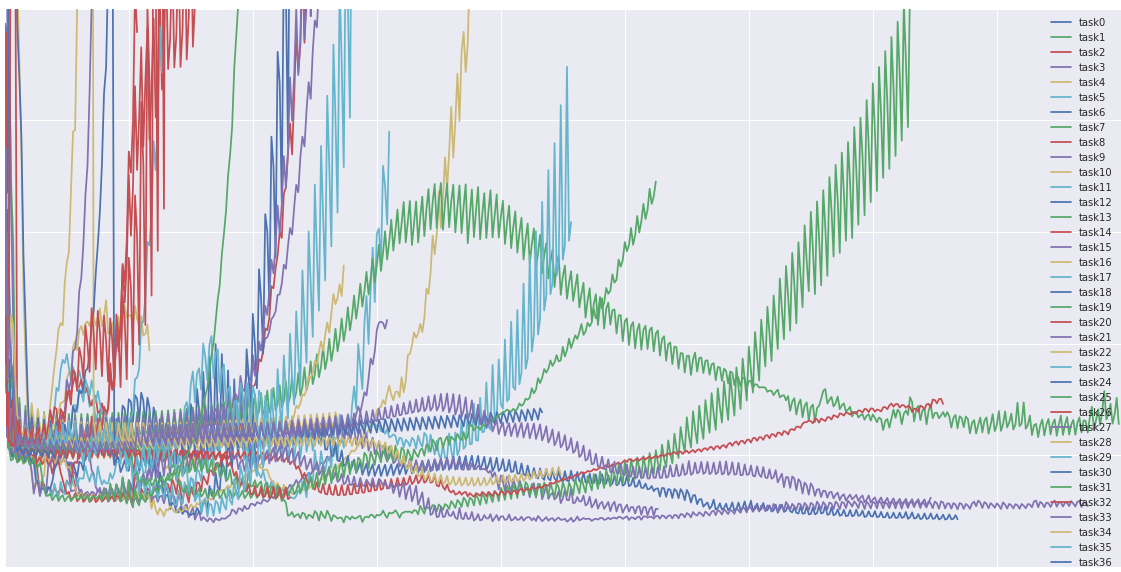

relation between batch size and learning rate

Don't Decay the Learning Rate, Increase the Batch Size

Earlier, Goyal et al. (2017) exploited a linear scaling rule between batch size and learning rate to train ResNet-50 on ImageNet in one hour with batches of ...

Control Batch Size and Learning Rate to Generalize Well

... has a positive correlation with the ratio of batch size to learning rate, which suggests a negative correlation between the generalization ability of neural ...

Three Factors Influencing Minima in SGD

13 Sep 2018 · Characterizing the relation between learning rate, batch size, and the properties of the final minima ...

AdaBatch: Adaptive Batch Sizes for Training Deep Neural Networks

14 Feb 2018 · We will illustrate that the relationship between batch size and learning rate extends even further to learning rate decay. The following ...

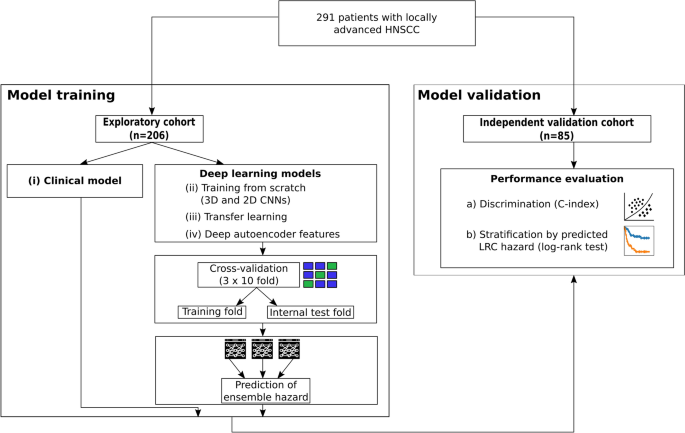

An Empirical Model of Large-Batch Training

14 Dec 2018 · ... period or an unusual learning rate schedule), so the fact that it is ... Changing the batch size moves us along a tradeoff curve between the ...

Edinburgh Research Explorer - Width of Minima Reached by

4 Oct 2018 · ... Influenced by Learning Rate to Batch Size Ratio ... We derive a relation between LR/BS and the width of the minimum found by SGD.

Measuring the Effects of Data Parallelism on Neural Network Training

19 Jul 2019 · What is the relationship between batch size and number of training ... and data sets while independently tuning the learning rate, momentum ...

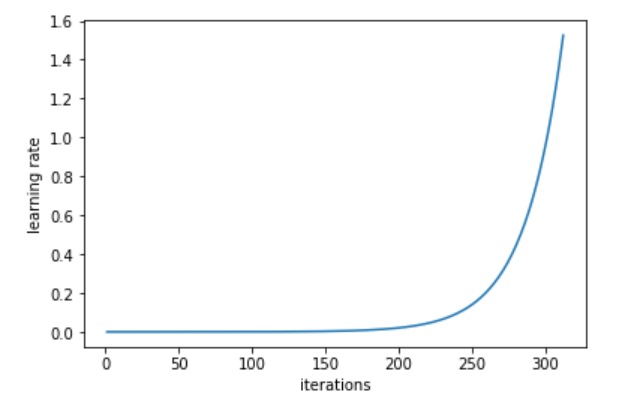

Dynamic Batch Adaptation

1 Aug 2022 · Motivated by the known inverse relation between batch size and learning rate on update step magnitudes, we introduce a novel training ...

The Effect of Network Width on Stochastic Gradient Descent and

9 May 2019 · ... is a function of the batch size, learning rate ...

Coupling Adaptive Batch Sizes with Learning Rates

28 Jun 2017 · ... work, our algorithm couples the batch size to the learning rate ...

Relation Between Learning Rate and Batch Size - Baeldung

16 Mar 2023 · The learning rate indicates the step size that gradient descent takes towards local optima. · Batch size defines the number of samples we use in ...

Control Batch Size and Learning Rate to Generalize Well

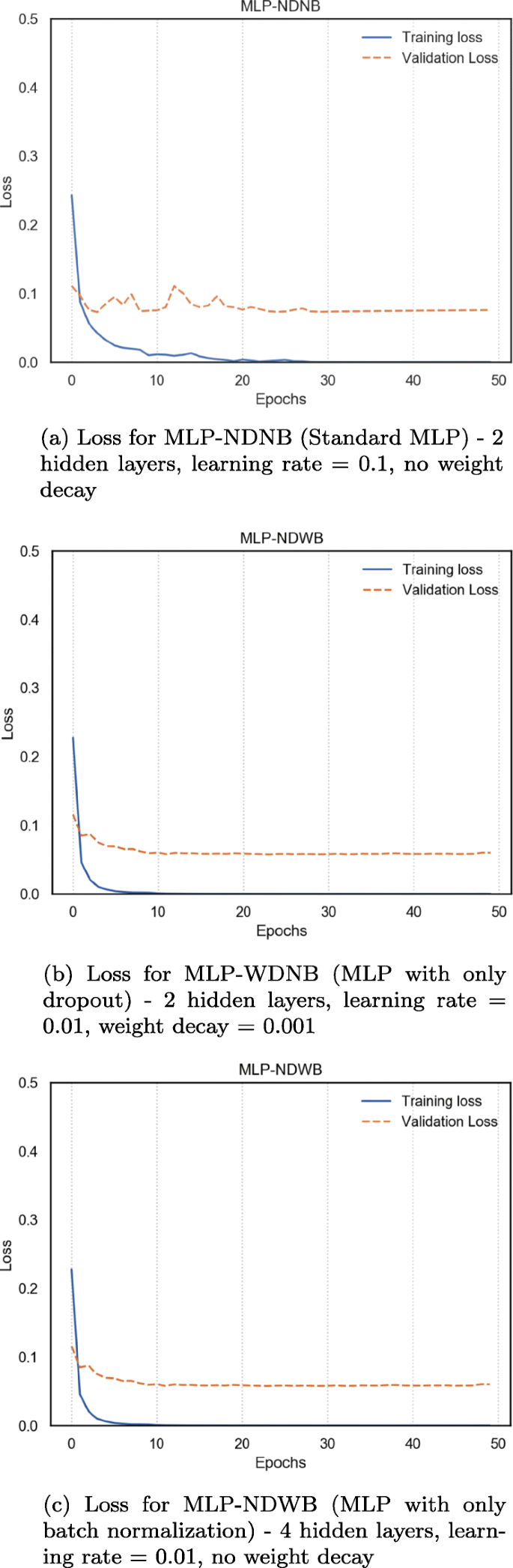

This paper reports both theoretical and empirical evidence of a training strategy that we should control the ratio of batch size to learning rate not too large ...

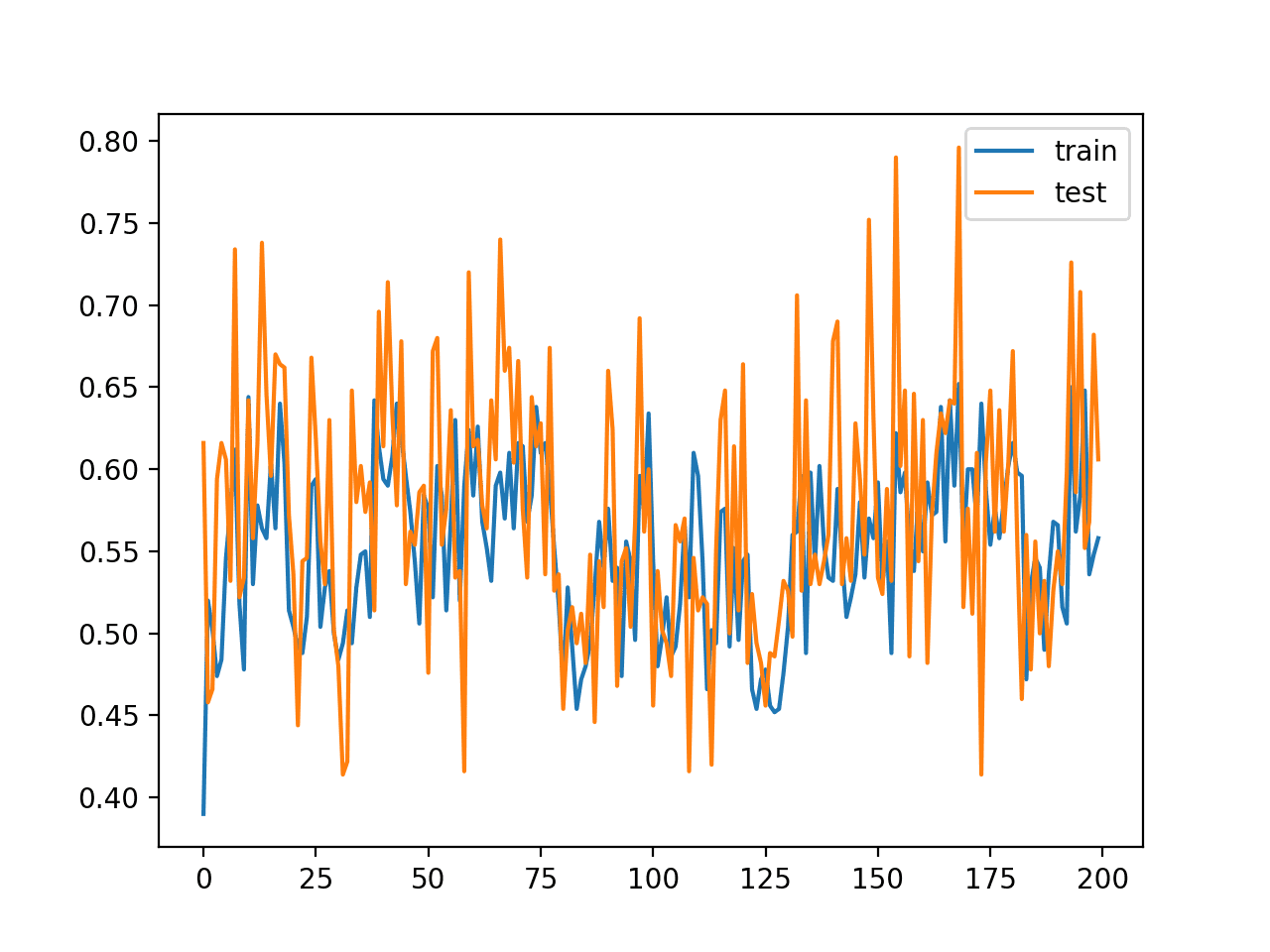

Don't Decay the Learning Rate, Increase the Batch Size

This procedure is successful for stochastic gradient descent (SGD), SGD with momentum, Nesterov momentum, and Adam. It reaches equivalent test accuracies ...
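
As a rough illustration of the idea in the title, the sketch below compares a step-decay learning rate schedule with one that holds the learning rate fixed and grows the batch size by the same factor at the same epochs. It assumes the quantity to preserve is the ratio of learning rate to batch size. The function names, milestones, and numeric defaults are hypothetical and are not taken from the paper.

```python
def decay_lr_schedule(epoch, base_lr=0.1, base_batch_size=128,
                      factor=5, milestones=(60, 120, 160)):
    """Conventional schedule: divide the learning rate by `factor` at each milestone."""
    steps = sum(epoch >= m for m in milestones)
    return base_lr / factor ** steps, base_batch_size


def increase_batch_schedule(epoch, base_lr=0.1, base_batch_size=128,
                            factor=5, milestones=(60, 120, 160)):
    """Alternative: keep the learning rate and multiply the batch size by `factor`."""
    steps = sum(epoch >= m for m in milestones)
    return base_lr, base_batch_size * factor ** steps


if __name__ == "__main__":
    # All numeric values here are illustrative assumptions.
    for epoch in (0, 60, 120, 160):
        lr_a, bs_a = decay_lr_schedule(epoch)
        lr_b, bs_b = increase_batch_schedule(epoch)
        # Both schedules yield the same learning-rate-to-batch-size ratio.
        print(epoch, (lr_a, bs_a), (lr_b, bs_b), lr_a / bs_a, lr_b / bs_b)
```

Since batch size is bounded by device memory and by the size of the training set, a practical schedule would likely need to fall back to decaying the learning rate once that bound is reached.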

Interactive Effect of Learning Rate and Batch Size to Implement

15 Feb 2023 · The learning rate and batch size had a high correlation: when the learning rates were high, bigger batch sizes performed better than those with ...

[2006.09092] Learning Rates as a Function of Batch Size - arXiv

16 Jun 2020 · Abstract: We study the effect of mini-batching on the loss landscape of deep neural networks using spiked, field-dependent random matrix ...

arXiv:1711.04623v3 [cs.LG] 13 Sep 2018

13 Sep 2018 · Characterizing the relation between learning rate, batch size, and the properties of the final minima, such as width or generalization, remains ...

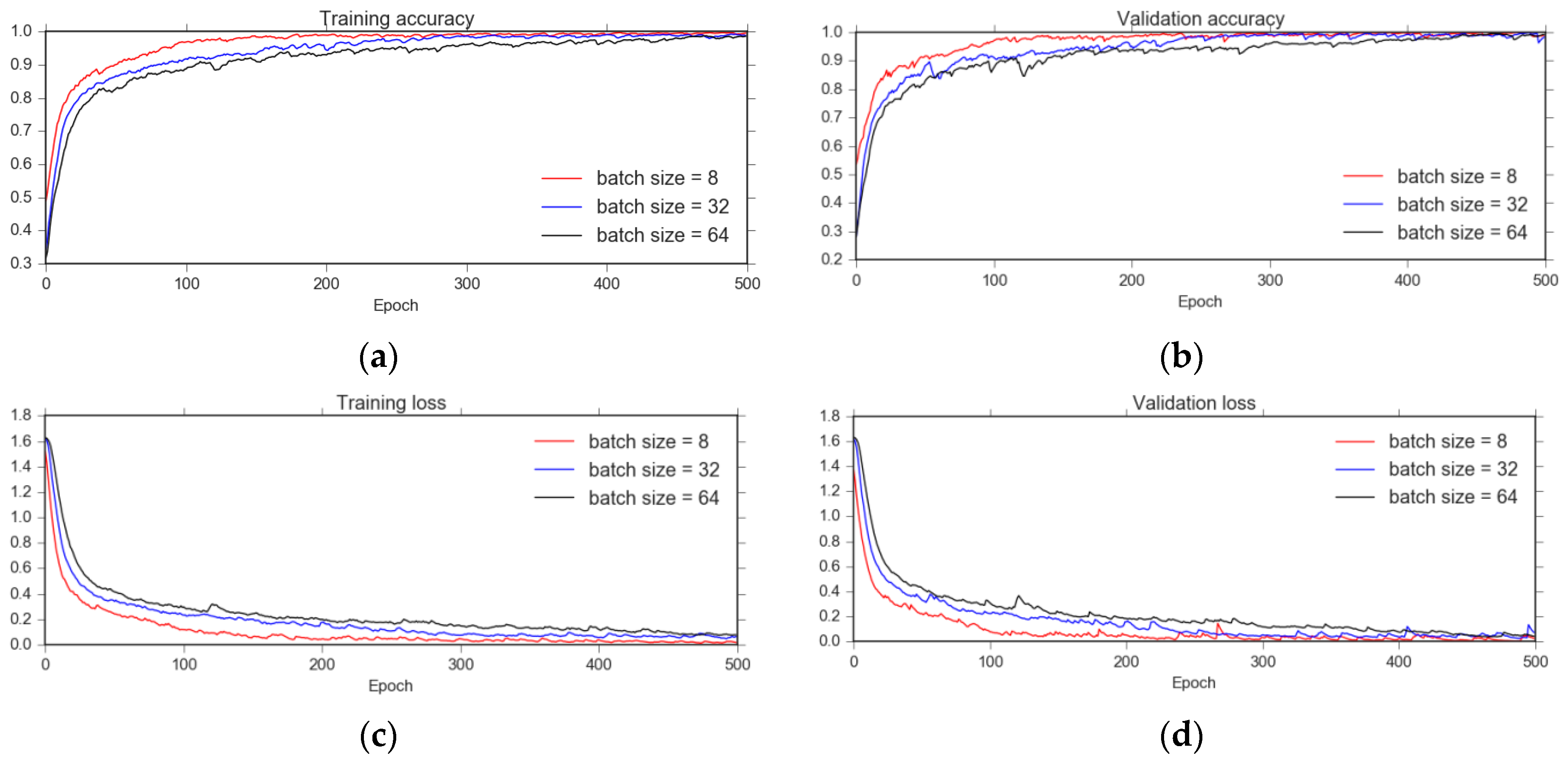

The effect of batch size on the generalizability of the convolutional

There is a high correlation between the learning rate and the batch size: when the learning rates are high, the large batch size performs better than with small ...

Coupling Adaptive Batch Sizes with Learning Rates

Small batch sizes require a small learning rate, while larger batch sizes enable larger steps. We will exploit this relationship later on by explicitly coupling ...

Don't Decay the Learning Rate, Increase the Batch Size - Request PDF

Previous work [20] has demonstrated empirically a relationship between the optimal hyper-parameters of learning rate (LR), weight decay (WD), batch size ...

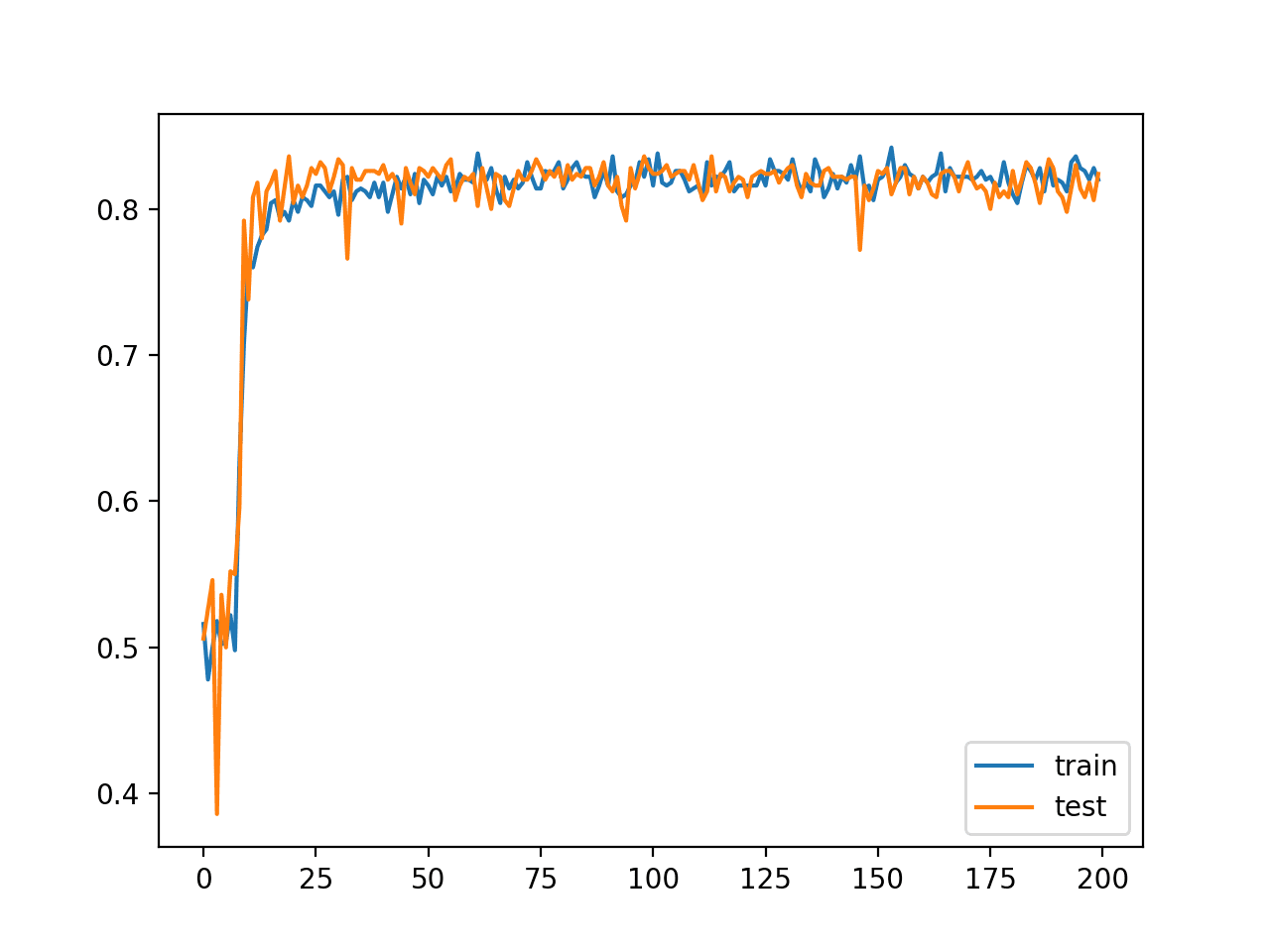

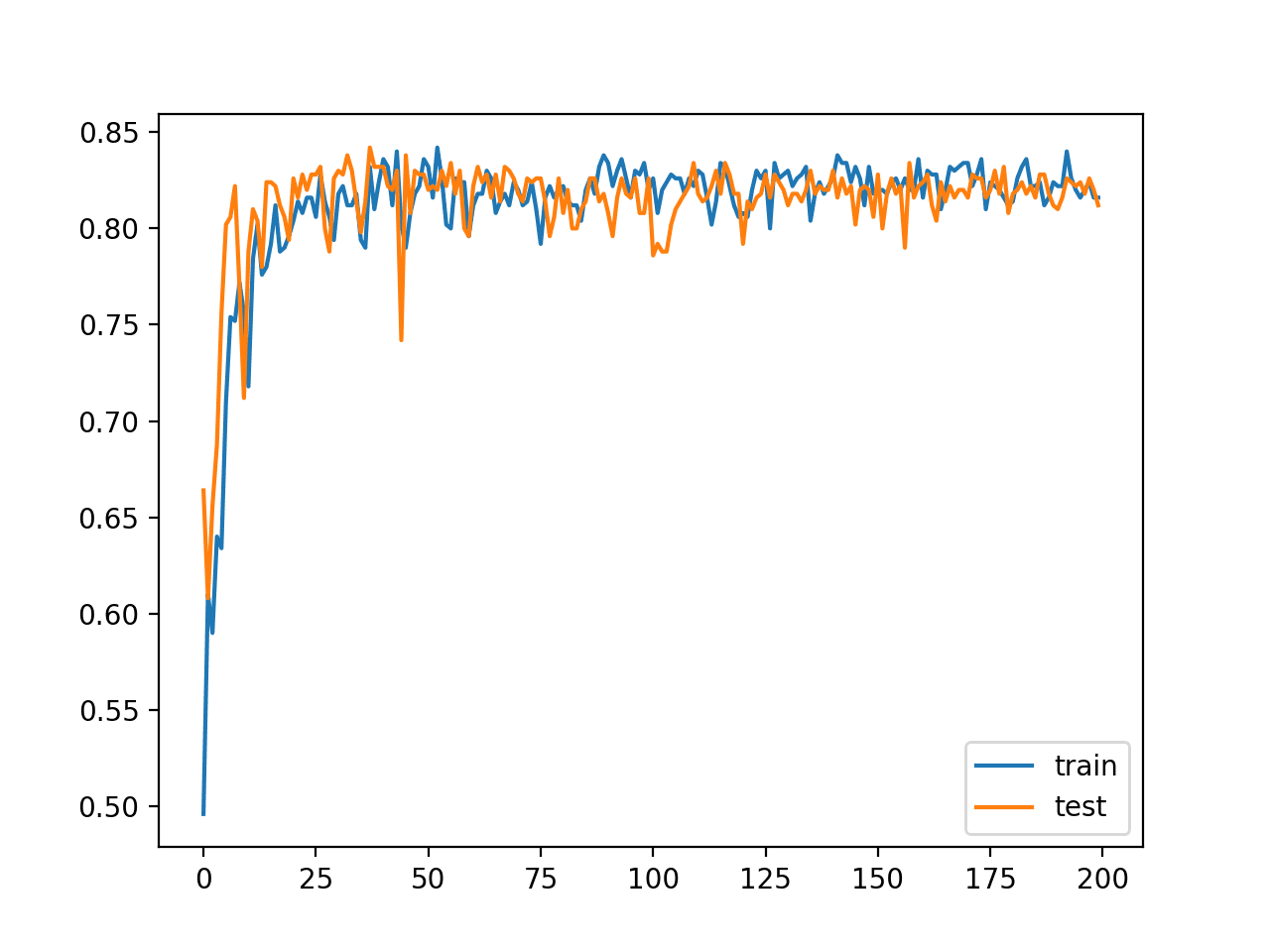

What is the relationship between learning rate and batch size?

When learning gradient descent, we learn that learning rate and batch size matter. Specifically, increasing the learning rate speeds up the learning of your model, yet risks overshooting its minimum loss. Reducing batch size means your model uses fewer samples to calculate the loss in each iteration of learning.

How does batch size affect learning?

The batch size affects some indicators such as overall training time, training time per epoch, quality of the model, and similar. Usually, we choose the batch size as a power of two, in the range between 16 and 512. But generally, the size of 32 is a rule of thumb and a good initial choice. (16 Mar 2023)

What is the learning rate for 64 batch size?

Using a batch size of 64 (orange) achieves a test accuracy of 98%, while using a batch size of 1024 only achieves about 96%. ... Let's try this out, with batch sizes 32, 64, 128, and 256. We will use a base learning rate of 0.01 for batch size 32, and scale accordingly for the other batch sizes. Indeed, we find that adjusting the learning rate does eliminate most of the performance gap between small and large batch sizes.
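
The "scale accordingly" step in the last answer can be sketched as below, assuming it refers to the linear scaling rule (learning rate proportional to batch size) that another result on this page attributes to Goyal et al. (2017). The function name `scaled_learning_rate` and its parameter names are hypothetical; only the base values (0.01 at batch size 32) and the list of batch sizes come from the text.

```python
def scaled_learning_rate(batch_size, base_lr=0.01, base_batch_size=32):
    """Scale the learning rate in proportion to the batch size.

    Assumption: "scale accordingly" means the linear scaling rule,
    lr = base_lr * batch_size / base_batch_size.
    """
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return base_lr * batch_size / base_batch_size


if __name__ == "__main__":
    # Batch sizes and base values (0.01 at batch size 32) are those named in the text.
    for batch_size in (32, 64, 128, 256):
        lr = scaled_learning_rate(batch_size)
        print(f"batch size {batch_size:>3}: learning rate {lr:.4f}")
```

Other heuristics exist, such as scaling with the square root of the batch size, so the linear form is one reading of the passage rather than the only one.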

Control Batch Size and Learning Rate to Generalize Well - NeurIPS

Each point represents a model. Totally 1,600 points are plotted. ... has a positive correlation with the ratio of batch size to learning rate, which suggests a negative correlation between the generalization ability of neural networks and the ratio. This result builds the theoretical foundation of the training strategy ...

Which Algorithmic Choices Matter at Which Batch Sizes? - NIPS

... studied how various heuristics for adjusting the learning rate as a function of batch size affect the relationship between batch size and training time. Shallue et al. ...

Towards Explaining the Regularization Effect of Initial Large

Abstract: Stochastic gradient descent with a large initial learning rate is widely used for ... the connection between large batch size and small learning rate ...

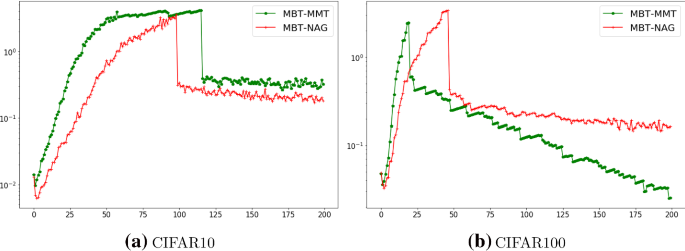

On the Generalization Benefit of Noise in Stochastic Gradient Descent

... learning rate and batch size (Krizhevsky, 2014; Goyal et al., 2017; Smith et al., ... that SGD with Momentum significantly outperforms vanilla SGD (Sutskever et ... The primary difference between convergence bounds and the SDE perspective ...

On the Generalization Benefit of Noise in Stochastic Gradient Descent

Meanwhile, SGD performs poorly compared to SGD with Momentum when the learning rate is large. When the batch size is small, the optimal learning rates for ...

Train Deep Neural Networks with Small Batch Sizes - IJCAI

Deep learning architectures are usually proposed with millions of ... as vanilla SGD even with small batch size ... Our ... difference between noisy and noiseless gradient ... However, it attains a better convergence rate when batch size is con- ...

Why Does Large Batch Training Result in Poor - HUSCAP

... components of machine learning because a better solution generally leads to a more accurate ... problems ... In section 4, we explain why the training with a large batch size in ... This relationship is not so simple in neural networks because the loss ... learning rate during training can be useful for accelerating the training ... In ...

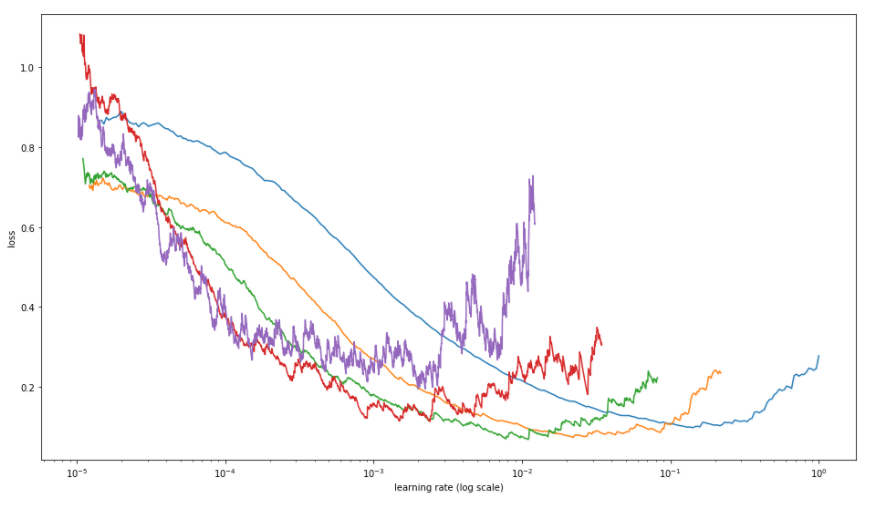

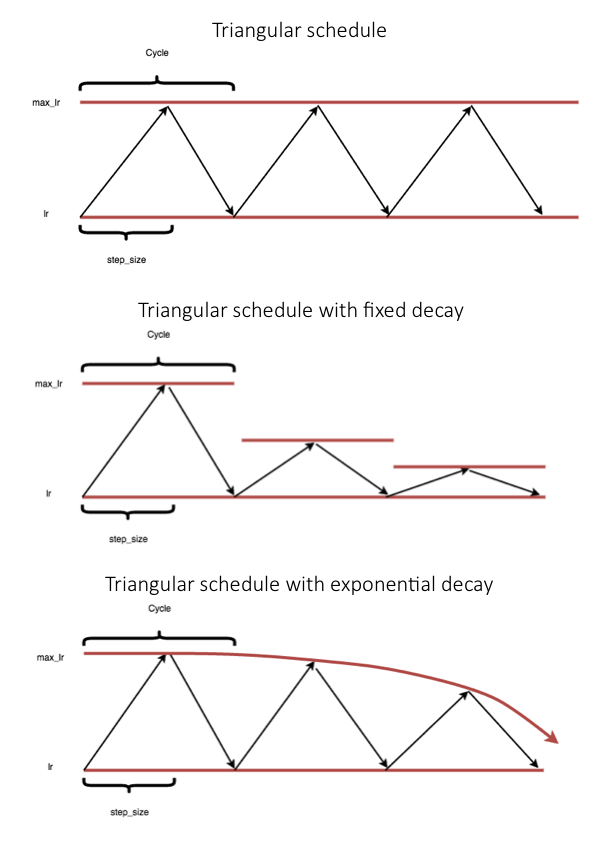

![A disciplined approach to neural network hyper-parameters (PDF)](https://media.springernature.com/lw685/springer-static/image/art%3A10.1038%2Fs41467-020-18037-z/MediaObjects/41467_2020_18037_Fig1_HTML.png)

![A disciplined approach to neural network hyper-parameters (PDF)](https://www.googleapis.com/download/storage/v1/b/kaggle-user-content/o/inbox%2F991320%2F761a2d7ed2c99df70822ab8dff2e0bdb%2FScreen%20Shot%202020-02-08%20at%2010.24.56%20AM.png?generation=1581175542433165&alt=media)