rmsprop

Neural Networks for Machine Learning Lecture 6a Overview of mini

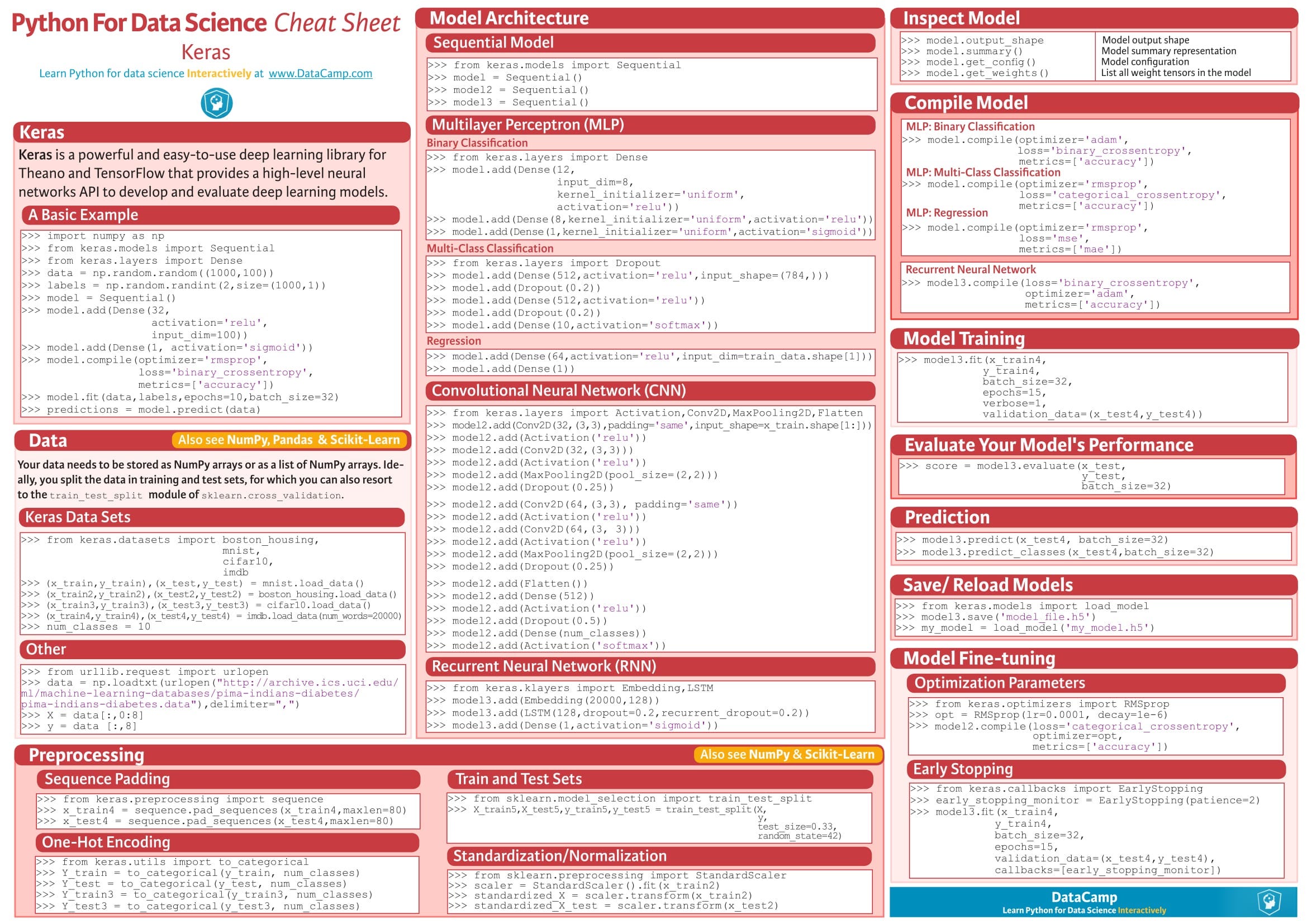

rmsprop: Divide the learning rate for a weight by a running average of the magnitudes of recent gradients for that weight. – This is the mini-batch version
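
The rule quoted above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the function name `rmsprop_step`, the decay of 0.9, the learning rate, and the small `eps` stabiliser are assumptions that do not appear in the snippet.

```python
import math

def rmsprop_step(weights, grads, mean_sq, lr=0.001, decay=0.9, eps=1e-8):
    """One RMSprop update over parallel lists of floats.

    decay, lr and eps are assumed defaults, not values from the snippet.
    Returns (new_weights, new_mean_sq).
    """
    new_weights, new_mean_sq = [], []
    for w, g, v in zip(weights, grads, mean_sq):
        # Running average of the squared gradient for this weight.
        v = decay * v + (1.0 - decay) * g * g
        # Divide the step by the root of that average (eps avoids division by zero).
        w = w - lr * g / (math.sqrt(v) + eps)
        new_weights.append(w)
        new_mean_sq.append(v)
    return new_weights, new_mean_sq

# Toy usage: minimise f(w) = w0^2 + 100 * w1^2, whose two axes are scaled very differently.
w = [1.0, 1.0]
v = [0.0, 0.0]
for _ in range(500):
    g = [2.0 * w[0], 200.0 * w[1]]
    w, v = rmsprop_step(w, g, v, lr=0.01)
```

Because each gradient is divided by its own running magnitude, both coordinates move at a similar pace in this toy problem even though their raw gradients differ by a factor of 100.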

|

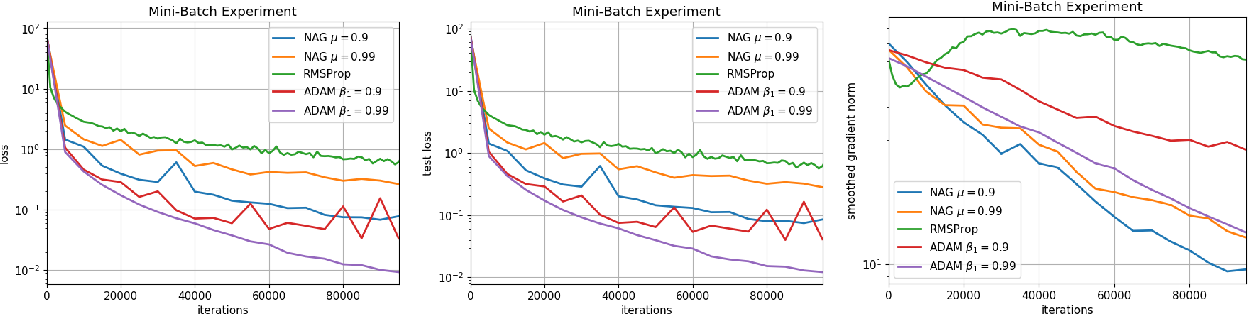

RMSPROP CONVERGES WITH PROPER HYPER-PARAMETER

Despite the existence of divergence examples, RMSprop remains one of the most popular algorithms in machine learning. Towards closing the gap between theory.

CONVERGENCE GUARANTEES FOR RMSPROP AND ADAM IN

In this work we make progress towards a deeper understanding of ADAM and RMSProp in two ways. First

PowerPoint Presentation

Optimization algorithms. • Gradient descent: 1. batch. 2. stochastic. 3. with momentum. 4. Nesterov accelerated. 5. Adagrad. 6. RMSprop. 7.

A Sufficient Condition for Convergences of Adam and RMSProp

Adam and RMSProp are two of the most influential adaptive stochastic algorithms for training deep neural networks, which have been pointed out to be

A Sufficient Condition for Convergences of Adam and RMSProp

25 June 2019 ... guarantee the global convergence of generic Adam/RMSProp for solving large-scale non-convex stochastic optimization.

On Empirical Comparisons of Optimizers for Deep Learning

Ba 2015) and RMSPROP (Tieleman & Hinton

Vprop: Variational Inference using RMSprop

(b) RMSprop minimizes f(θ) := −log p(y |

Variants of RMSProp and Adagrad with Logarithmic Regret Bounds

In this paper we have analyzed RMSProp, originally proposed for the training of deep neural networks

Optimization with ADAM and RMSprop in Convolution neural

It is observed that the RMSprop optimizer, with 89% accuracy, outperforms the ADAM optimizer. Key words: Handwriting recognition, CNN

RMSProp and equilibrated adaptive learning rates for non

RMSProp is an adaptive learning rate method that has found much success in practice (Tieleman & Hinton, 2012; Korjus et al.; Carlson et al., 2015). Tieleman & Hinton (2012) propose to normalize the gradients by an exponential moving average of the magnitude of the gradient for each parameter:

$$v_t = \alpha v_{t-1} + (1 - \alpha)(\nabla f)^2 \tag{2}$$

where 0

What does Rprop stand for?

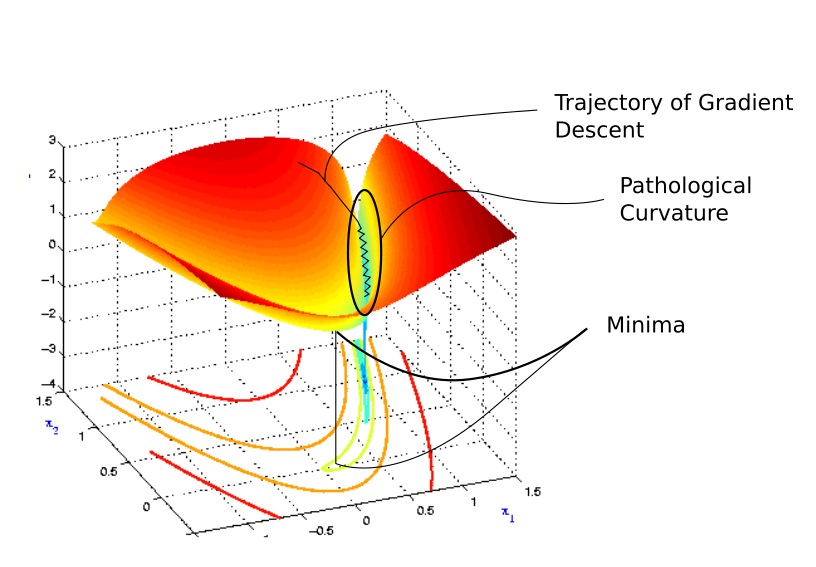

- Resilient Propagation (Rprop)
  - Riedmiller and Braun 1993
  - Address the problem of adaptive learning rate
  - Increase the learning rate for a weight multiplicatively if signs of last two gradients agree
  - Else decrease learning rate multiplicatively
- Rprop Update
- Rprop Initialization
  - Initialize all updates at iteration 0 to constant value
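
The sign-based rule described above can be sketched as follows. This is a simplified illustration under stated assumptions: the function name `rprop_step`, the factors 1.2 and 0.5, the step bounds, and the choice to leave the step unchanged when the previous gradient is zero are not taken from the slide, and the weight-backtracking variants of Rprop are not covered.

```python
def sign(x):
    """Return -1, 0 or 1 according to the sign of x."""
    return (x > 0) - (x < 0)

def rprop_step(weights, grads, prev_grads, steps,
               inc=1.2, dec=0.5, step_min=1e-6, step_max=50.0):
    """One simplified Rprop update over parallel lists of floats.

    inc, dec, step_min and step_max are assumed values for illustration.
    Returns (new_weights, new_steps).
    """
    new_weights, new_steps = [], []
    for w, g, pg, s in zip(weights, grads, prev_grads, steps):
        if g * pg > 0:        # last two gradients agree: grow the step
            s = min(s * inc, step_max)
        elif g * pg < 0:      # signs disagree: shrink the step
            s = max(s * dec, step_min)
        # If either gradient is zero, the step size is left unchanged (an assumption).
        # Move by the step size in the direction opposite to the gradient's sign.
        new_weights.append(w - sign(g) * s)
        new_steps.append(s)
    return new_weights, new_steps

# Toy usage: minimise f(w) = (w - 3)^2, every step size initialised to the constant 0.1.
w, prev_g, steps = [0.0], [0.0], [0.1]
for _ in range(100):
    g = [2.0 * (w[0] - 3.0)]
    w, steps = rprop_step(w, g, prev_g, steps)
    prev_g = g
```

Only the sign of each gradient enters the weight update; its magnitude affects nothing beyond the agree/disagree test.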

What is a good starting point for momentum AdaGrad / RMSProp?

- Momentum; AdaGrad / RMSProp; bias correction
- Bias correction for the fact that first and second moment estimates start at zero
- Adam with beta1 = 0.9, beta2 = 0.999, and learning_rate = 1e-3 or 5e-4 is a great starting point for many models!
- Fei-Fei Li & Justin Johnson & Serena Yeung
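
A scalar sketch of how the three pieces named above (momentum, the AdaGrad / RMSProp-style second moment, and bias correction) might fit together in one Adam step. The defaults for `beta1`, `beta2` and `lr` follow the values quoted above; the function name `adam_step` and the `eps` term are assumptions added for illustration.

```python
import math

def adam_step(w, g, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a single weight; t is the 1-based step count.

    eps is an assumed stabiliser. Returns (new_w, new_m, new_v).
    """
    m = beta1 * m + (1.0 - beta1) * g        # momentum: first moment estimate
    v = beta2 * v + (1.0 - beta2) * g * g    # RMSProp-like: second moment estimate
    # Bias correction: both estimates start at zero and are therefore
    # biased towards zero during the first steps.
    m_hat = m / (1.0 - beta1 ** t)
    v_hat = v / (1.0 - beta2 ** t)
    w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
    return w, m, v

# Toy usage: a few steps on f(w) = w^2, starting from w = 1.
w, m, v = 1.0, 0.0, 0.0
for t in range(1, 11):
    w, m, v = adam_step(w, 2.0 * w, m, v, t)
```

At the very first step the corrected estimates equal the raw gradient and its square, so the weight moves by roughly `lr` regardless of the gradient's scale.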

How does Rprop initialization work?

- Rprop Initialization
  - Initialize all updates at iteration 0 to constant value
  - If you set both learning rates to 1, you get “Manhattan update rule”
  - Rprop effectively divides the gradient by its magnitude
  - You never update using the gradient itself, but by its sign
- Problems with Rprop

RMSProp

– Momentum does not care about the alignment of the axes. Neural Networks for Machine Learning, Lecture 6e. rmsprop: Divide the

Stochastic gradient descent

Optimization algorithms • Gradient descent: 1 batch 2 stochastic 3 with momentum 4 Nesterov accelerated 5 Adagrad 6 RMSprop 7 Adam

Optimization for Deep Networks

Optimization for Deep Networks. Ishan Misra. Overview: • Vanilla SGD • SGD + Momentum • NAG • Rprop • AdaGrad • RMSProp • AdaDelta • Adam

Adaptive Learning Rates - CEDAR

5. Algorithms with adaptive learning rates: 1. AdaGrad 2. RMSProp 3. Adam 4. Choosing the right optimization algorithm. 6. Approximate second-order methods


Optimizers

Combines and extends AdaGrad and RMSProp: ○ AdaGrad (Adaptive Gradient Algorithm) maintains a per-parameter learning rate that improves performance
