English Wikipedia dataset

How many GB is all of Wikipedia?

The total number of pages is 59,981,854.

Articles make up 11.31 percent of all pages on Wikipedia.
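Taken together, the two figures above imply an approximate article count. The following is a minimal sketch of that arithmetic, assuming the 11.31 percent share applies to the 59,981,854 page total quoted above; the constant and function names are illustrative only.

```python
# Figures quoted in the text above.
TOTAL_PAGES = 59_981_854
ARTICLE_SHARE = 0.1131  # 11.31 percent, expressed as a fraction


def estimate_articles(total_pages: int, share: float) -> int:
    """Return the rounded number of pages that are articles."""
    return round(total_pages * share)


articles = estimate_articles(TOTAL_PAGES, ARTICLE_SHARE)
print(f"Estimated articles: {articles:,}")
```

Under these assumptions the estimate comes to roughly 6.78 million articles.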

As of 2 July 2023, the size of the current version of all articles compressed is about 22.14 GB without media.

The WikiText language modeling dataset is a collection of over 100 million tokens extracted from the set of verified Good and Featured articles on Wikipedia.

What is Wikipedia dataset?

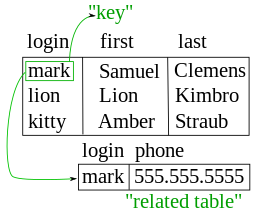

Wikipedia dataset containing cleaned articles of all languages.

The datasets are built from the Wikipedia dump (https://dumps.wikimedia.org/) with one split per language.

Each example contains the content of one full Wikipedia article with cleaning to strip markdown and unwanted sections (references, etc.).
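The kind of cleaning described above can be illustrated with a small sketch. This is not the dataset's actual pipeline: the regular expressions, the list of dropped sections, and the function name `clean_wikitext` are all assumptions made for illustration, and real wiki markup has many more constructs (templates, tables, nested links) than this handles.

```python
import re

# Assumption: these trailing sections count as "unwanted" for illustration.
UNWANTED_SECTIONS = r"^==\s*(?:References|External links|See also)\s*==\s*$"


def clean_wikitext(text: str) -> str:
    """Strip a few common wiki markup constructs from a toy article."""
    # Keep only the text before the first unwanted section heading.
    text = re.split(UNWANTED_SECTIONS, text, maxsplit=1, flags=re.MULTILINE)[0]
    # Remove self-closing reference tags, then paired <ref>...</ref> tags.
    text = re.sub(r"<ref[^>]*?/>", "", text)
    text = re.sub(r"<ref[^>]*>.*?</ref>", "", text, flags=re.DOTALL)
    # [[target|label]] becomes label; [[target]] becomes target.
    text = re.sub(r"\[\[(?:[^\]|]*\|)?([^\]]*)\]\]", r"\1", text)
    # Drop bold and italic quote markers.
    text = re.sub(r"'{2,}", "", text)
    return text.strip()


sample = (
    "'''Python''' is a [[programming language|language]] created by "
    "[[Guido van Rossum]].<ref>Some citation</ref>\n\n"
    "== References ==\n<references />"
)
cleaned = clean_wikitext(sample)
print(cleaned)
```

A production cleaner would more likely rely on a dedicated wikitext parser than on regular expressions; the sketch only shows the idea of removing markup and reference sections.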

How do I get data from Wikipedia?

To scrape public Wikipedia page data, you'll need an automated solution like Oxylabs' Web Scraper API or a custom-built scraper.

Web Scraper API is a web scraping infrastructure that, after receiving your request, gathers publicly available Wikipedia page data according to your request.
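As one example of the custom-built route, the sketch below uses Wikipedia's public MediaWiki Action API rather than the commercial service named above. It is a minimal illustration, not a full scraper: the function names and the User-Agent string are placeholders, and the response handling assumes the API's default JSON layout for `prop=extracts` queries.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

API_ENDPOINT = "https://en.wikipedia.org/w/api.php"


def build_extract_url(title: str) -> str:
    """Build a query URL asking for the plain-text extract of one page."""
    params = {
        "action": "query",
        "prop": "extracts",
        "explaintext": 1,
        "titles": title,
        "format": "json",
    }
    return f"{API_ENDPOINT}?{urlencode(params)}"


def fetch_extract(title: str) -> str:
    """Fetch the extract over the network (not called in this sketch)."""
    # Placeholder User-Agent; replace with your own contact details.
    headers = {"User-Agent": "example-script/0.1 (contact: you@example.com)"}
    request = Request(build_extract_url(title), headers=headers)
    with urlopen(request, timeout=10) as response:
        data = json.load(response)
    # Assumption: pages are keyed by page ID in the default JSON format.
    page = next(iter(data["query"]["pages"].values()))
    return page.get("extract", "")


url = build_extract_url("Alan Turing")
print(url)
```

Calling `fetch_extract("Alan Turing")` would perform the actual request. For bulk work, the dumps at https://dumps.wikimedia.org/ are usually a better fit than fetching pages one at a time.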

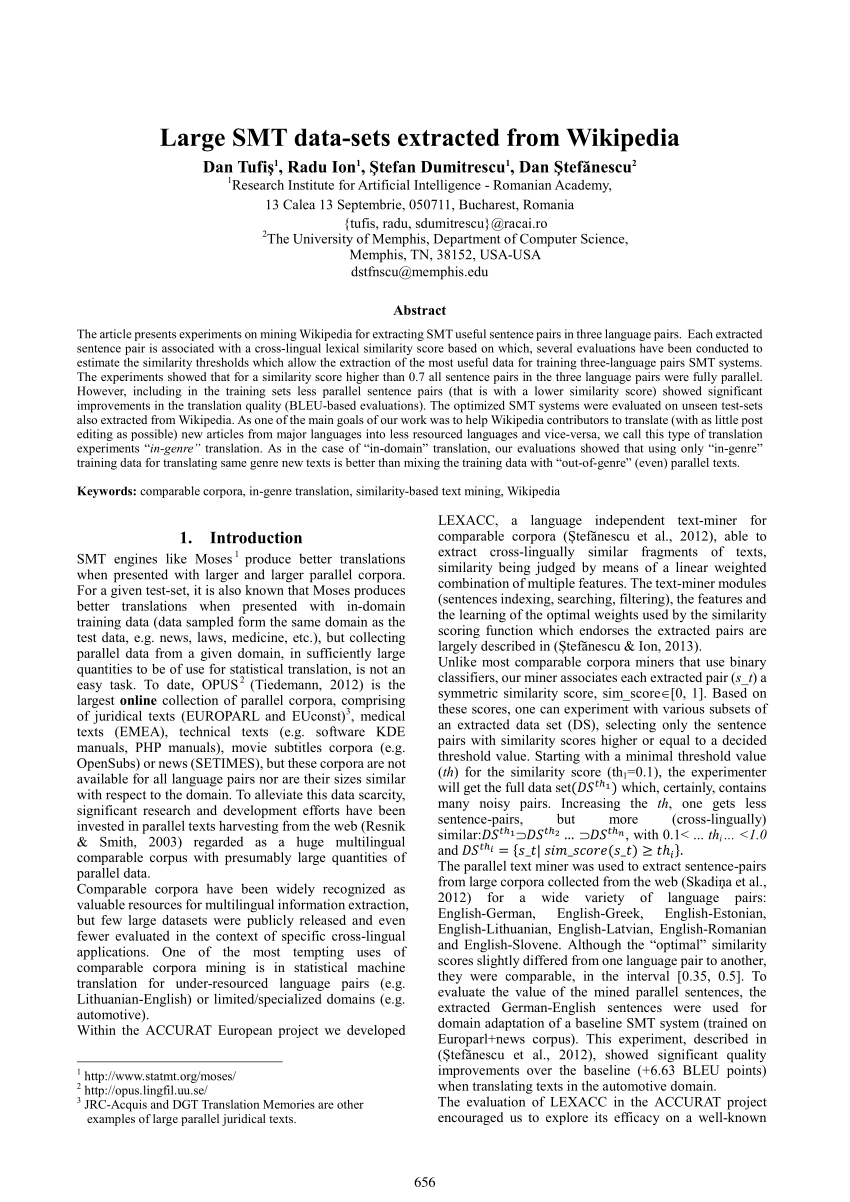

A Cross-Lingual Dictionary for English Wikipedia Concepts

WordNet: A lexical database for English. Communications of the ACM 38. D. Milne and I. H. Witten. 2008. Learning to link with Wikipedia. In CIKM.

A Novel Wikipedia based Dataset for Monolingual and Cross

Nov 10, 2021: The Wikipedia dataset consists of English and German articles

WikiHist.html: English Wikipedia's Full Revision History in HTML

Data and code are publicly available at https://doi.org/10.5281/zenodo.3605388. 1 Introduction. Wikipedia constitutes a dataset of primary importance for.

WikiLinkGraphs: A Complete Longitudinal and Multi-Language

Apr 4, 2019: present a complete dataset of the network of internal Wiki- ... English Wikipedia

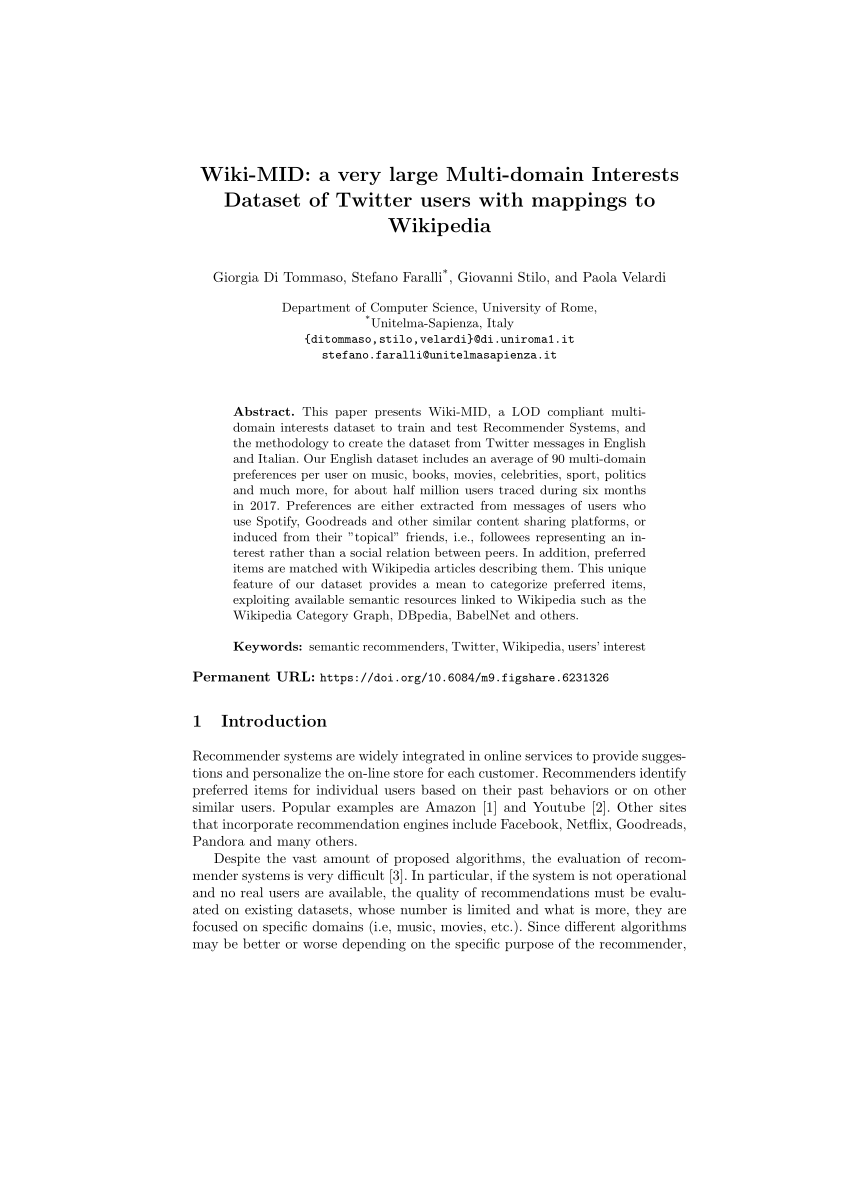

WIT: Wikipedia-based Image Text Dataset for Multimodal

Mar 3, 2021: datasets is the number of languages covered. By transitioning from English-only to highly multilingual language datasets

A Large Scale Dataset for Content Reliability on Wikipedia

Jun 1, 2021: this gap

Wiki-40B: Multilingual Language Model Dataset

A graph-structured dataset for Wikipedia research

Mar 20, 2019: the temporal evolution of Wikipedia hyperlinks graph. Bellomi and Bonato conducted a study [3] of macro-structure of English Wikipedia network ...

Text Segmentation as a Supervised Learning Task

Mar 25, 2018: For this work we have created a new dataset

Citation Detective: a Public Dataset to Improve and Quantify

To fill this gap we present Citation Detective

English Wikipedia's Full Revision History in HTML Format

Wikipedia is implemented as an instance of MediaWiki, a content management system written in PHP, built around a backend database that stores all

Wikipedia Detox - Ellery Wulczyn

This reveals that the majority of personal attacks on Wikipedia are not the result ... This study uses data from English Wikipedia, which for brevity we will simply

Wiki-40B: Multilingual Language Model Dataset - Association for

ulary for English can already achieve a high coverage rate (Baayen, 1996 ... We choose Wikipedia as our benchmark dataset for its permissive licensing

A Topic-Aligned Multilingual Corpus of Wikipedia Articles for

coverage in English Wikipedia (most exhaustive) and Wikipedias in eight other widely spoken ... The resulting dataset of the topically-aligned articles in dif-

English Wikipedia On Hadoop Cluster - VTechWorks - Virginia Tech

May 4, 2016: 1 Executive Summary. To develop and test big data software, one thing that is required is a big dataset. The full English Wikipedia dataset