Pre-trained contextual embedding of source code


Learning and Evaluating Contextual Embedding of Source Code

We present the first pre-trained contextual embedding of source code. Our model, CuBERT, shows strong performance against baselines. We hope that our models ...

When the focus corpus is large, static embeddings reflect related concepts, while contextualized embeddings often show synonyms or cohypernyms.

Static embeddings trained only on the focus corpus capture opposing opinions better than contextualized embeddings.

What is contextual embedding?

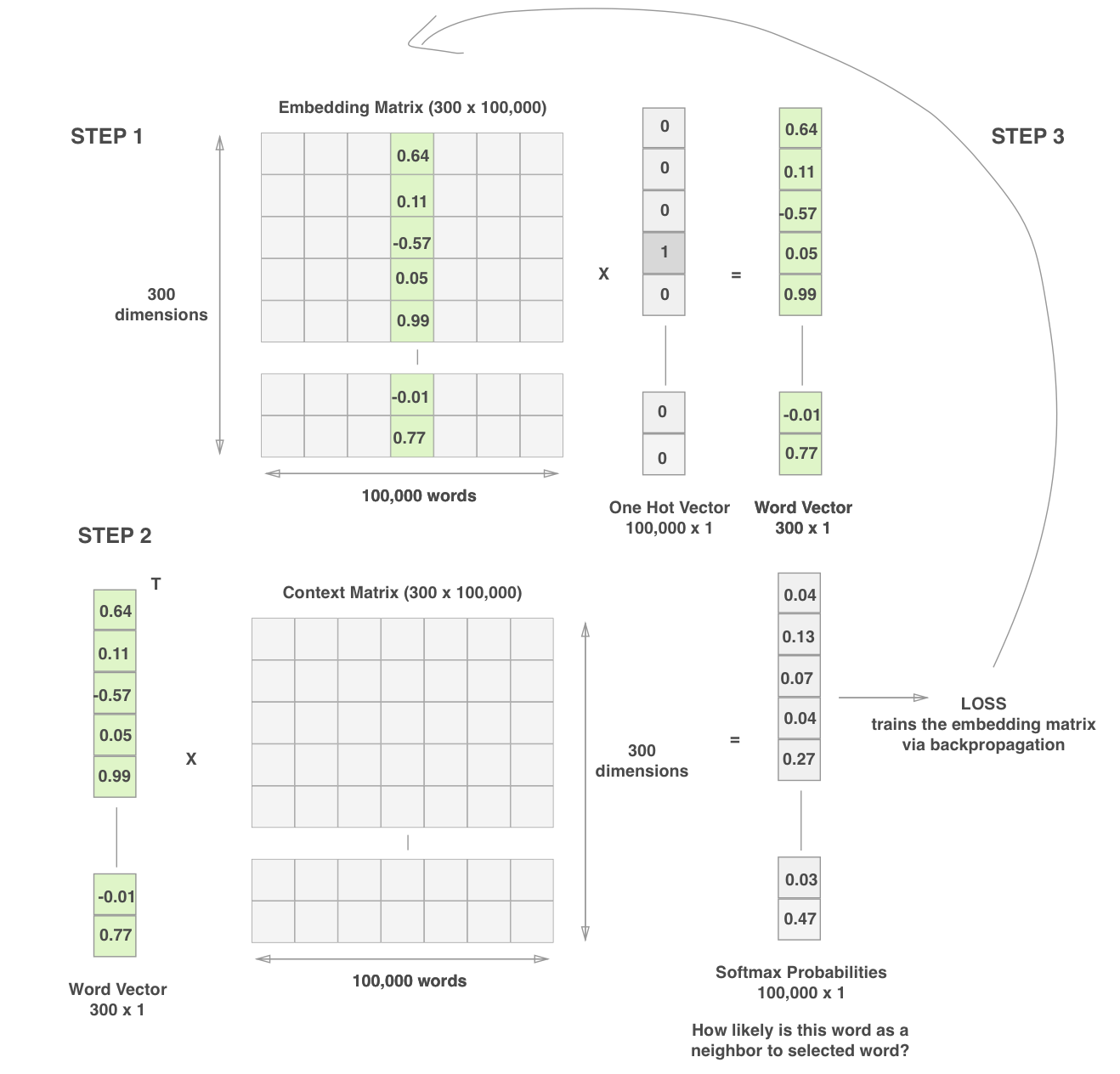

Traditional word embeddings would generate the same vector for "bank" in both sentences, failing to capture the different meanings.

Contextual embeddings, on the other hand, would generate different vectors for each instance of "bank", reflecting the different meanings implied by the context.
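The contrast can be sketched with a toy example. This is only an illustration, not how BERT or any real model computes embeddings: the vectors are made-up values, the two sentences are hypothetical stand-ins for the ones referred to above, and `contextual_embedding` simply blends a word's static vector with the average of its neighbours' vectors.

```python
# Hypothetical 3-dimensional static vectors; the values are invented for illustration.
STATIC = {
    "the": [0.0, 0.0, 1.0],
    "river": [0.0, 1.0, 0.0],
    "money": [1.0, 0.0, 0.0],
    "bank": [0.5, 0.5, 0.0],
}


def static_embedding(tokens, index):
    """Look up the vector by word identity only; the context is ignored."""
    return STATIC[tokens[index]]


def contextual_embedding(tokens, index, mix=0.5):
    """Blend the word's static vector with the mean vector of the other tokens.

    A toy stand-in for a contextual encoder, assumed here only to show
    that the result depends on the surrounding words.
    """
    own = STATIC[tokens[index]]
    others = [STATIC[t] for i, t in enumerate(tokens) if i != index]
    dim = len(own)
    mean = [sum(v[d] for v in others) / len(others) for d in range(dim)]
    return [(1 - mix) * own[d] + mix * mean[d] for d in range(dim)]


# Two hypothetical contexts for the same word "bank" (at index 2).
sent_a = ["the", "river", "bank"]
sent_b = ["the", "money", "bank"]

print(static_embedding(sent_a, 2) == static_embedding(sent_b, 2))          # same vector
print(contextual_embedding(sent_a, 2) == contextual_embedding(sent_b, 2))  # different vectors
```

In a real contextual model the blending is learned (for example through attention layers) rather than a fixed average, but the key property is the same: the vector for "bank" is a function of the whole sentence.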

Learning and Evaluating Contextual Embedding of Source Code

PRE-TRAINED CONTEXTUAL EMBEDDING OF SOURCE CODE

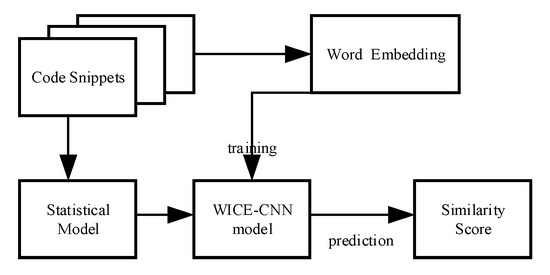

... further shown that pre-trained contextual embeddings can be extremely powerful ... embedding of source code by training a BERT model on source code.

Learning and Evaluating Contextual Embedding of Source Code

Q1: How do contextual embeddings compare against word embeddings? CuBERT outperforms BiLSTM models initialized with pre-trained source-code-specific ...

Learning and Evaluating Contextual Embedding of Source Code

A significant advancement in natural-language understanding has come with the development of pre-trained contextual embeddings such as BERT ...

What do pre-trained code models know about code?

25 Aug 2021 In order to determine whether the pre-trained vector embeddings of source code transformer models reflect code understanding in terms of ...

What Do They Capture? - A Structural Analysis of Pre-Trained

14 Feb 2022 Recently many pre-trained language models for source code have ... is embedded in the linear-transformed contextual word em- ...

Contextual Embeddings for Arabic-English Code-Switched Data

12 Dec 2020 ... open source trained bilingual contextual word embedding models of FLAIR ... (2017) implemented Arabic pre-trained word embedding models.

Multi-task Learning based Pre-trained Language Model for Code

29 Dec 2020 [24] extended this idea to programming language understanding tasks. They derived contextual embedding of source code by training a BERT model ...

Learning and Evaluating Contextual Embedding of Source Code

In this work, we derive a pre-trained contextual embedding of tokenized source code without explicitly modeling source-code-specific information, and show that the resulting embedding can be effectively fine-tuned for downstream tasks.
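To make "tokenized source code" concrete, here is a minimal sketch that splits a Python snippet into lexical tokens with the standard-library `tokenize` module. It is an assumption made for illustration, not a description of the tokenizer the paper used; the helper name `lex_python` and the sample statement are hypothetical.

```python
import io
import tokenize

# Layout-only tokens that carry no content of their own.
_SKIPPED = {
    tokenize.NEWLINE,
    tokenize.NL,
    tokenize.INDENT,
    tokenize.DEDENT,
    tokenize.ENDMARKER,
}


def lex_python(source):
    """Return (token type name, token text) pairs for a Python snippet."""
    readline = io.StringIO(source).readline
    pairs = []
    for tok in tokenize.generate_tokens(readline):
        if tok.type in _SKIPPED:
            continue
        pairs.append((tokenize.tok_name[tok.type], tok.string))
    return pairs


# Hypothetical one-line program used as input.
tokens = lex_python("total = price * 2\n")
for kind, text in tokens:
    print(kind, text)
```

A sequence like this, usually split further into subword units, is the kind of input a BERT-style model would be pre-trained on before being fine-tuned for a downstream task.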


Contextual Word Representations with BERT and Other Pre-trained

... pre-trained on text corpus from co-occurrence statistics ... Solution: Train contextual representations on text corpus ... ELMo: Deep Contextual Word Embeddings, AI2, University of ... Open Source Release ... Well-documented code


Contextual String Embeddings for Sequence Labeling

We release all code and pre-trained language models in a simple-to-use framework to the ... embeddings to other tasks: https://github.com/zalandoresearch/flair

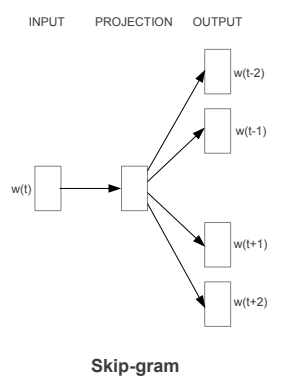

Source Code Level Word Embeddings in Aiding Semantic - CORE

... from source code could considerably aid software maintenance ... doc2vec model separately, thus each built training model is different ... steps of pre- and post-processing and thus the employment of contextual recommendation ...

Understanding the Downstream Instability of Word Embeddings

... 2013) and contextual word embeddings, such as BERT ... https://github.com/tmikolov/word2vec ... Unlike pre-trained word embeddings, contextual word em- ...
