a method for stochastic optimization adam

|

Adam: A Method for Stochastic Optimization

30 Jan 2017 · We introduce Adam, an algorithm for first-order gradient-based optimization of stochastic objective functions, based on adaptive estimates ... |

What are stochastic optimization methods?

Stochastic optimization is the process of maximizing or minimizing the value of an objective function when one or more of its input parameters is subject to randomness.
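As a minimal sketch of this idea, the example below minimizes the expected value of `(x - xi)**2`, where `xi` is a random input drawn from a Gaussian with mean 3. The objective, the distribution, the step size, and the function names are all assumptions chosen for illustration. Plain stochastic gradient descent sees only one random sample per step, yet it settles near the minimizer of the expected objective, which is the mean of `xi`.

```python
import random


def sample_gradient(x, rng):
    """Gradient of (x - xi)^2 with respect to x for one random draw of xi."""
    xi = rng.gauss(3.0, 1.0)  # the random input parameter (assumed distribution)
    return 2.0 * (x - xi)


def sgd(x0, steps=5000, lr=0.005, seed=0):
    """Plain stochastic gradient descent using one noisy gradient per step."""
    rng = random.Random(seed)
    x = x0
    for _ in range(steps):
        x -= lr * sample_gradient(x, rng)
    return x


x_final = sgd(x0=0.0)
print(x_final)  # lands close to 3.0, the mean of xi
```

The result fluctuates around the optimum rather than hitting it exactly, because every gradient is noisy. A smaller step size reduces that fluctuation at the cost of slower progress.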

What is the Adam method?

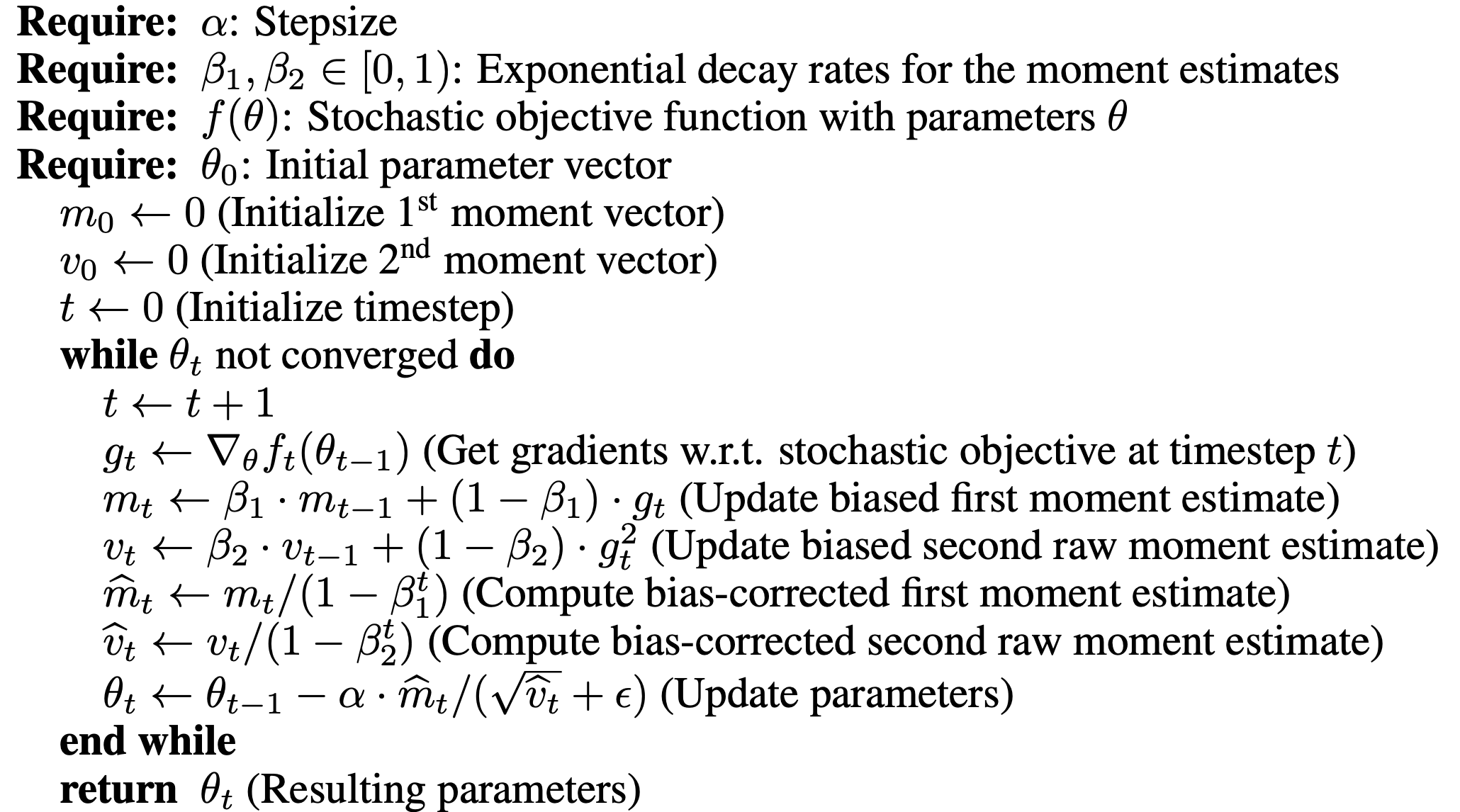

Adaptive Moment Estimation (Adam) is an optimization algorithm that extends gradient descent.

The method is efficient on large problems involving a lot of data or parameters.

It requires little memory and is computationally efficient.

What is the Adam optimizer technique?

The Adam optimizer, short for “Adaptive Moment Estimation,” is an iterative optimization algorithm used to minimize the loss function during the training of neural networks.

Adam can be looked at as a combination of RMSprop and Stochastic Gradient Descent with momentum.
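The combination described above can be sketched in a few lines. This is an illustrative implementation, not reference code: the first moment `m` plays the role of momentum, the second moment `v` plays the role of the RMSprop scaling, and both are bias-corrected before the update. The defaults `beta1=0.9`, `beta2=0.999` and `eps=1e-8` follow the values suggested in the Adam paper. The function name `adam_minimize`, the test objective, and the step size `lr=0.01` are assumptions made for this example.

```python
import math


def adam_minimize(grad_fn, theta, steps=5000, lr=0.01,
                  beta1=0.9, beta2=0.999, eps=1e-8):
    """Minimize a function given its gradient, using the Adam update rule."""
    theta = list(theta)
    m = [0.0] * len(theta)  # first moment: running mean of gradients (momentum)
    v = [0.0] * len(theta)  # second moment: running mean of squared gradients
    for t in range(1, steps + 1):
        g = grad_fn(theta)
        for i in range(len(theta)):
            m[i] = beta1 * m[i] + (1.0 - beta1) * g[i]
            v[i] = beta2 * v[i] + (1.0 - beta2) * g[i] * g[i]
            # Bias correction compensates for m and v starting at zero.
            m_hat = m[i] / (1.0 - beta1 ** t)
            v_hat = v[i] / (1.0 - beta2 ** t)
            theta[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta


def grad(theta):
    """Gradient of the assumed objective (x - 1)^2 + 10 * (y + 2)^2."""
    x, y = theta
    return [2.0 * (x - 1.0), 20.0 * (y + 2.0)]


solution = adam_minimize(grad, [0.0, 0.0])
print(solution)  # approaches [1.0, -2.0]
```

Because each coordinate is divided by the square root of its own `v_hat`, the two parameters move at a similar pace even though their gradients differ by a factor of ten.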

Adam was developed by Diederik P. Kingma and Jimmy Lei Ba.

Adam is well known to perform worse than SGD for image classification tasks [22].

For our experiment, we tuned the learning rate and could only get an accuracy of 71.16%.

In comparison, Adam-LAWN achieves an accuracy of more than 76%, marginally surpassing the performance of SGD-LAWN and SGD.

|

Adam: A Method for Stochastic Optimization - Diederik P. Kingma

18-Oct-2015 Adam: A Method for Stochastic Optimization ... 2 Adaptive Moment Estimation (Adam) ... Large-Scale → First-Order Stochastic Methods. |

|

Adam - A Method for Stochastic Optimization v2.1

Adam: A Method for Stochastic Optimization ... the distribution is unknown to the learning algorithm. ... down to solving the following optimization problem: ... |

|

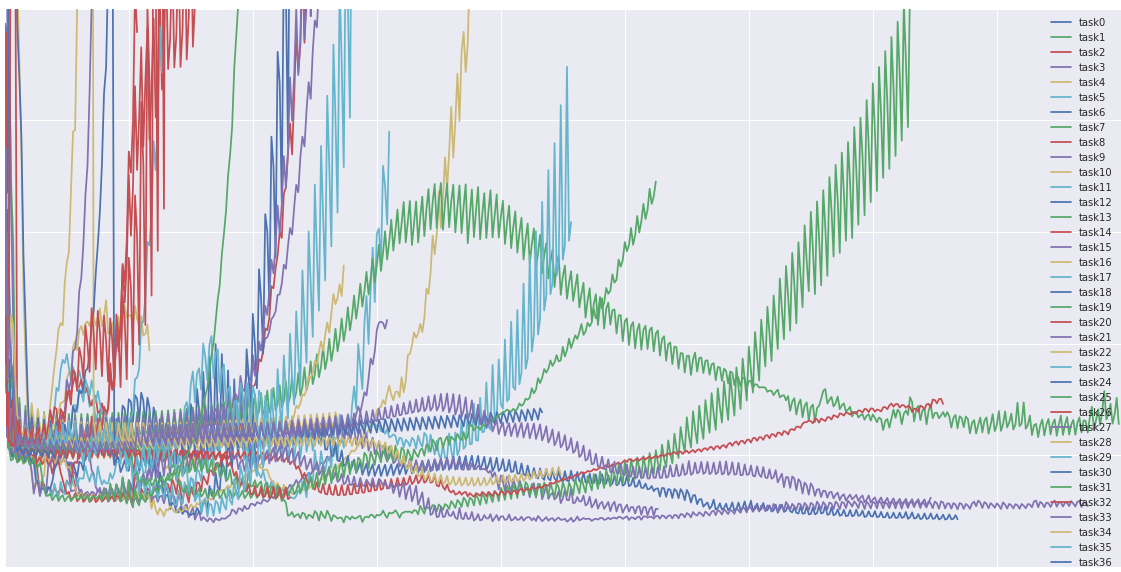

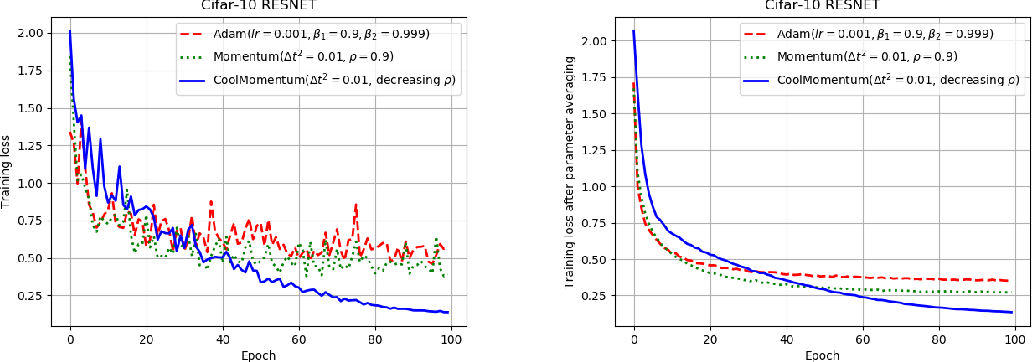

CoolMomentum: a method for stochastic optimization by Langevin

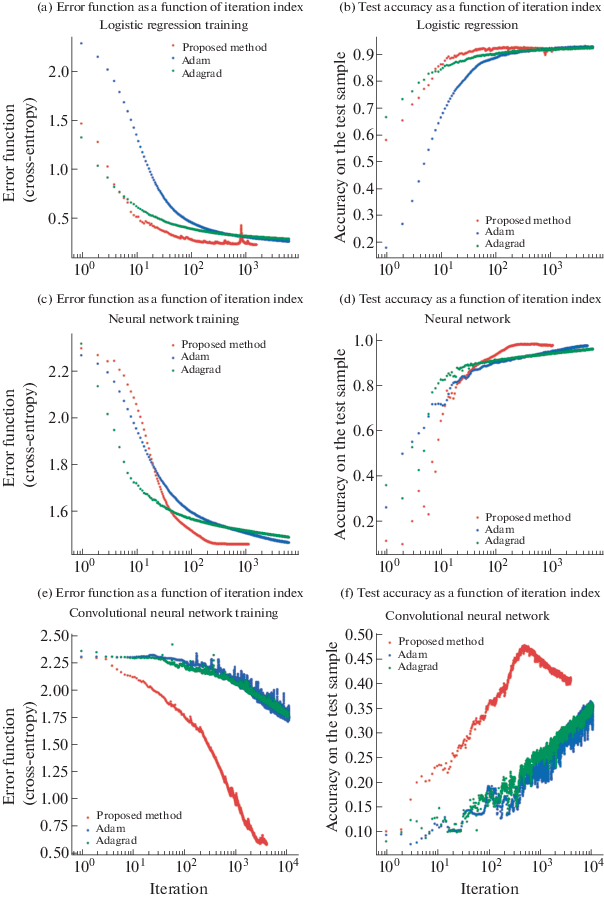

Empirically it is shown that several optimization algorithms, e.g. SGD with momentum ... |

|

Lecture 4: Optimization

16-Sept-2019 Adam (almost): RMSProp + Momentum. Kingma and Ba, “Adam: A method for stochastic optimization” |
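One plausible reading of "almost" here is that simply combining RMSProp with momentum still lacks Adam's bias correction. That reading is an assumption; the excerpt does not spell it out. The sketch below compares the size of the very first update with and without the correction, for a single scalar gradient. The function name `first_step` and the sample gradient value are hypothetical.

```python
import math


def first_step(g, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8,
               bias_correction=True):
    """Size of the first parameter update (t = 1) for a scalar gradient g."""
    # Both moment estimates start at zero, so after one step they are
    # scaled-down copies of g and g^2.
    m = (1.0 - beta1) * g
    v = (1.0 - beta2) * g * g
    if bias_correction:
        m /= (1.0 - beta1)  # 1 - beta1**t with t = 1
        v /= (1.0 - beta2)  # 1 - beta2**t with t = 1
    return lr * m / (math.sqrt(v) + eps)


corrected = first_step(5.0)
uncorrected = first_step(5.0, bias_correction=False)
print(corrected, uncorrected)
```

With the correction, the first update has magnitude close to `lr`. Without it, the second moment is underestimated more strongly than the first, so the first update is roughly three times larger under these default values.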

|

Adam: A Method for Stochastic Optimization - Diederik P. Kingma

Adam: A Method for Stochastic Optimization. Diederik P. Kingma, Jimmy Lei Ba. Jaya Narasimhan. February 10 |

|

Dyna: A Method of Momentum for Stochastic Optimization

12-May-2018 The dynamic relaxation is adapted for stochastic optimization of nonlinear ... tation of the algorithm is similar to the Adam Optimizer ... |

|

STOCHASTIC OPTIMIZATION

12-Sept-2019 SVGD: a Virtual Gradients Descent Method for Stochastic Optimization. Zheng Li and Shi Shu. EasyChair preprints are intended for rapid ... |

|

ACMo: Angle-Calibrated Moment Methods for Stochastic Optimization

... method (ACMo), a novel stochastic optimization method. It ... state-of-the-art Adam-type optimizers |

|

Adam: A Method for Stochastic Optimization - HUJI moodle

18 Oct 2015 · Adam: A Method for Stochastic Optimization ... 2 Adaptive Moment Estimation (Adam) ... Large-Scale → First-Order Stochastic Methods |

|

PbSGD: Powered Stochastic Gradient Descent Methods for - IJCAI

methods for stochastic optimization with and without momentum; 2) conduct popular adaptive methods: AdaGrad, RMSprop and Adam ... This section is mainly |

|

Deep Learning - Optimization - Erwan Scornet

Stochastic Gradient Descent ... ADAM: Adaptive moment estimation ... for any distribution function F ... Another popular choice is the Gaussian distribution |

|

Stochastic Optimization for Machine Learning - Shuai Zheng

This method shows strong connections with deep learning optimizers such as RMSprop and Adam. In this survey, we aim to give a comprehensive overview of |

|

Optimizers

Cost function: How good is your neural network? Then: Stochastic gradient descent ... Adam: A Method for Stochastic Optimization (Kingma & Ba, 2015) |

|

Improved Adam Optimizer for Deep Neural Networks - IEEE/ACM

Most practical optimization methods for deep neural networks (DNNs) are based on the stochastic gradient descent (SGD) algorithm. However, the learning rate |

|

Dissecting Adam: The Sign, Magnitude and Variance of Stochastic

Dissecting Adam: The Sign, Magnitude and Variance of Stochastic Gradients ... gradient in each step of an iterative optimization algorithm becomes inefficient for |

![CoolMomentum: A Method for Stochastic Optimization by](https://www.mdpi.com/electronics/electronics-09-02055/article_deploy/html/images/electronics-09-02055-g001.png)