cluster analysis lecture notes ppt

|

Lecture Notes for Chapter 8 Introduction to Data Mining

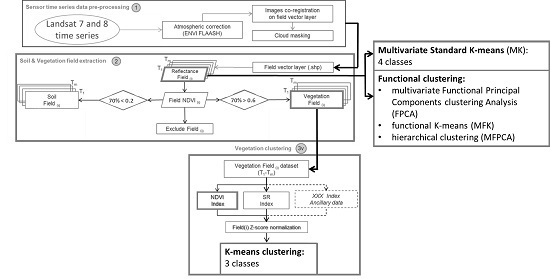

Prototype-based: fuzzy c-means, mixture model clustering, self-organizing maps. Density-based: grid-based clustering, subspace clustering (CLIQUE), kernel-based (DENCLUE). Graph-based: Chameleon, Jarvis-Patrick, Shared Nearest Neighbor (SNN). Characteristics of clustering algorithms. |

|

Chapter 15 Cluster analysis

The objective of cluster analysis is to assign observations to groups ("clusters") so that observations within each group are similar to one another with respect to variables or attributes of interest, and the groups themselves stand apart from one another. |

|

Lecture14

K-Means (figure credit: Eisen et al., PNAS 1998). An iterative clustering algorithm. Initialize: pick K random points as cluster centers. Alternate: (1) assign data points to the closest cluster center; (2) change each cluster center to the average of its assigned points. Stop when no points' assignments change. |

|

Cluster Analysis: Basic Concepts and Algorithms

What is Cluster Analysis? Finding groups of objects such that the objects in a group will be similar (or related) to one another and different from (or unrelated to) the objects in other groups. Intra-cluster distances are minimized; inter-cluster distances are maximized. A clustering is a set of clusters, and each cluster contains a set of points. |

|

CS102 Spring2020

Some uses for clustering. Classification: assign labels to clusters; now have labeled training data for future classification. Identify similar items: for substitutes or recommendations; for de-duplication. Anomaly (outlier) detection: items that are far from any cluster. |

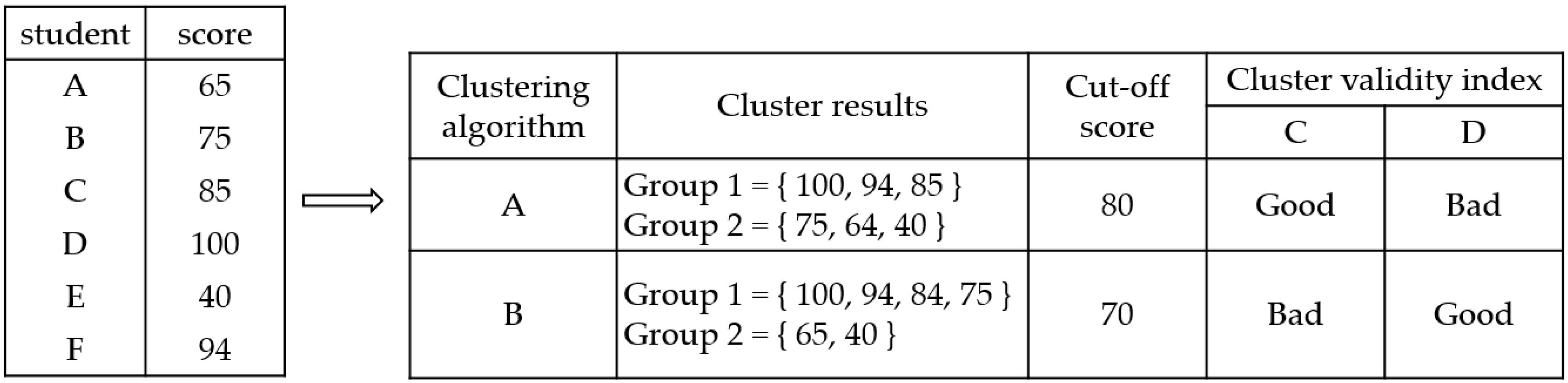

What is the difference between classification and cluster analysis?

In contrast to the classification problem, where each observation is known to belong to one of a number of groups and the objective is to predict the group to which a new observation belongs, cluster analysis seeks to discover the number and composition of the groups. There are a number of clustering methods.

What is the basic idea of clustering?

Clustering. Basic idea: group together similar instances. Example: 2D point patterns. What could “similar” mean?

How do I assign data points to a cluster center?

Pick K random points as cluster centers (means); the slide example uses K=2. Then alternate: 1. Assign data points to the closest cluster center. 2. Change each cluster center to the average of its assigned points. Stop when no points' assignments change.
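The assign/update loop above can be sketched in a few lines of plain Python. This is a minimal illustration, not the lecture's own code: the function name `kmeans`, the `seed` and `max_iter` parameters, squared Euclidean distance, and the rule of keeping an old center when a cluster becomes empty are all assumptions made for the example.

```python
import random

def kmeans(points, k, seed=0, max_iter=100):
    """Plain K-means on a list of equal-length numeric tuples (illustrative sketch)."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # initialize: pick K random data points
    assignment = None
    for _ in range(max_iter):
        # Step 1: assign each point to its closest center (squared Euclidean distance).
        new_assignment = [
            min(range(k), key=lambda j: sum((a - b) ** 2 for a, b in zip(p, centers[j])))
            for p in points
        ]
        if new_assignment == assignment:
            break  # stop: no point changed its assignment
        assignment = new_assignment
        # Step 2: move each center to the average of its assigned points.
        for j in range(k):
            members = [p for p, a in zip(points, assignment) if a == j]
            if members:  # assumption: an empty cluster keeps its previous center
                centers[j] = tuple(sum(c) / len(members) for c in zip(*members))
    return centers, assignment

points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
centers, labels = kmeans(points, k=2)
print(centers, labels)
```

Because the initial centers are random, different seeds can lead to different local optima on harder data, which is why K-means is often restarted several times.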

What is the objective of cluster analysis?

The objective of cluster analysis is to assign observations to groups ("clusters") so that observations within each group are similar to one another with respect to variables or attributes of interest, and the groups themselves stand apart from one another.

Outline

Prototype-based: fuzzy c-means, mixture model clustering, self-organizing maps. Density-based: grid-based clustering, subspace clustering (CLIQUE), kernel-based (DENCLUE). Graph-based: Chameleon, Jarvis-Patrick, Shared Nearest Neighbor (SNN). Characteristics of clustering algorithms.

Hard (Crisp) vs Soft (Fuzzy) Clustering

Hard (crisp) vs. soft (fuzzy) clustering: soft clustering allows a point to belong to more than one cluster. For K-means, this means generalizing the objective function.
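To make "belonging to more than one cluster" concrete, here is a small sketch of how soft membership weights can be computed for one point. It uses the standard fuzzy c-means membership formula, which is not spelled out in the excerpt above; the function name `fuzzy_memberships` and the fuzzifier parameter `m` are assumptions for illustration.

```python
def fuzzy_memberships(point, centers, m=2.0):
    """Membership weight of `point` in each cluster; the weights sum to 1.

    Uses the usual fuzzy c-means rule w_j = 1 / sum_k (d_j / d_k)^(2 / (m - 1)),
    where d_j is the Euclidean distance to center j and m > 1 is the fuzzifier.
    """
    dists = [sum((a - b) ** 2 for a, b in zip(point, c)) ** 0.5 for c in centers]
    if 0.0 in dists:
        # The point sits exactly on a center: give all weight to the coinciding center(s).
        hits = [1.0 if d == 0.0 else 0.0 for d in dists]
        return [h / sum(hits) for h in hits]
    exponent = 2.0 / (m - 1.0)
    return [1.0 / sum((d_j / d_k) ** exponent for d_k in dists) for d_j in dists]

# A point at 1.0 between centers at 0.0 and 3.0 belongs mostly, but not only, to the first.
print(fuzzy_memberships((1.0,), [(0.0,), (3.0,)]))
```

As `m` approaches 1 the weights approach a hard 0/1 assignment, which recovers ordinary K-means behaviour.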

Clustering Using Mixture Models

The idea is to model the set of data points as arising from a mixture of distributions. Typically, the normal (Gaussian) distribution is used, but other distributions have been very profitably used. Clusters are found by estimating the parameters of the statistical distributions using the Expectation-Maximization (EM) algorithm. k-means is a special case of th

Probabilistic Clustering: Example

Informal example: consider modeling the points that generate the following histogram. It looks like a combination of two normal (Gaussian) distributions. Suppose we can estimate the mean and standard deviation of each normal distribution. This completely describes the two clusters. We can compute the probabilities with which each point belongs to each c

Repeat

For each point, compute its probability under each distribution. Using these probabilities, update the parameters of each distribution. Until there is no change. Very similar to K-means: consists of assignment and update steps. Can use random initialization; problem of local minima. For normal distributions, typically use K-means to initialize. If using
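The repeat loop above can be sketched for the simplest case: one-dimensional data and two Gaussian components. Everything named here (`normal_pdf`, `em_two_gaussians`, the fixed iteration count `n_iter` in place of an "until no change" test, and the small floor on the standard deviation) is an assumption made for the illustration.

```python
import math

def normal_pdf(x, mean, std):
    """Density of a normal distribution at x."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))

def em_two_gaussians(xs, means, stds, weights=(0.5, 0.5), n_iter=50):
    """EM for a two-component 1-D Gaussian mixture (illustrative sketch)."""
    means, stds, weights = list(means), list(stds), list(weights)
    for _ in range(n_iter):
        # Assignment (E) step: probability of each point under each distribution.
        resp = []
        for x in xs:
            p = [w * normal_pdf(x, mu, s) for w, mu, s in zip(weights, means, stds)]
            total = sum(p)
            resp.append([pj / total for pj in p])
        # Update (M) step: re-estimate each distribution from the weighted points.
        for j in range(2):
            rj = [r[j] for r in resp]
            nj = sum(rj)
            means[j] = sum(r * x for r, x in zip(rj, xs)) / nj
            var = sum(r * (x - means[j]) ** 2 for r, x in zip(rj, xs)) / nj
            stds[j] = max(math.sqrt(var), 1e-6)  # assumed floor to avoid a zero std
            weights[j] = nj / len(xs)
    return means, stds, weights, resp

xs = [-4.2, -3.9, -4.1, -3.8, 3.9, 4.1, 4.0, 4.3]
means, stds, weights, resp = em_two_gaussians(xs, means=(-1.0, 1.0), stds=(1.0, 1.0))
print(means, stds, weights)
```

The structure mirrors K-means: the E-step is a soft assignment and the M-step is a weighted update of the cluster parameters.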

Probabilistic Clustering: Updating Centroids

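Update formula for weights assuming an estimate for statistical parameters:

$$c_j = \frac{\sum_{i=1}^{m} x_i \, p(C_j \mid x_i)}{\sum_{i=1}^{m} p(C_j \mid x_i)}$$

Here $x_i$ is a data point, $C_j$ is a cluster, and $c_j$ is its centroid.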

Problems with EM

Convergence can be slow. Only guarantees finding local maxima. Makes some significant statistical assumptions. The number of parameters for a Gaussian distribution grows as O(d^2), where d is the number of dimensions; these are the parameters associated with the covariance matrix. K-means only estimates cluster means, which grow as O(d).
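The O(d^2) versus O(d) growth can be checked with a quick count per cluster. The sketch below assumes a full (unconstrained, symmetric) covariance matrix; the function names are hypothetical.

```python
def gaussian_param_count(d):
    """Free parameters of one d-dimensional Gaussian with a full covariance matrix."""
    mean_params = d
    cov_params = d * (d + 1) // 2  # a symmetric d x d matrix has d(d+1)/2 free entries
    return mean_params + cov_params

def kmeans_param_count(d):
    """K-means stores only a centroid per cluster: one coordinate per dimension."""
    return d

for d in (2, 10, 100):
    print(d, gaussian_param_count(d), kmeans_param_count(d))
```

For d = 100 this gives 5150 parameters per Gaussian component against 100 per K-means centroid, which is one reason mixture models need far more data in high dimensions.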

Issues with SOM

High computational complexity. No guarantee of convergence. Choice of grid and other parameters is somewhat arbitrary. Lack of a specific objective function.

Subspace Clustering

Until now, we found clusters by considering all of the attributes. Some clusters may involve only a subset of attributes, i.e., subspaces of the data.

Clique – A Subspace Clustering Algorithm

A grid-based clustering algorithm that methodically finds subspace clusters. Partitions the data space into rectangular units of equal volume in all possible subspaces. Measures the density of each unit by the fraction of points it contains. A unit is dense if the fraction of overall points it contains is above a user specified threshold, A cluster
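The notion of a dense unit can be illustrated with a short sketch that grids one chosen subspace and keeps the cells whose fraction of points exceeds the threshold. This covers only the density test, not the full CLIQUE search over subspaces; the function name `dense_units`, its parameters, and the assumption that every attribute lies in the same range `[lo, hi]` are all illustrative.

```python
from collections import Counter

def dense_units(points, dims, n_intervals, lo, hi, threshold):
    """Grid cells of the subspace `dims` holding more than `threshold` of all points.

    Each attribute in `dims` is cut into `n_intervals` equal-width intervals over
    [lo, hi] (assumed identical for every attribute). Returns {cell: fraction}.
    """
    width = (hi - lo) / n_intervals
    counts = Counter()
    for p in points:
        # Map each selected coordinate to its interval index; clamp hi into the last cell.
        cell = tuple(min(int((p[d] - lo) / width), n_intervals - 1) for d in dims)
        counts[cell] += 1
    n = len(points)
    return {cell: c / n for cell, c in counts.items() if c / n > threshold}

points = [(0.1, 0.9), (0.15, 0.2), (0.12, 0.5), (0.8, 0.85), (0.5, 0.1)]
print(dense_units(points, dims=(0,), n_intervals=4, lo=0.0, hi=1.0, threshold=0.5))
print(dense_units(points, dims=(0, 1), n_intervals=4, lo=0.0, hi=1.0, threshold=0.5))
```

In this toy data the first attribute alone has a dense interval, while the two-attribute subspace has none: the three points that agree on attribute 0 are spread out along attribute 1.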

Clique Algorithm

It is impractical to check volume units in all possible subspaces, since there is an exponential number of such units. Monotone property of density-based clusters: if a set of points cannot form a density-based cluster in k dimensions, then the same set of points cannot form a density-based cluster in all possible supersets of those dimensions. Ver

Limitations of Clique

Time complexity is exponential in the number of dimensions, especially if “too many” dense units are generated at lower stages. May fail if clusters are of widely differing densities, since the threshold is fixed. Determining an appropriate threshold and unit interval length can be challenging.

Denclue (DENsity CLUstering)

Based on the notion of kernel-density estimation. The contribution of each point to the density is given by an influence or kernel function. [Figure: formula and plot of the Gaussian kernel.] The overall density is the sum of the contributions of all points. [Figure: example of density from a Gaussian kernel.] Source: Introduction to Data Mining, 2nd Edition (Tan, Steinbach, Karpatne, Kumar).
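Since the kernel formula itself did not survive in the text above, the sketch below assumes the common unnormalized Gaussian influence function exp(-dist(x, y)^2 / (2 sigma^2)) and sums it over all points. The function names and the `sigma` parameter are assumptions for illustration.

```python
import math

def gaussian_kernel(x, y, sigma):
    """Influence of point y at location x (unnormalized Gaussian kernel, assumed form)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2 * sigma ** 2))

def density(x, points, sigma=1.0):
    """Overall density at x: the sum of the contributions of all points."""
    return sum(gaussian_kernel(x, p, sigma) for p in points)

points = [(0.0, 0.0), (0.2, 0.1), (-0.1, 0.3), (5.0, 5.0)]
print(density((0.0, 0.0), points))  # inside the group of three points
print(density((2.5, 2.5), points))  # in the gap between the groups
```

Larger `sigma` values smooth the density and tend to merge nearby peaks; smaller values produce many narrow peaks.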

DENCLUE Algorithm

Find the density function. Identify local maxima (density attractors). Assign each point to the density attractor by following the direction of maximum increase in density. Define clusters as groups consisting of points associated with a density attractor. Discard clusters whose density attractor has a density less than a user specified minimum, Combine clusters

Graph-Based Clustering: General Concepts

Graph-based clustering needs a proximity graph. Each edge between two nodes has a weight, which is the proximity between the two points. Many hierarchical clustering algorithms can be viewed in graph terms. MIN (single link) merges subgraph pairs connected with a lowest weight edge. Group Average merges subgraph pairs that have high average connectivity.

Graph-Based Clustering: Chameleon

Based on several key ideas: sparsification of the proximity graph; partitioning the data into clusters that are relatively pure subclusters of the “true” clusters; merging based on preserving characteristics of clusters.

Graph-Based Clustering: Sparsification

The amount of data that needs to be processed is drastically reduced. Sparsification can eliminate more than 99% of the entries in a proximity matrix. The amount of time required to cluster the data is drastically reduced. The size of the problems that can be handled is increased.

Limitations of Current Merging Schemes

Existing merging schemes in hierarchical clustering algorithms are static in nature. MIN: merge two clusters based on their closeness (or minimum distance). GROUP-AVERAGE: merge two clusters based on their average connectivity. Closeness schemes will merge (a) and (b). Average connectivity schemes will mer

Chameleon: Clustering Using Dynamic Modeling

Adapt to the characteristics of the data set to find the natural clusters. Use a dynamic model to measure the similarity between clusters. The main properties are the relative closeness and relative inter-connectivity of the cluster. Two clusters are combined if the resulting cluster shares certain properties with the constituent clusters. The merging sche

Chameleon: Steps

Preprocessing step: represent the data by a graph. Given a set of points, construct the k-nearest-neighbor (k-NN) graph to capture the relationship between a point and its k nearest neighbors. The concept of neighborhood is captured dynamically (even if the region is sparse). Phase 1: use a multilevel graph partitioning algorithm on the graph to find a large

Chameleon: Steps

Phase 2: use hierarchical agglomerative clustering to merge sub-clusters. Two clusters are combined if the resulting cluster shares certain properties with the constituent clusters. Two key properties are used to model cluster similarity. Relative interconnectivity: absolute interconnectivity of two clusters normalized by the internal connectivity of the

Spectral Clustering

Spectral clustering is a graph-based clustering approach. It does a graph partitioning of the proximity graph of a data set, breaking the graph into components such that the nodes in a component are strongly connected to other nodes in the component and weakly connected to nodes in other components. See simple example below (W is

Strengths and Limitations

Can detect clusters of different shapes and sizes. Sensitive to how the graph is created and sparsified. Sensitive to outliers. Time complexity depends on the sparsity of the data matrix and is improved by sparsification.

Graph-Based Clustering: SNN Approach

Shared Nearest Neighbor (SNN) graph: the weight of an edge is the number of shared nearest neighbors between vertices, given that the vertices are connected. [Figure: an edge between vertices i and j with SNN weight 4.]
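The edge-weight definition above can be sketched as follows. The example assumes that two vertices count as "connected" when each appears in the other's k-nearest-neighbor list, which is the usual Jarvis-Patrick convention but is not stated in the text; the function names and the parameter `k` are illustrative.

```python
def knn_lists(points, k):
    """For each point, the set of indices of its k nearest other points."""
    neighbors = []
    for i, p in enumerate(points):
        others = sorted(
            (j for j in range(len(points)) if j != i),
            key=lambda j: sum((a - b) ** 2 for a, b in zip(p, points[j])),
        )
        neighbors.append(set(others[:k]))
    return neighbors

def snn_graph(points, k):
    """Edges {(i, j): weight}, weight = number of nearest neighbors shared by i and j."""
    nn = knn_lists(points, k)
    edges = {}
    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            # Assumed connectivity rule: i and j must be in each other's k-NN lists.
            if j in nn[i] and i in nn[j]:
                edges[(i, j)] = len(nn[i] & nn[j])
    return edges

points = [(0.0,), (1.0,), (2.0,), (10.0,)]
print(snn_graph(points, k=2))
```

The isolated point at 10.0 ends up with no edges: it lists the others as neighbors, but none of them lists it back.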

Creating the SNN Graph

Sparse graph: link weights are similarities between neighboring points. Shared near neighbor graph: link weights are the number of shared nearest neighbors.

When Jarvis-Patrick Does NOT Work Well

Smallest threshold, T, that does not merge clusters.

SNN Density-Based Clustering

Both are partitional. K-means is complete; DBSCAN is not. K-means has a prototype-based notion of a cluster; DBSCAN uses a density-based notion. K-means can find clusters that are not well-separated; DBSCAN will merge clusters that touch. DBSCAN handles clusters of different shapes and sizes; K-means prefers globular clusters. Comparison of DBSCAN and

|

Palmiye

Select cluster centers in ppt. Fundamentals Of Statistics Ppt World Statistics Day on 20 October happens when. Note: The formula for the standard error did the |

|

Lecture notes for STATG019 Selected Topics in Statistics: Cluster

(2014) Data Clustering: Algorithms and Applications |

|

Clustering Lecture 14

Clustering. Lecture 14. David Sontag. New York University. Slides adapted from Luke Zettlemoyer Vibhav Gogate |

|

Introduction to Cluster Analysis

5 June 2018 Lecture notes from C Shalizi 36-350 Data Mining |

|

LECTURE NOTES ON DATA MINING& DATA WAREHOUSING

What Is Cluster Analysis Types of Data in Cluster Analysis |

|

PowerPoint Presentation

*Some materials in this lecture are used from https://towardsdatascience.com/unsupervised-machine-learning-clustering-analysis-d40f2b34ae7e. |

|

Statistics: 3.1 Cluster Analysis 1 Introduction 2 Approaches to cluster

Therefore the choice of variables included in a cluster analysis must be underpinned by conceptual considerations. This is very important because the clusters |

|

Standard PowerPoint Presentation

1 National Reference Laboratory for Listeria monocytogenes CgMLST and SNPs analysis improved the identification of the clusters |

|

Data Mining Cluster Analysis: Basic Concepts and Algorithms

Lecture Notes for Chapter 8. Applications of Cluster Analysis: Understanding ... A division of data objects into non-overlapping subsets (clusters). |

| Clustering |

|

Clustering Lecture 14 - peoplecsailmitedu

Clustering Lecture 14 David Sontag New York University Slides adapted from Luke Zettlemoyer, Vibhav Gogate, Carlos Guestrin, Andrew Moore, Dan Klein |

|

Unsupervised Learning: Clustering - MIT

Clustering Shimon Ullman + Tomaso Poggio Danny Harari + Daneil Zysman + Darren Seibert 1 Choose k (random) data points (seeds) to be the initial centroids, cluster centers 2 Assign each data clustering analysis of the input data |

|

Chapter 15 Cluster analysis

The distance between each pair of observations is shown in Figure 15.4(a). Figure 15.4: Nearest neighbor method, Step 1. For example, the distance between a |

|

Lecture 18: Clustering & classification - Duke University

30 Oct 2003 · CPS260/BGT204 1 Algorithms in Computational Biology clusters Cluster analysis is also used to form descriptive statistics to ascertain whether [4] Jeong-Ho Chang http://cbit snu ac kr/tutorial-2002/ppt/ClusterAnalysis pdf |

|

Cluster Analysis - Computer Science & Engineering User Home Pages

Cluster analysis divides data into groups (clusters) that are meaningful, useful, the bibliographic notes provide references to relevant books and papers that satisfies the objective function, e.g., that minimizes the total SSE. Of course, |

|

Data Mining - Clustering

Poznan University of Technology Poznan, Poland Lecture 7 SE Master Course J Han course on data mining 1 A cluster is a subset of objects which are “ similar” 2 A subset of objects Cluster Analysis → Analiza skupień, Grupowanie |

|

CLUSTERING WITH GIS: AN ATTEMPT TO CLASSIFY TURKISH

1 Nov 2006 · Contains polygon data of district's administrative borders This map is actually base map for making thematic maps and analysis 1 November |

|

Cluster Analysis

10.3.1 Agglomerative versus Divisive Hierarchical Clustering. Cluster analysis, or simply clustering, is the process of partitioning a set of ... of presentation |

|

Data Mining Cluster Analysis: Advanced Concepts and Algorithms

Cluster Analysis: Advanced Concepts and Algorithms Lecture Notes for Chapter 9 Introduction to number of points from a cluster and then “shrinking” them |

|

Introduction to Data Mining

12 Feb 2020 · provides up-to-date information, lecture slides, and exercise material 19 02 2020 Lecture Cluster Analysis Presentation of project results |