adagrad

Adaptive Subgradient Methods for Online Learning and Stochastic

In contrast to AROW, the ADAGRAD algorithm uses the root of the inverse covariance matrix, a consequence of our formal analysis. Crammer et al.'s algorithm and

Adaptive Subgradient Methods for Online Learning and Stochastic

Before introducing our adaptive gradient algorithm, which we term ADAGRAD,

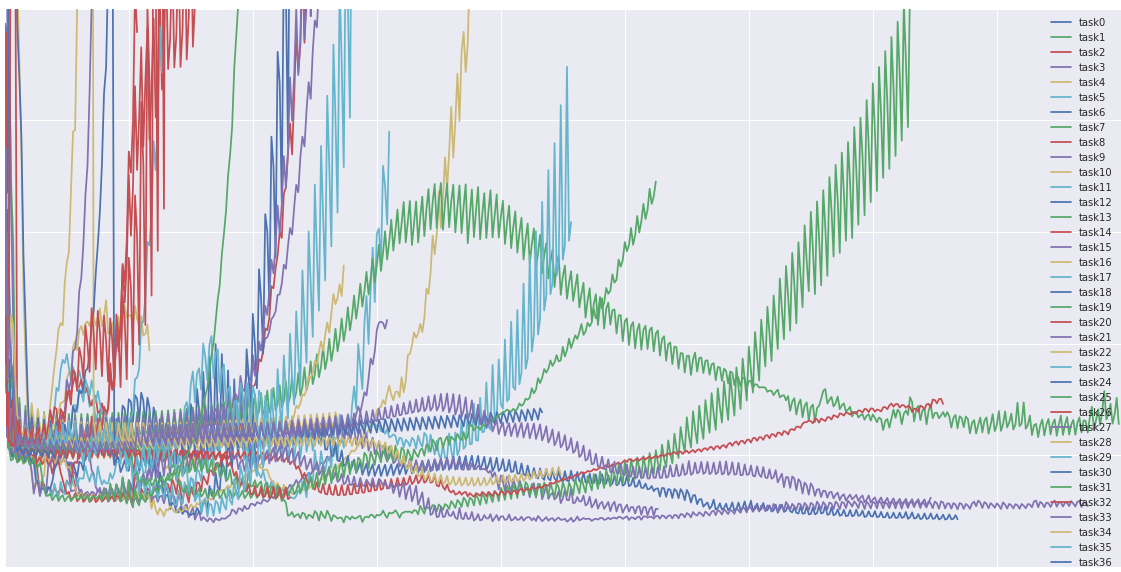

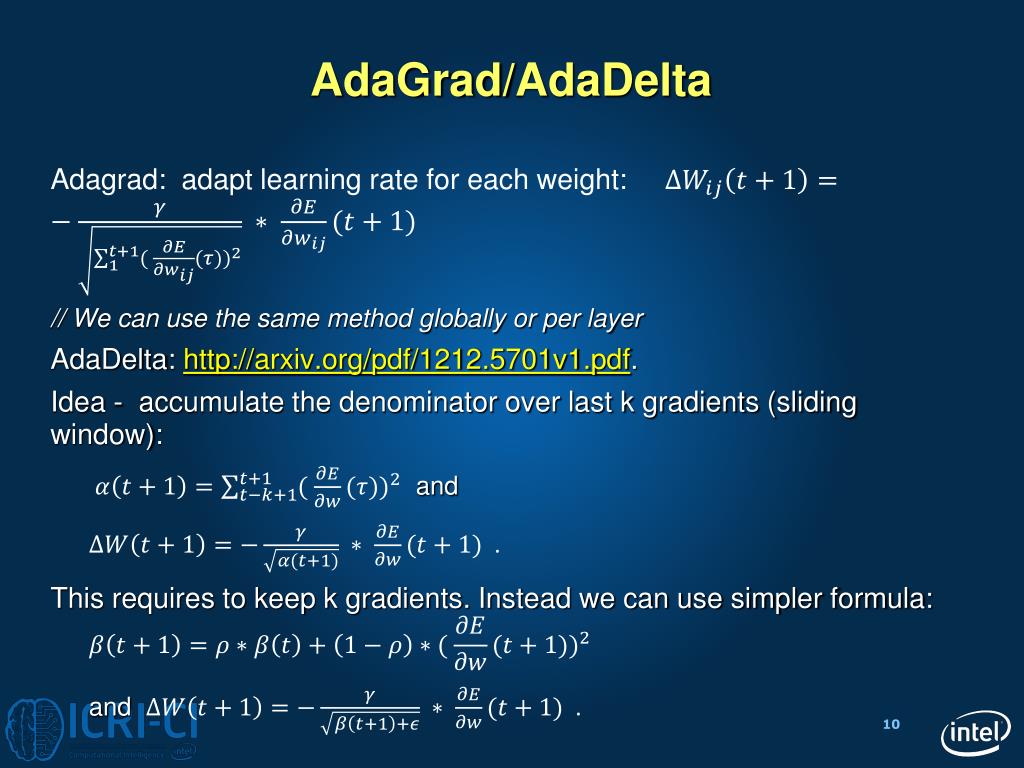

Adaptive Gradient Methods AdaGrad / Adam

Adagrad, AdaDelta

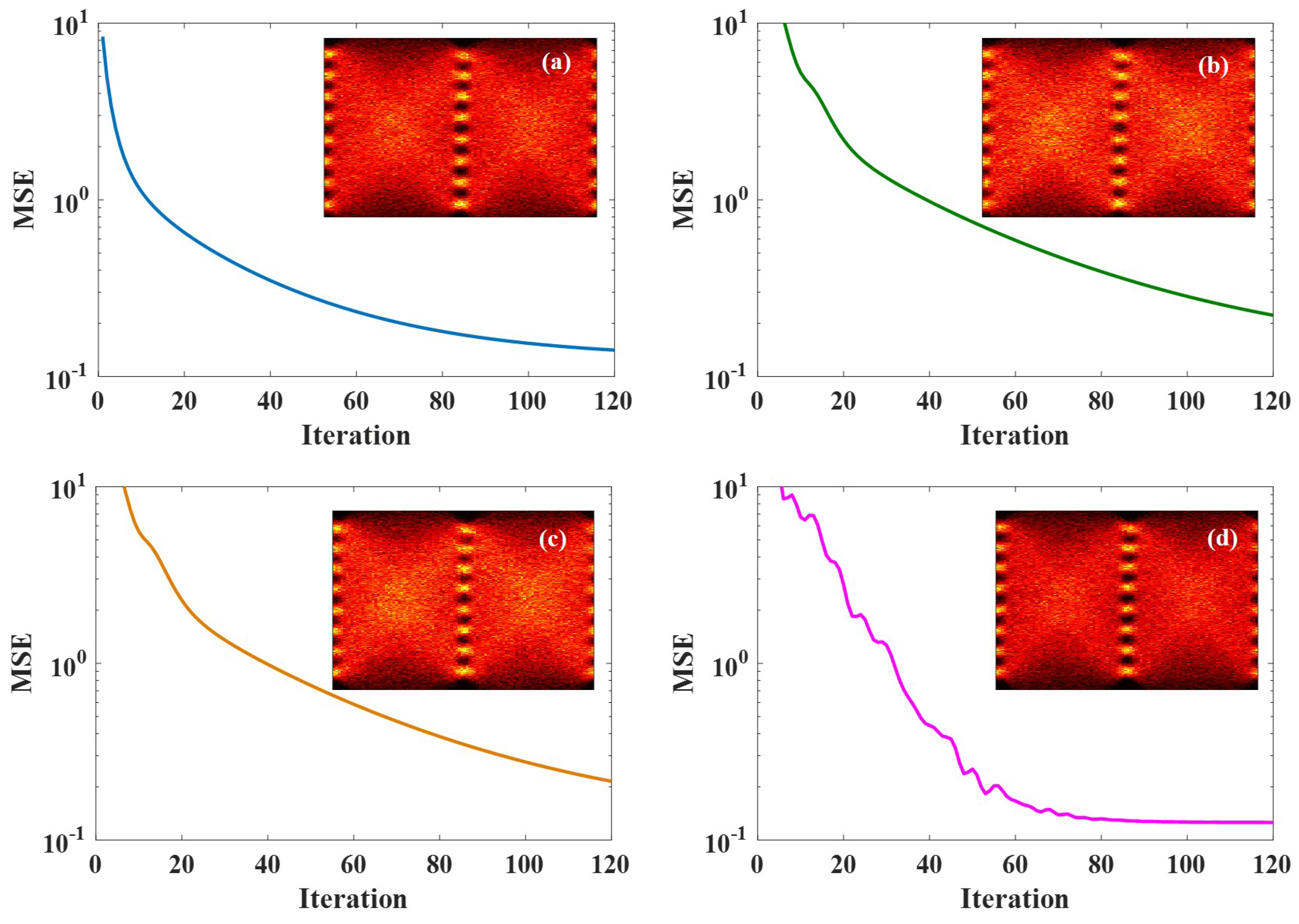

AdaGrad stepsizes: Sharp convergence over nonconvex landscapes

Abstract. Adaptive gradient methods such as AdaGrad and its variants update the stepsize in stochastic gradient descent on the fly according to the
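
The snippet above is cut off before it states the update rule, so the following is only a minimal sketch of one common scalar-stepsize variant (often called AdaGrad-Norm), written under that assumption. The function name `adagrad_norm`, the parameters `eta` and `b0`, and the toy quadratic objective are illustrative choices, not taken from the listing.

```python
import numpy as np

def adagrad_norm(grad_fn, x0, eta=1.0, b0=0.1, steps=100):
    """Gradient descent with a single stepsize eta / b_t that adapts on the fly.

    b_t^2 accumulates the squared norms of all gradients seen so far,
    so the stepsize shrinks automatically as gradients accumulate.
    """
    x = np.asarray(x0, dtype=float).copy()
    b_sq = b0 ** 2
    for _ in range(steps):
        g = grad_fn(x)
        b_sq += float(np.dot(g, g))        # accumulate squared gradient norm
        x -= (eta / np.sqrt(b_sq)) * g     # scalar adaptive stepsize
    return x

def quadratic_grad(x):
    # Gradient of the toy objective f(x) = 0.5 * ||x||^2 (hypothetical example).
    return x

x_final = adagrad_norm(quadratic_grad, x0=[3.0, -4.0], eta=1.0, b0=0.1, steps=200)
```

Here no stepsize schedule is tuned by hand; the accumulated gradient norms set the scale.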

Adagrad Adam and Online-to-Batch

04-Jul-2017 Adagrad. Adam. Online-To-Batch. Motivation. Stochastic Optimization. Standard stochastic gradient algorithms follow a predetermined scheme.

Why ADAGRAD Fails for Online Topic Modeling

... analyzing large datasets, and ADAGRAD is a widely-used technique for tuning learning rates during online gradient optimization.

Adagrad - An Optimizer for Stochastic Gradient Descent

The Adagrad optimizer, in contrast, modifies the learning rate, adapting to the direction of the descent towards the optimum value. In other words
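
As a rough illustration of how the learning rate adapts, here is a minimal sketch of the widely used diagonal (per-coordinate) form of the Adagrad update. The helper name `adagrad_step`, the defaults `lr` and `eps`, and the sample gradient are assumptions made for this example, not details from the listed article.

```python
import numpy as np

def adagrad_step(x, g, accum, lr=0.1, eps=1e-8):
    """One per-coordinate Adagrad update.

    accum holds the running sum of squared gradients for each coordinate;
    coordinates with large past gradients get a smaller effective step.
    """
    accum = accum + g * g
    x = x - lr * g / (np.sqrt(accum) + eps)
    return x, accum

# Two coordinates whose gradients differ in scale by a factor of 100.
x0 = np.array([1.0, 1.0])
g0 = np.array([100.0, 1.0])
x1, accum1 = adagrad_step(x0, g0, np.zeros_like(x0))
```

On the first step both coordinates move by about `lr`, regardless of gradient scale, because each gradient is divided by its own accumulated magnitude.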

(Nearly) Dimension Independent Private ERM with AdaGrad Rates

In this paper, we propose noisy-AdaGrad, a novel optimization algorithm that leverages gradient pre-conditioning and knowledge of the subspace in which

Adaptive Subgradient Methods for Online Learning and Stochastic

Our algorithm, called ADAGRAD,

AdaGrad - Adaptive Subgradient Methods for Online Learning and

Before introducing our adaptive gradient algorithm, which we term ADAGRAD, we establish notation. Vectors and scalars are lower case italic letters, such as x

Why ADAGRAD Fails for Online Topic Modeling - Association for

... analyzing large datasets, and ADAGRAD is a widely-used technique for tuning learning rates during online gradient optimization. However, these two techniques do

Adaptive Subgradient Methods for Online Learning and Stochastic

Our algorithm, called ADAGRAD, makes a second-order correction to the ... online gradient descent to suffer Ω(d²) loss while ADAGRAD suffers constant regret

AdaGrad Stepsizes - Proceedings of Machine Learning Research

AdaGrad Stepsizes: Sharp Convergence Over Nonconvex Landscapes. Rachel Ward, Xiaoxia Wu, Léon Bottou. Abstract: Adaptive gradient methods

Notes on AdaGrad - Miraheze

Notes on AdaGrad. Joseph Perla, 2014. 1 Introduction. Stochastic Gradient Descent (SGD) is a common online learning algorithm for optimizing convex (and

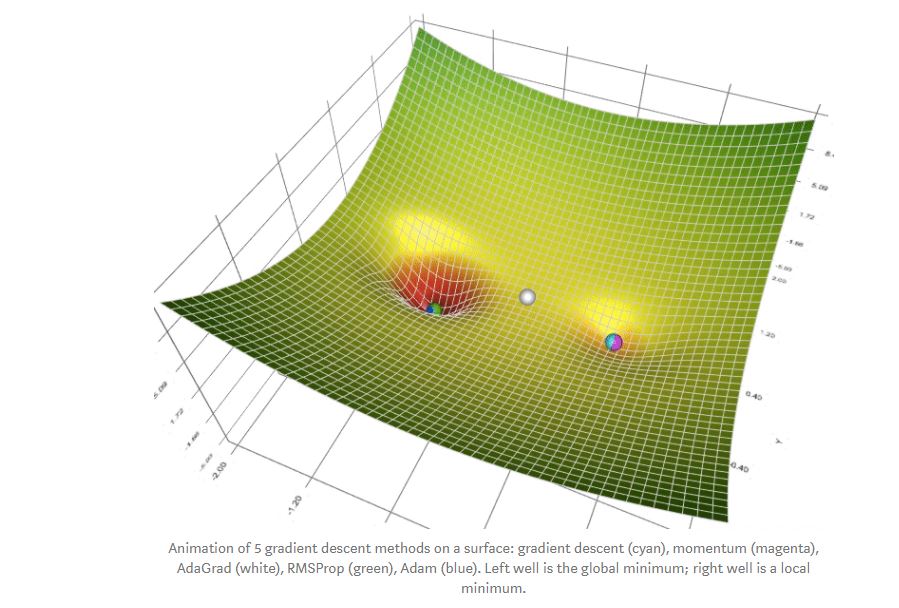

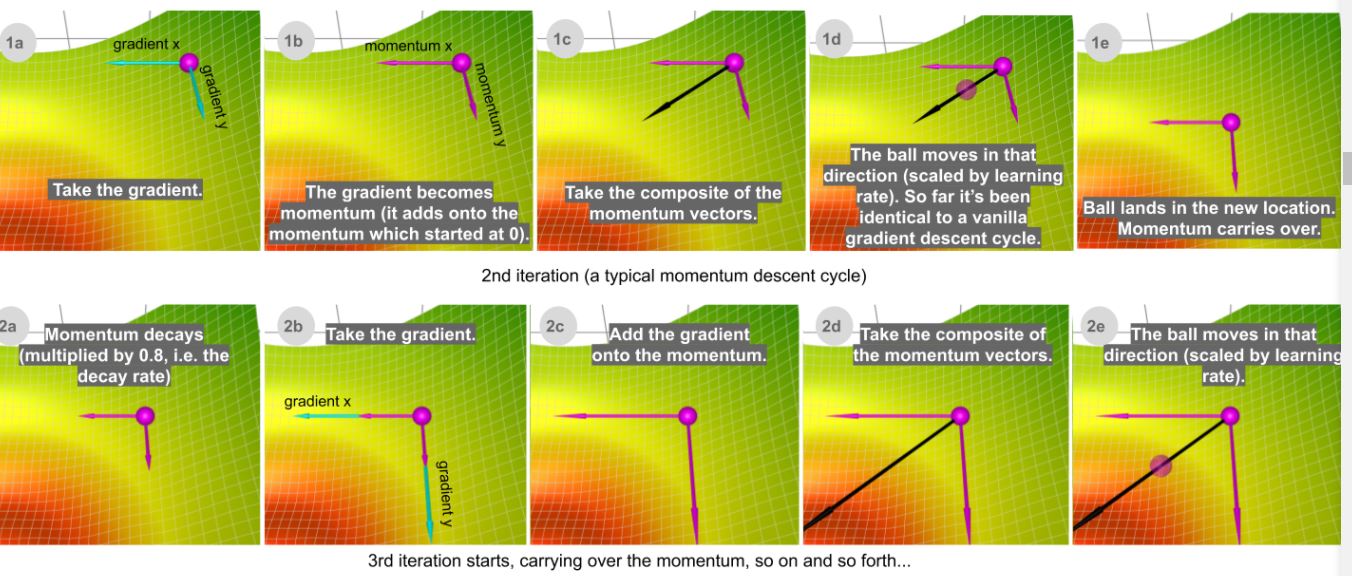

Stochastic gradient descent

Optimization algorithms • Gradient descent: 1. batch 2. stochastic 3. with momentum 4. Nesterov accelerated 5. Adagrad 6. RMSprop 7. Adam

The Implicit Bias of AdaGrad on Separable Data - NIPS Proceedings

We show that AdaGrad converges to a direction that can be characterized as the solution of a quadratic optimization problem with the same feasible set as the

Adagrad, Adam and Online-to-Batch

4 Jul 2017 · Let's begin by motivating Adagrad from 2 different viewpoints: stochastic optimization (brief) and online convex optimization.

Adaptive Gradient Methods AdaGrad / Adam - Washington

Adagrad, AdaDelta, RMSprop, ADAM, L-BFGS, heavy ball gradient, momentum – Noise injection: • Simulated annealing, dropout, Langevin methods

![AdaGrad stepsizes: sharp convergence over nonconvex](https://hackernoon.com/hn-images/1*BOTBDPGYVQ3X9u-Wc4OhzQ.png)

![Variants of RMSProp and Adagrad with Logarithmic Regret](https://miro.medium.com/max/500/1*Gcnvkcos6uQvnwVQesDPzg.png)