Adam: A Method for Stochastic Optimization (BibTeX)

Which is better, Adam or SGD?

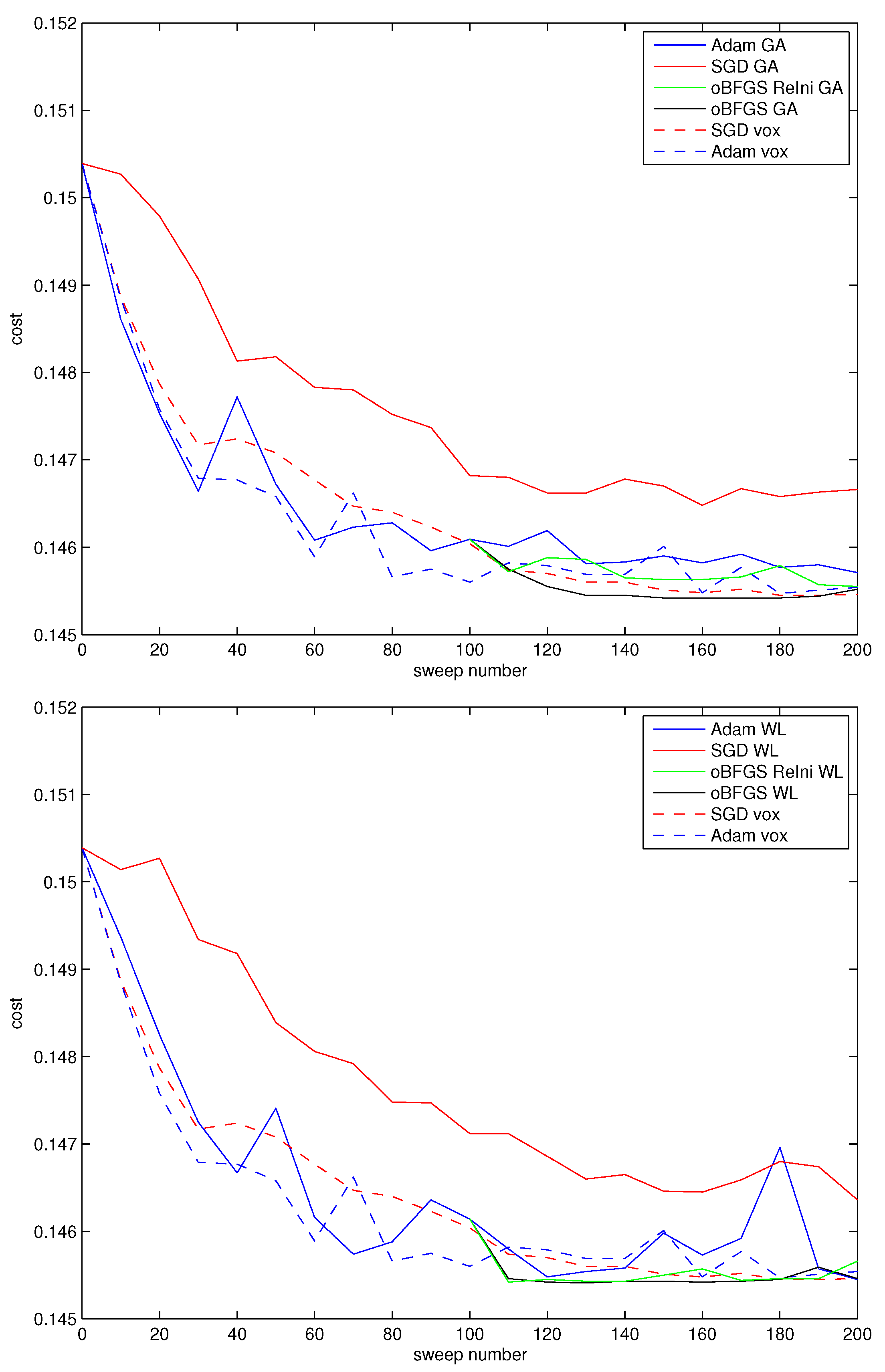

Adam is well known to perform worse than SGD for image classification tasks [22].

For our experiment, we tuned the learning rate and could only get an accuracy of 71.16%.

In comparison, Adam-LAWN achieves an accuracy of more than 76%, marginally surpassing the performance of SGD-LAWN and SGD.

Diederik Kingma, Jimmy Lei Ba. Adam: A Method for Stochastic Optimization. Published as a conference paper at ICLR 2015.
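The Adam reference quoted throughout these results can be written as a BibTeX entry. The following is a minimal sketch built only from details that appear in the snippets on this page (authors, title, arXiv:1412.6980, 2014). The citation key `kingma2014adam` is a hypothetical label, and the `@article` arXiv form is one possible choice; the conference version (ICLR 2015) could instead be recorded as `@inproceedings` with a `booktitle` field.

```bibtex
% Sketch of an entry for the arXiv preprint; the key name is an arbitrary choice.
@article{kingma2014adam,
  title   = {Adam: A Method for Stochastic Optimization},
  author  = {Kingma, Diederik P. and Ba, Jimmy},
  journal = {arXiv preprint arXiv:1412.6980},
  year    = {2014}
}
```

In a LaTeX document the entry would then be cited with `\cite{kingma2014adam}`, assuming the key above is kept.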


SGDR: STOCHASTIC GRADIENT DESCENT WITH WARM

Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014. A. Krizhevsky

Adaptive Subgradient Methods for Online Learning and Stochastic

Before introducing our adaptive gradient algorithm, which we term ADAGRAD

INCORPORATING NESTEROV MOMENTUM INTO ADAM

Diederik Kingma and Jimmy Ba. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014. Yann LeCun

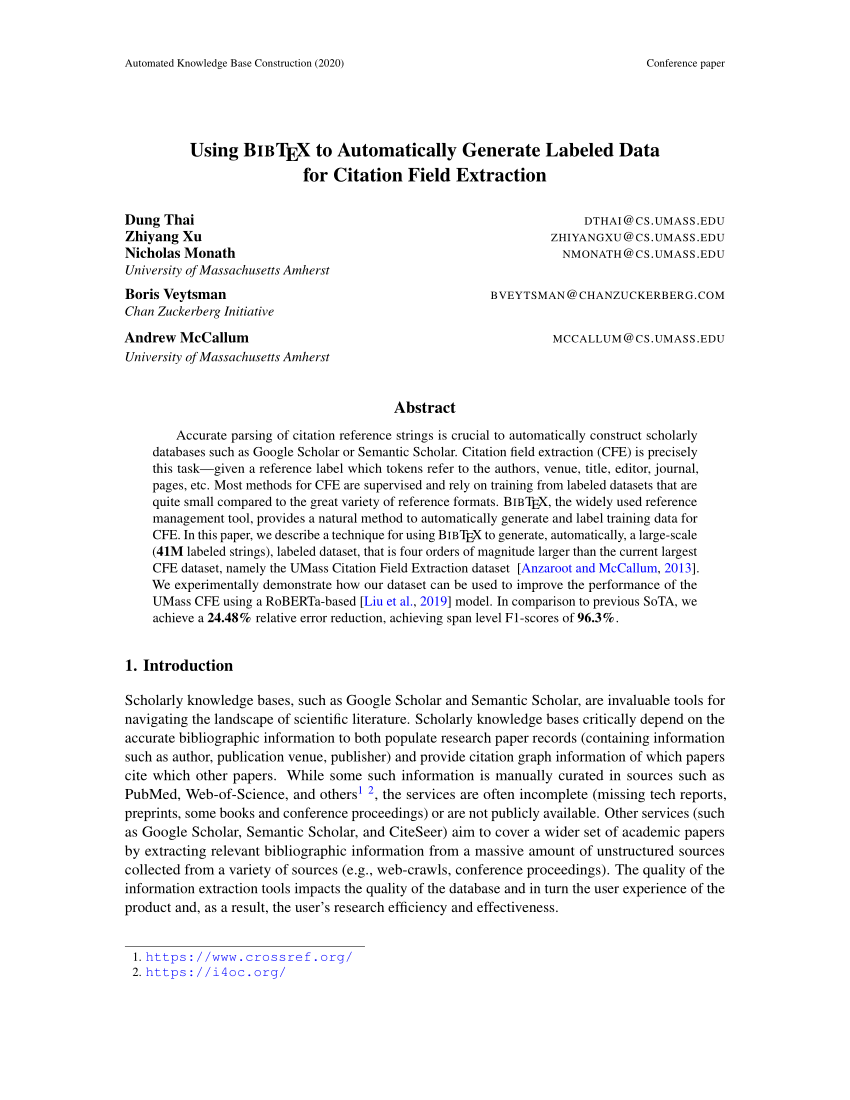

Using BIBTEX to Automatically Generate Labeled Data for Citation

In this paper we describe a technique for using BIBTEX to generate

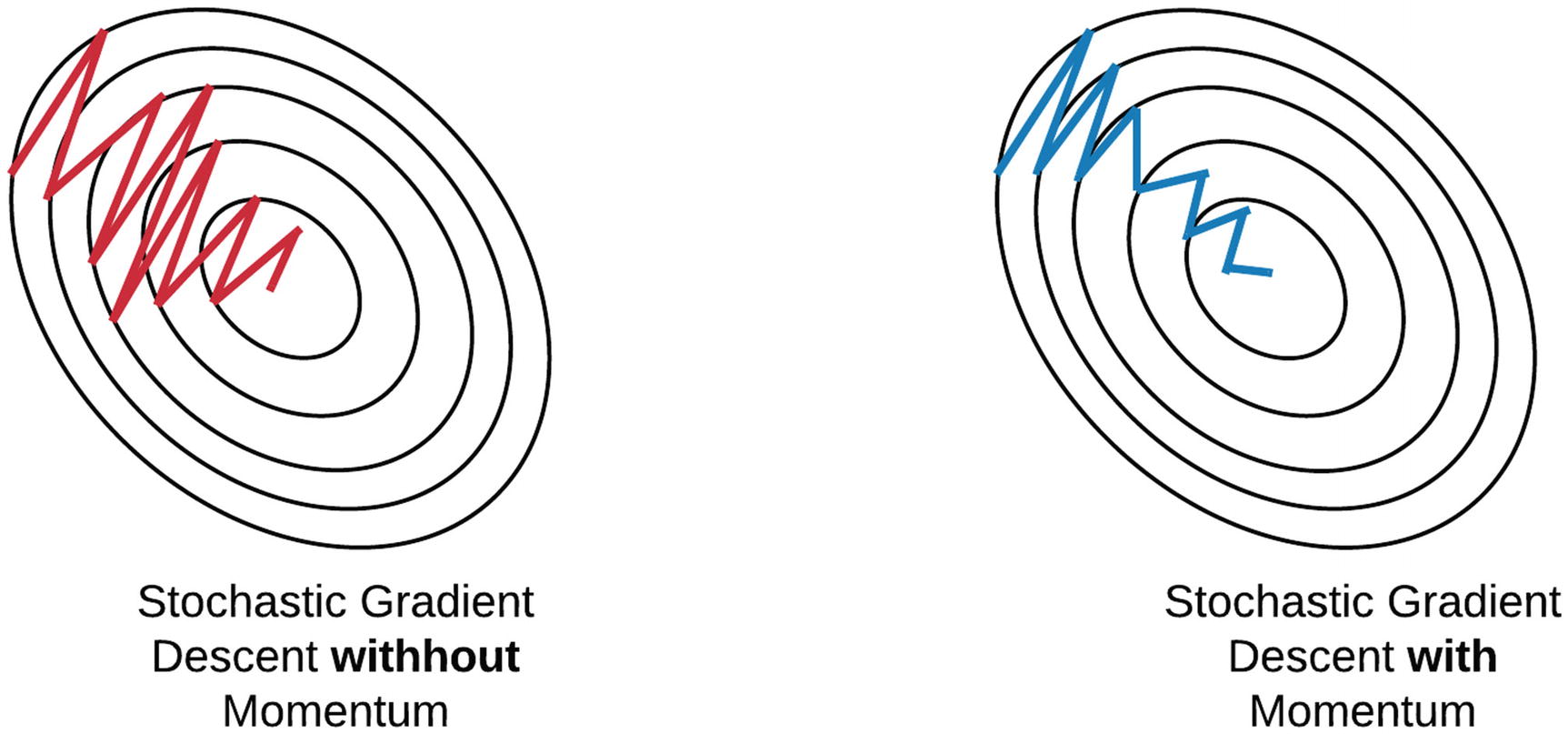

The Frontier of SGD and Its Variants in Machine Learning

IEEE. [13] Kingma D.

Closing the Generalization Gap of Adaptive Gradient Methods in

Adam: A method for stochastic optimization. International Conference on Learning Representations, 2015. [Kipf and Welling

Customized Nonlinear Bandits for Online Response Selection in

son sampling method that is applied to a polynomial feature ... Adam: A method for stochastic ... Matroid bandits: Fast combinatorial optimization.

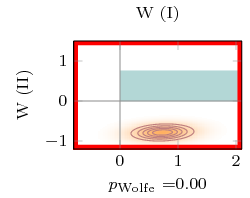

Trivializations for Gradient-Based Optimization on Manifolds

given by a Euclidean optimization algorithm, e.g. SGD

Variance Reduced Training with Stratified Sampling for Forecasting

Advances in Neural Information Processing Systems 26:315–323

INCORPORATING NESTEROV MOMENTUM INTO ADAM

ized optimization algorithm Adam (Kingma & Ba, 2014) has a provably better bound than gradient descent for convex, non-stochastic objectives–can be

Adaptive Subgradient Methods for Online Learning and Stochastic

Keywords: subgradient methods, adaptivity, online learning, stochastic ... considered efficient and robust methods for stochastic optimization, ... the ImageNet data set and was instrumental in helping to get our experiments running, and Adam

Hyper-parameter optimization tools comparison for Multiple Object

16 Oct 2018 · our study with a simple stochastic optimization algorithm as a baseline ... 1000 citation and additional websites dedicated to explain the tool ... Snoek, J., Larochelle, H., Adams, R. P.: Practical bayesian optimization of

Meta-Learning with Implicit Gradients - NIPS Proceedings - NeurIPS

ond, implicit MAML is agnostic to the inner optimization method used, as long as it can find an ... methods, Adam [28], or gradient descent with momentum can also be used without modification ... Adam: A method for stochastic optimization

Iterative Methods for Optimization CT Kelley - SIAM

Part II of this book covers some algorithms for noisy or global optimization or both. ... When that is the case we will cite some of the ... We also omit stochastic methods like the special-purpose methods discussed in [38] and [39], ... Linear and Nonlinear Conjugate Gradient Methods, L. M. Adams and J. L. Nazareth,

Unsupervised Neural Hidden Markov Models - Association for

5 Nov 2016 · a generative neural approach to HMMs and demonstrate how ... Adam: A method for stochastic optimization. The International Conference on

Input Convex Neural Networks - Proceedings of Machine Learning

structured prediction), this optimization problem is convex. This is similar in ... Comparison of approaches on BibTeX multi-label classification task ... Kingma, Diederik and Ba, Jimmy. Adam: A method for stochastic optimization. arXiv preprint

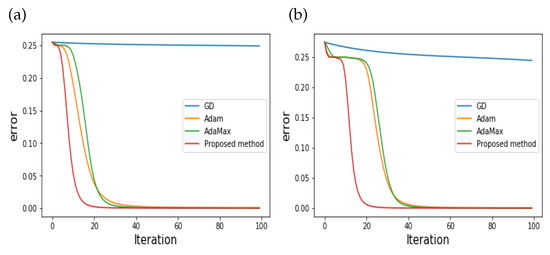

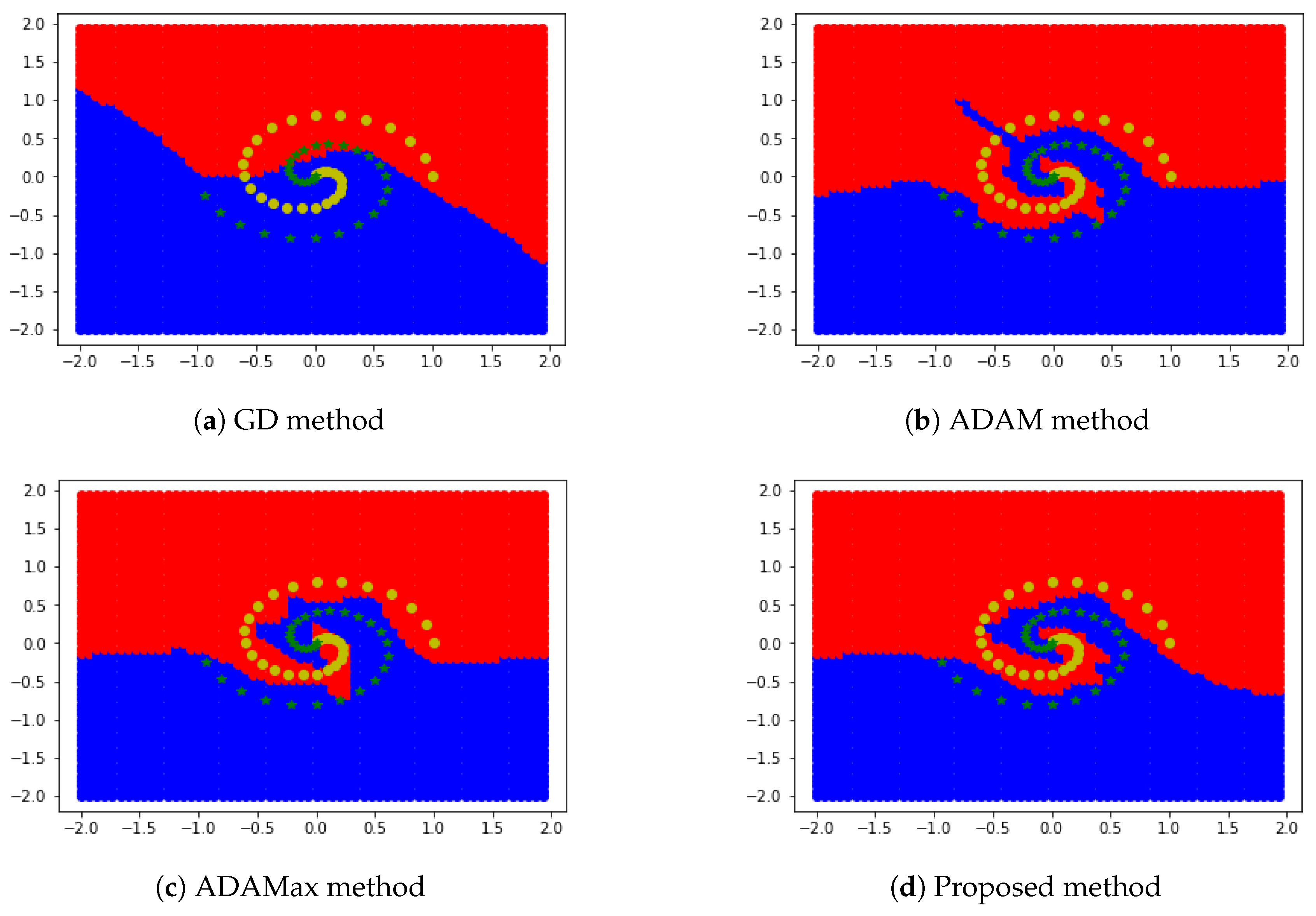

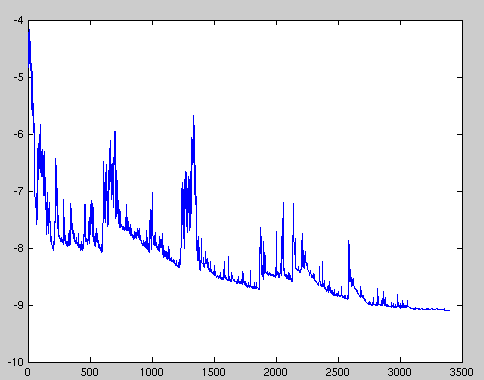

![Convergence and Dynamical Behavior of the Adam Algorithm for](https://www.mdpi.com/symmetry/symmetry-11-00942/article_deploy/html/images/symmetry-11-00942-g005.png)

![Convergence and Dynamical Behavior of the Adam Algorithm for](https://d3i71xaburhd42.cloudfront.net/f84c7a92190ea0702af6a1ee1744c7094472df65/26-Figure3-1.png)

![Convergence and Dynamical Behavior of the Adam Algorithm for](https://www.mdpi.com/symmetry/symmetry-11-00942/article_deploy/html/images/symmetry-11-00942-g001.png)