Adam: A Method for Stochastic Optimization (citation)

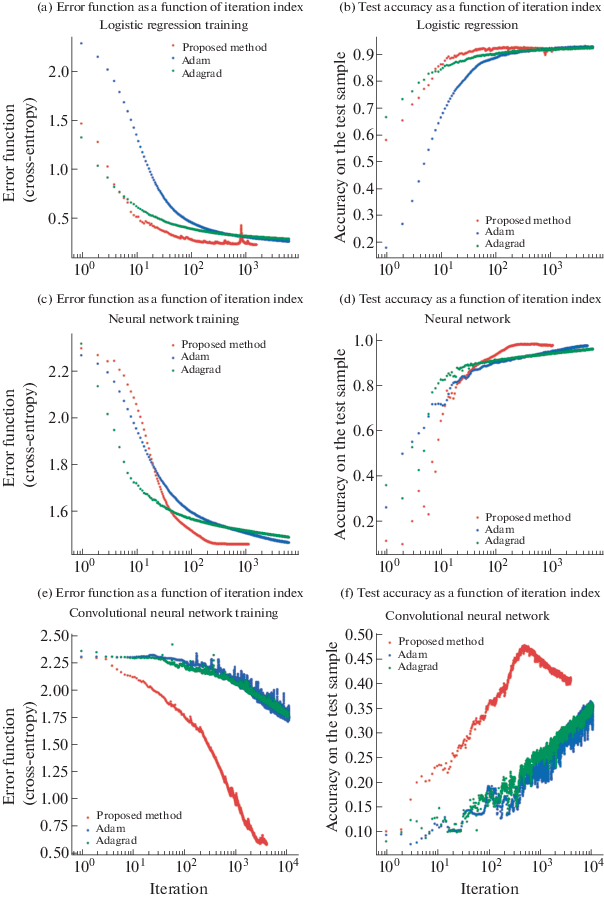

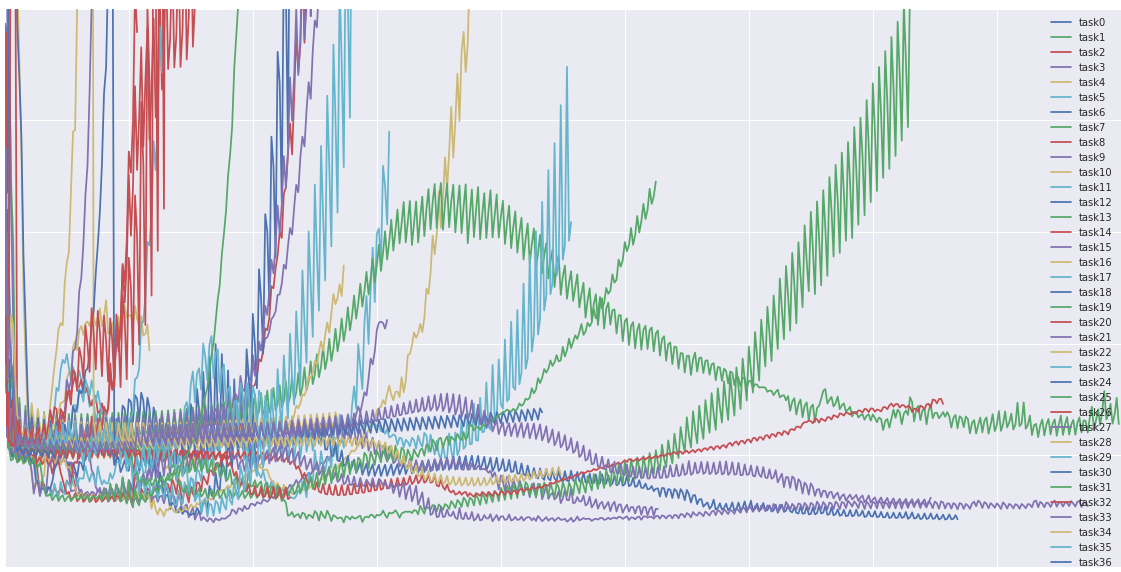

The Adam optimizer achieves the highest accuracy in a reasonable amount of time.

RMSprop achieves similar accuracy to Adam but with a much longer computation time.

Surprisingly, the SGD algorithm required the least training time and produced the best results.

Who invented the Adam optimizer?

Developed by Diederik P. Kingma and Jimmy Ba in 2014, Adam has become a go-to choice for many machine learning practitioners.

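What is epsilon in Adam?

In the Adam algorithm, ϵ is a small constant added to the denominator of the parameter update, $\theta_t \leftarrow \theta_{t-1} - \alpha \cdot \hat{m}_t / (\sqrt{\hat{v}_t} + \epsilon)$, so that the division stays defined and numerically stable when the second-moment estimate $\hat{v}_t$ is zero or very small; the paper's default is $\epsilon = 10^{-8}$. It is a tunable hyperparameter, not machine epsilon (the gap between 1.0 and the next representable floating-point number), although both are small values related to numerical precision.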
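To make the role of ϵ concrete, here is a minimal sketch of the final step of one Adam update for a single scalar parameter, assuming the paper's defaults α = 0.001 and ϵ = 1e-8; the function name `adam_update` is hypothetical. It shows that ϵ keeps the step finite when both moment estimates are zero, and that it is a different quantity from the float64 machine epsilon reported by Python.

```python
import math
import sys


def adam_update(m_hat: float, v_hat: float, alpha: float = 0.001, eps: float = 1e-8) -> float:
    """Return the Adam step alpha * m_hat / (sqrt(v_hat) + eps) for one scalar parameter."""
    # eps sits in the denominator so the division is defined even when v_hat == 0.
    return alpha * m_hat / (math.sqrt(v_hat) + eps)


# A zero gradient on the first step gives m_hat = v_hat = 0; without eps this would be 0/0.
print(adam_update(0.0, 0.0))  # 0.0

# For an ordinary gradient, eps is negligible next to sqrt(v_hat).
print(adam_update(0.5, 0.25))

# Machine epsilon for float64 is an unrelated, much smaller number (about 2.22e-16).
print(sys.float_info.epsilon)
```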


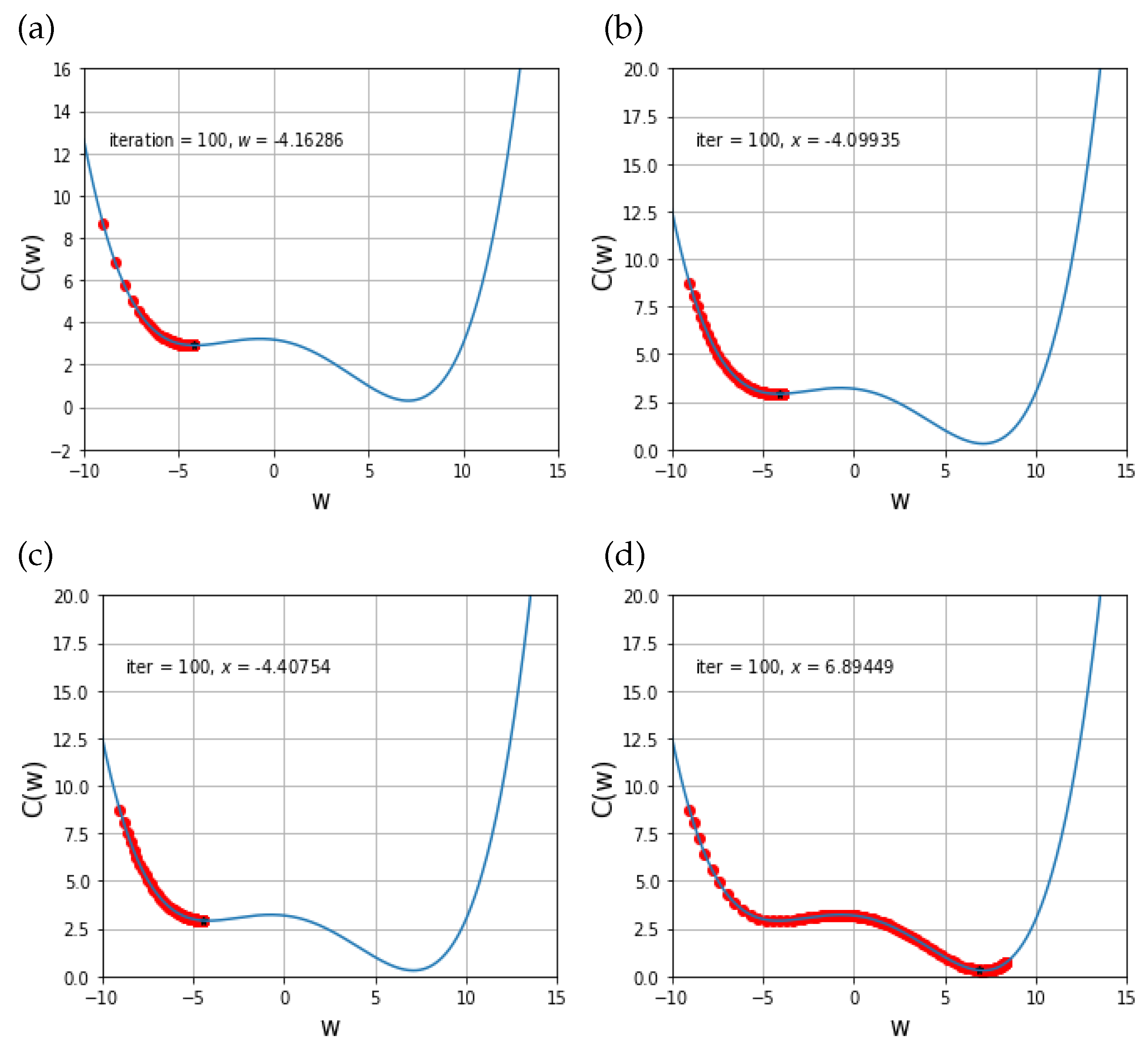

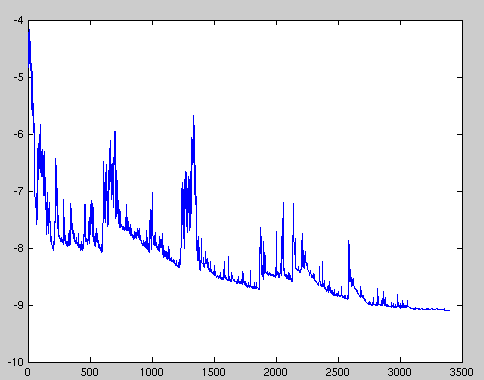

Adam: A Method for Stochastic Optimization

30 Jan 2017. We introduce Adam, an algorithm for first-order gradient-based optimization of stochastic objective functions…
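The abstract describes Adam only in words. As a rough illustration, here is a minimal pure-Python sketch of the update in Algorithm 1 of Kingma and Ba, using the paper's defaults β1 = 0.9, β2 = 0.999, ϵ = 1e-8. The toy quadratic objective, the learning rate α = 0.01 used in the usage line, and the helper names `adam` and `quad_grad` are assumptions chosen for illustration.

```python
import math
from typing import Callable, List


def adam(grad: Callable[[List[float]], List[float]], theta: List[float], steps: int,
         alpha: float = 0.001, beta1: float = 0.9, beta2: float = 0.999,
         eps: float = 1e-8) -> List[float]:
    """Run `steps` Adam updates on parameters `theta` using the gradient function `grad`."""
    theta = list(theta)
    m = [0.0] * len(theta)  # biased first-moment (mean) estimate
    v = [0.0] * len(theta)  # biased second raw-moment estimate
    for t in range(1, steps + 1):
        g = grad(theta)
        for i in range(len(theta)):
            m[i] = beta1 * m[i] + (1 - beta1) * g[i]
            v[i] = beta2 * v[i] + (1 - beta2) * g[i] ** 2
            m_hat = m[i] / (1 - beta1 ** t)  # correct the bias from initializing m at 0
            v_hat = v[i] / (1 - beta2 ** t)  # correct the bias from initializing v at 0
            theta[i] -= alpha * m_hat / (math.sqrt(v_hat) + eps)
    return theta


def quad_grad(p: List[float]) -> List[float]:
    """Gradient of f(x, y) = (x - 3)^2 + (y + 1)^2, which is minimized at (3, -1)."""
    return [2 * (p[0] - 3), 2 * (p[1] + 1)]


result = adam(quad_grad, [0.0, 0.0], steps=5000, alpha=0.01)
print(result)  # approximately [3.0, -1.0]
```

Because the step is roughly α · m̂/√v̂, each coordinate moves by at most about α per iteration regardless of the raw gradient scale, which is why the sketch needs a few hundred steps to travel from 0 to 3.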


Adaptive Subgradient Methods for Online Learning and Stochastic Optimization

…$\sum_{\tau=1}^{t} g_\tau g_\tau^\top$. Online learning and stochastic optimization are closely related and basically interchangeable (Cesa-Bianchi et al., 2004). In order …
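The sum of outer products g_τ g_τᵀ is AdaGrad's accumulated gradient matrix. Storing and taking roots of a d × d matrix is expensive, so the widely used diagonal variant keeps only its diagonal, the per-coordinate sum of squared gradients, and scales each coordinate's step by η/(δ + √sum). A minimal sketch under those assumptions; η = 0.5, δ = 1e-8, the test objective, and the names `adagrad_diag` and `ill_conditioned_grad` are illustrative choices, not values from the snippet.

```python
import math
from typing import Callable, List


def adagrad_diag(grad: Callable[[List[float]], List[float]], x: List[float], steps: int,
                 eta: float = 0.5, delta: float = 1e-8) -> List[float]:
    """Diagonal AdaGrad: per-coordinate steps scaled by accumulated squared gradients."""
    x = list(x)
    g_sq_sum = [0.0] * len(x)  # diagonal of G_t = sum over tau of g_tau g_tau^T
    for _ in range(steps):
        g = grad(x)
        for i in range(len(x)):
            g_sq_sum[i] += g[i] ** 2
            x[i] -= eta * g[i] / (delta + math.sqrt(g_sq_sum[i]))
    return x


def ill_conditioned_grad(p: List[float]) -> List[float]:
    """Gradient of f(x) = (x0 - 1)^2 + 10 (x1 + 2)^2; the coordinates differ in curvature."""
    return [2 * (p[0] - 1), 20 * (p[1] + 2)]


sol = adagrad_diag(ill_conditioned_grad, [0.0, 0.0], steps=500)
print(sol)  # approximately [1.0, -2.0]
```

The steeper coordinate x1 accumulates larger squared gradients, so its effective learning rate shrinks more; this per-coordinate scaling is the "adaptive" part that Adam-type methods also use.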


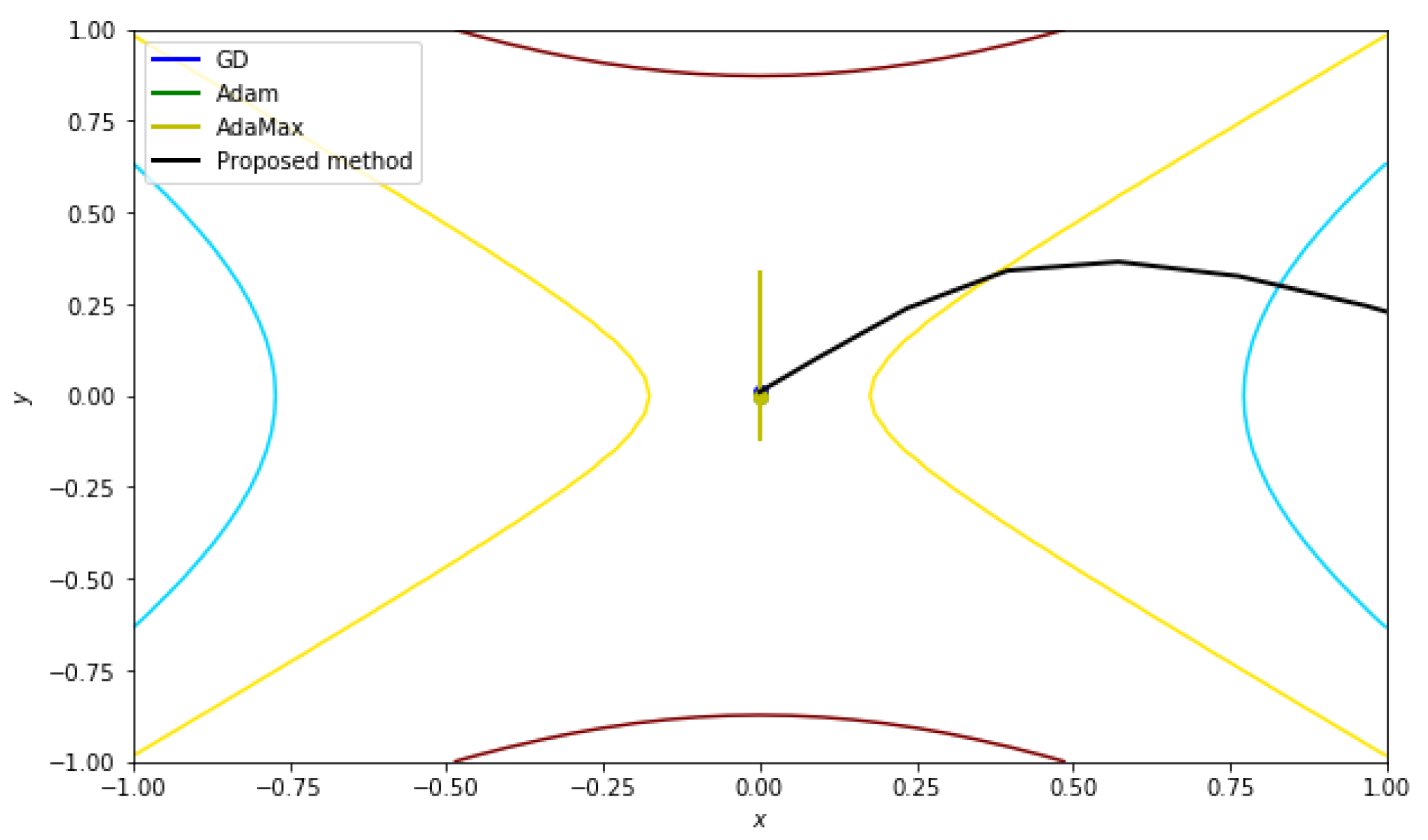

ACMo: Angle-Calibrated Moment Methods for Stochastic Optimization

…method (ACMo), a novel stochastic optimization method. It … state-of-the-art Adam-type optimizers…


Adaptive Methods for Nonconvex Optimization

We study nonconvex stochastic optimization problems of the form $\min_{x \in \mathbb{R}^d} f(x) := \mathbb{E}_{s \sim \mathbb{P}}[\ell(x, s)]$…
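In a problem of the form min f(x) = E_{s∼P}[ℓ(x, s)], an optimizer never sees f directly, only gradients of ℓ at sampled s. A minimal sketch with plain minibatch SGD, assuming a toy loss ℓ(x, s) = (x − s)² with s ∼ N(2, 1), so that f(x) = (x − 2)² + 1 is minimized at x = 2. The distribution, learning rate, batch size, seed, and the name `sgd_expected_loss` are all assumptions for illustration.

```python
import random


def sgd_expected_loss(x0: float, steps: int, lr: float = 0.05, batch: int = 32,
                      seed: int = 0) -> float:
    """Minimize f(x) = E_{s ~ N(2, 1)}[(x - s)^2] using only sampled minibatch gradients."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    x = x0
    for _ in range(steps):
        samples = [rng.gauss(2.0, 1.0) for _ in range(batch)]  # s_1, ..., s_B drawn from P
        # Average of d/dx (x - s)^2 = 2 (x - s): an unbiased estimate of f'(x).
        g = sum(2.0 * (x - s) for s in samples) / batch
        x -= lr * g
    return x


x_final = sgd_expected_loss(-3.0, steps=500)
print(x_final)  # near 2.0, up to sampling noise
```

With a constant learning rate the iterate keeps fluctuating around the minimizer because each gradient is only an estimate; adaptive methods such as Adam change how each step is scaled, not this basic sampling setup.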


Incorporating Nesterov Momentum into Adam

…Adaptive subgradient methods for online learning and stochastic optimization. The Journal of Machine Learning Research, 12:2121–2159.


Adeptus: Fast Stochastic Optimization Using Similarity

Popular first-order stochastic optimization methods for deep … momentum) or adaptive step-size methods (e.g., Adam/AdaMax, AdaBelief).
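AdaMax, named in the snippet next to Adam, is the infinity-norm variant given as Algorithm 2 of the Adam paper: it replaces the second-moment estimate with an exponentially weighted maximum of |g|. A minimal sketch using the paper's AdaMax defaults (α = 0.002, β1 = 0.9, β2 = 0.999); the small `guard` term is not in the paper and is an assumption added to avoid dividing by zero, and the larger α in the usage line, the quadratic objective, and the names are illustrative.

```python
from typing import Callable, List


def adamax(grad: Callable[[List[float]], List[float]], theta: List[float], steps: int,
           alpha: float = 0.002, beta1: float = 0.9, beta2: float = 0.999,
           guard: float = 1e-12) -> List[float]:
    """Run `steps` AdaMax updates on parameters `theta`."""
    theta = list(theta)
    m = [0.0] * len(theta)  # first-moment estimate, as in Adam
    u = [0.0] * len(theta)  # exponentially weighted infinity norm of past gradients
    for t in range(1, steps + 1):
        g = grad(theta)
        for i in range(len(theta)):
            m[i] = beta1 * m[i] + (1 - beta1) * g[i]
            u[i] = max(beta2 * u[i], abs(g[i]))
            # Only m is bias-corrected; u is not biased toward zero.
            # `guard` is an assumption (not in Algorithm 2) that prevents 0/0 if g is exactly 0.
            theta[i] -= (alpha / (1 - beta1 ** t)) * m[i] / (u[i] + guard)
    return theta


def quad_grad(p: List[float]) -> List[float]:
    """Gradient of f(x, y) = (x - 3)^2 + (y + 1)^2, which is minimized at (3, -1)."""
    return [2 * (p[0] - 3), 2 * (p[1] + 1)]


res_adamax = adamax(quad_grad, [0.0, 0.0], steps=3000, alpha=0.02)
print(res_adamax)  # approximately [3.0, -1.0]
```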


Optimization for quantitative decisions: a versatile multi-tasker or an…

2022. Adam (Kingma and Ba, 2014) …


Research on IPSO-RBF transformer fault diagnosis based on Adam

2022. …diagnosis based on Adam optimization. To cite this article: Ningning Shao et al 2022 J. Phys.: Conf. Ser. 2290 012117.


Effective neural network training with a new weighting - IEEE Xplore

Citation information: DOI … To test the performance of our proposed NWM-Adam optimization algorithm, we compare it … In these algorithms, stochastic gradient …


Memory Efficient Adaptive Optimization - NIPS Proceedings - NeurIPS

Adaptive gradient-based optimizers such as Adagrad and Adam are crucial for … method in the convex (online or stochastic) optimization setting, and demonstrate experimentally that … URL http://dl.acm.org/citation.cfm?id=646255.684566


A Proof of Local Convergence for the Adam - OPUS 4 – KOBV

…contribution of this paper is a method for the local convergence analysis in batch … Index Terms: non-convex optimization, Adam optimizer, con… Stochastic gradient descent (SGD) becomes an effective … Available: http://dl.acm.org/citation…


UC Santa Cruz - eScholarship.org

A study of the Exponentiated Gradient +/- algorithm for stochastic optimization … Adam: applies concepts of both momentum and adaptive weights … while the citation commonly used for it is to slide 29 of Lecture 6 from a lecture by Hinton.
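The slide-29, Lecture 6 reference is the citation usually given for RMSprop, the adaptive-weights idea that Adam builds on. As commonly described, RMSprop keeps a moving average of squared gradients and divides each gradient by its square root. A minimal sketch assuming the 0.9 decay usually quoted from that lecture; the learning rate, the ϵ term (added here to avoid division by zero), the toy objective, and the name `rmsprop` are assumptions.

```python
import math
from typing import Callable, List


def rmsprop(grad: Callable[[List[float]], List[float]], theta: List[float], steps: int,
            lr: float = 0.01, decay: float = 0.9, eps: float = 1e-8) -> List[float]:
    """Run `steps` RMSprop updates on parameters `theta`."""
    theta = list(theta)
    mean_square = [0.0] * len(theta)  # moving average of squared gradients
    for _ in range(steps):
        g = grad(theta)
        for i in range(len(theta)):
            mean_square[i] = decay * mean_square[i] + (1 - decay) * g[i] ** 2
            # Dividing by the RMS of recent gradients normalizes the step size per coordinate.
            theta[i] -= lr * g[i] / (math.sqrt(mean_square[i]) + eps)
    return theta


def quad_grad(p: List[float]) -> List[float]:
    """Gradient of f(x, y) = (x - 3)^2 + (y + 1)^2, which is minimized at (3, -1)."""
    return [2 * (p[0] - 3), 2 * (p[1] + 1)]


res_rms = rmsprop(quad_grad, [0.0, 0.0], steps=2000)
print(res_rms)  # close to [3.0, -1.0], with small oscillations of order lr
```

Unlike Adam, this update has no momentum term and no bias correction, so with a constant learning rate it tends to hover within roughly `lr` of the minimizer instead of settling exactly.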


Efficient Full-Matrix Adaptive Regularization - Proceedings of

…mostly applicable to convex optimization, and is thus less influential in deep … for an algorithm with adaptive regularization in a stochastic … By citation count (or GitHub search hits), … towards a full-matrix drop-in replacement for Adam; how…