adam a method for stochastic optimization iclr

What is the best setting for Adam optimizer?

Best Practices for Using Adam Optimization

Use Default Hyperparameters: In most cases, the default hyperparameters for Adam optimization (beta1=0.9, beta2=0.999, epsilon=1e-8) work well and do not need to be tuned. Adam (Adaptive Moment Estimation) is an optimization algorithm based on gradient descent.

The method is well suited to large problems involving a lot of data or many parameters.

It is computationally efficient and requires little memory.

What is Adam optimization technique?

The Adam optimizer, short for “Adaptive Moment Estimation,” is an iterative optimization algorithm used to minimize the loss function during the training of neural networks.

Adam can be looked at as a combination of RMSprop and Stochastic Gradient Descent with momentum.
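A minimal sketch of that combination in plain Python may help. It keeps a momentum-style running mean of the gradient (m) and an RMSprop-style running mean of the squared gradient (v), corrects both for their zero initialization, and scales the step by their ratio. The function names `adam_step` and `minimize_quadratic`, the toy quadratic loss, and the learning rates are assumptions chosen for illustration; only beta1, beta2 and epsilon come from the defaults quoted above.

```python
import math


def adam_step(params, grads, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adam update to a list of floats (illustrative helper).

    t is the 1-based step count; m and v are the running moment estimates.
    Returns the updated (params, m, v) as new lists.
    """
    new_params, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(params, grads, m, v):
        # Momentum part: exponential moving average of the gradient.
        m_i = beta1 * m_i + (1 - beta1) * g
        # RMSprop part: exponential moving average of the squared gradient.
        v_i = beta2 * v_i + (1 - beta2) * g * g
        # Bias correction, because m and v start at zero.
        m_hat = m_i / (1 - beta1 ** t)
        v_hat = v_i / (1 - beta2 ** t)
        new_params.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
        new_m.append(m_i)
        new_v.append(v_i)
    return new_params, new_m, new_v


def minimize_quadratic(steps=2000, lr=0.01):
    """Minimize the toy loss f(x, y) = (x - 3)^2 + (y + 1)^2 with Adam."""
    params = [0.0, 0.0]
    m = [0.0, 0.0]
    v = [0.0, 0.0]
    for t in range(1, steps + 1):
        # Analytic gradient of the toy loss.
        grads = [2 * (params[0] - 3), 2 * (params[1] + 1)]
        params, m, v = adam_step(params, grads, m, v, t, lr=lr)
    return params


if __name__ == "__main__":
    print(minimize_quadratic())
```

On the very first step the bias-corrected ratio is close to the sign of the gradient, so each parameter moves by roughly the learning rate regardless of how large its gradient is. That per-parameter scaling is the part inherited from RMSprop.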

Adam: A Method for Stochastic Optimization

30-01-2017 Published as a conference paper at ICLR 2015. Algorithm 1: Adam, our proposed algorithm for stochastic optimization. See section 2 for ...

Adaptive Gradient Methods And Beyond

Adam: A method for stochastic optimization. ICLR 2015. Page 9. Adaptive Methods: Pros. Faster training speed.

Lecture 4: Optimization

16-09-2019 Adam (almost): RMSProp + Momentum. Lecture 4 - 64. Kingma and Ba “Adam: A method for stochastic optimization”

Lecture 3.pptx

15-04-2022 Kingma and Ba “Adam: A method for stochastic optimization”

ACMo: Angle-Calibrated Moment Methods for Stochastic Optimization

method (ACMo) a novel stochastic optimization method. It state-of-the-art Adam-type optimizers

Lecture 7: Training Neural Networks Part 2

25-04-2017 Kingma and Ba “Adam: A method for stochastic optimization”

Q-Space Deep Learning for Alzheimer's Disease Diagnosis: Global

ICLR. Workshops 2015. [15] D. Kingma, J. Ba. Adam: a method for stochastic optimization. ICLR 2015 Diffusion MRI Processing Methods.

Lecture 8: Training Neural Networks Part 2

22-04-2021 Kingma and Ba “Adam: A method for stochastic optimization”

Lecture 8: Training Neural Networks Part 2

25-04-2019 Kingma and Ba “Adam: A method for stochastic optimization”

PbSGD: Powered Stochastic Gradient Descent Methods for - IJCAI

Adam: A method for stochastic optimization. Proc. of ICLR, 2015. [Krizhevsky and Hinton, 2009] Alex Krizhevsky and Geoffrey Hinton. Learning multiple

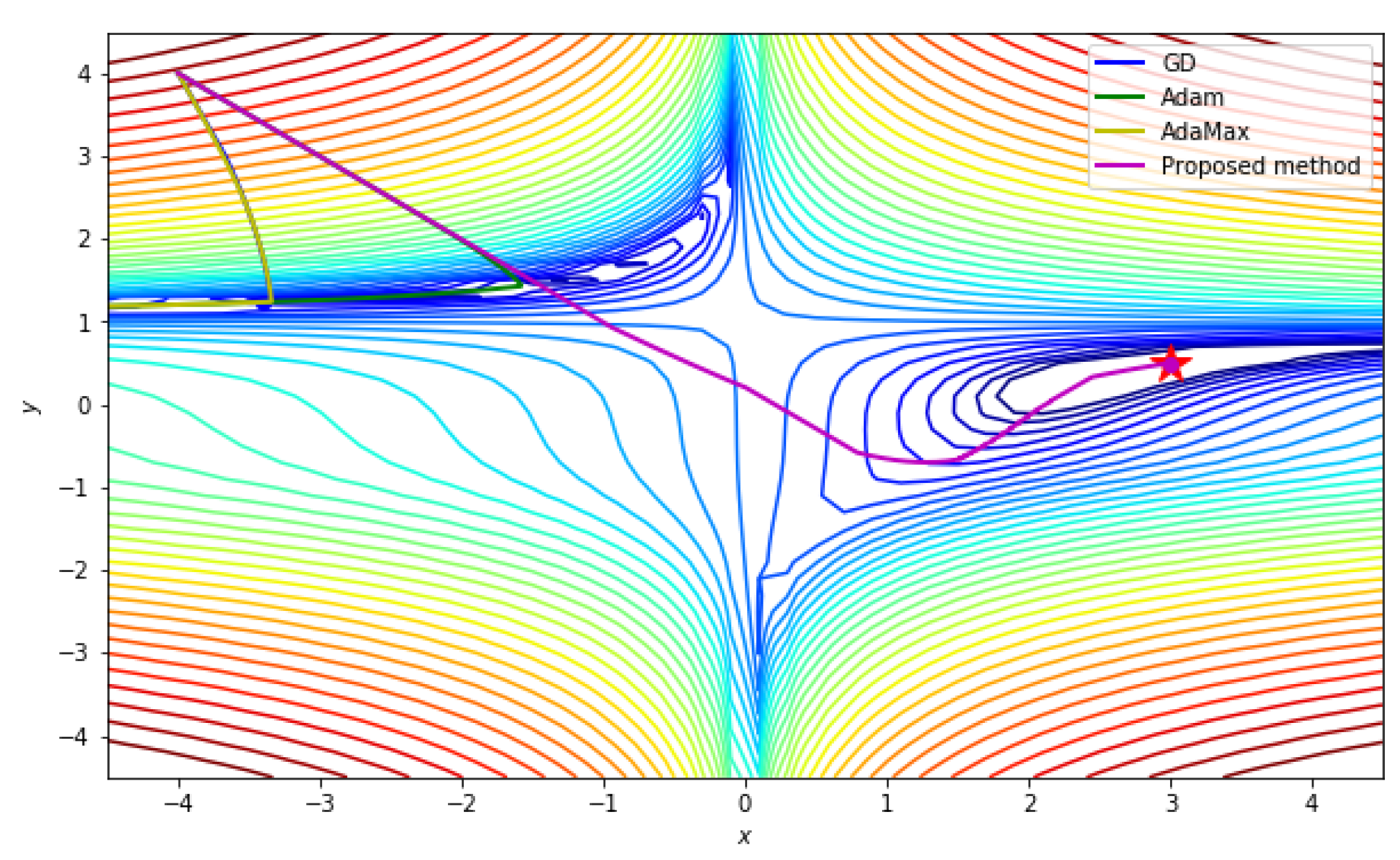

An Optimization Strategy Based on Hybrid Algorithm of Adam and

Therefore, this paper proposes a new variant of the ADAM algorithm (AMSGRAD), which not only solves the convergence Stochastic gradient descent (SGD) [2] has emerged as on Learning Representations (ICLR 2015), 2015 4 Duchi

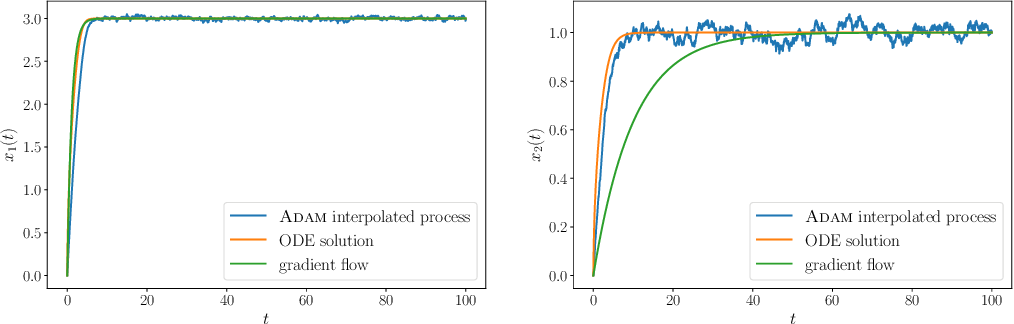

Dissecting Adam: The Sign, Magnitude and Variance of Stochastic

Dissecting Adam: The Sign, Magnitude and Variance of Stochastic Gradients. Lukas Balles, Philipp Hennig. gradient in each step of an iterative optimization algorithm becomes inefficient for Representations (ICLR), 2015 Krizhevsky, A

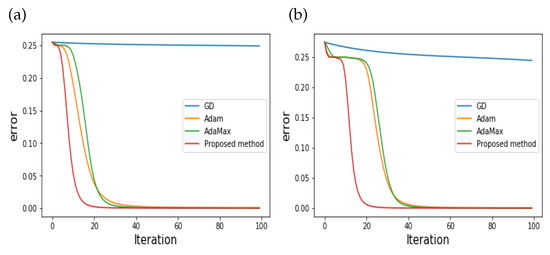

Improved Adam Optimizer for Deep Neural Networks - IEEE/ACM

Most practical optimization methods for deep neural networks (DNNs) are based on the stochastic gradient descent (SGD) algorithm. However, the learning rate

First-Order Optimization (Training) Algorithms in - CEUR-WS.org

The most widely used optimization method in deep learning is the first-order algo The stochastic average gradient (SAG) algorithm [12] is a difference decrease strate AdaMax algorithm [22] is a Adam's algorithm modification, wherein the ing Representations, ICLR 2014, Banff, AB, Canada, pp 1-13, April 14-16, 2014

Nesterov gradient - Purdue Engineering

Loss vs iterations of gradient-based optimization • Notice: *Diederik P. Kingma and Jimmy Ba, “Adam: A Method for Stochastic Optimization”, The 3rd International Conference for Learning Representations (ICLR), San Diego, 2015