Adam learning rate and batch size

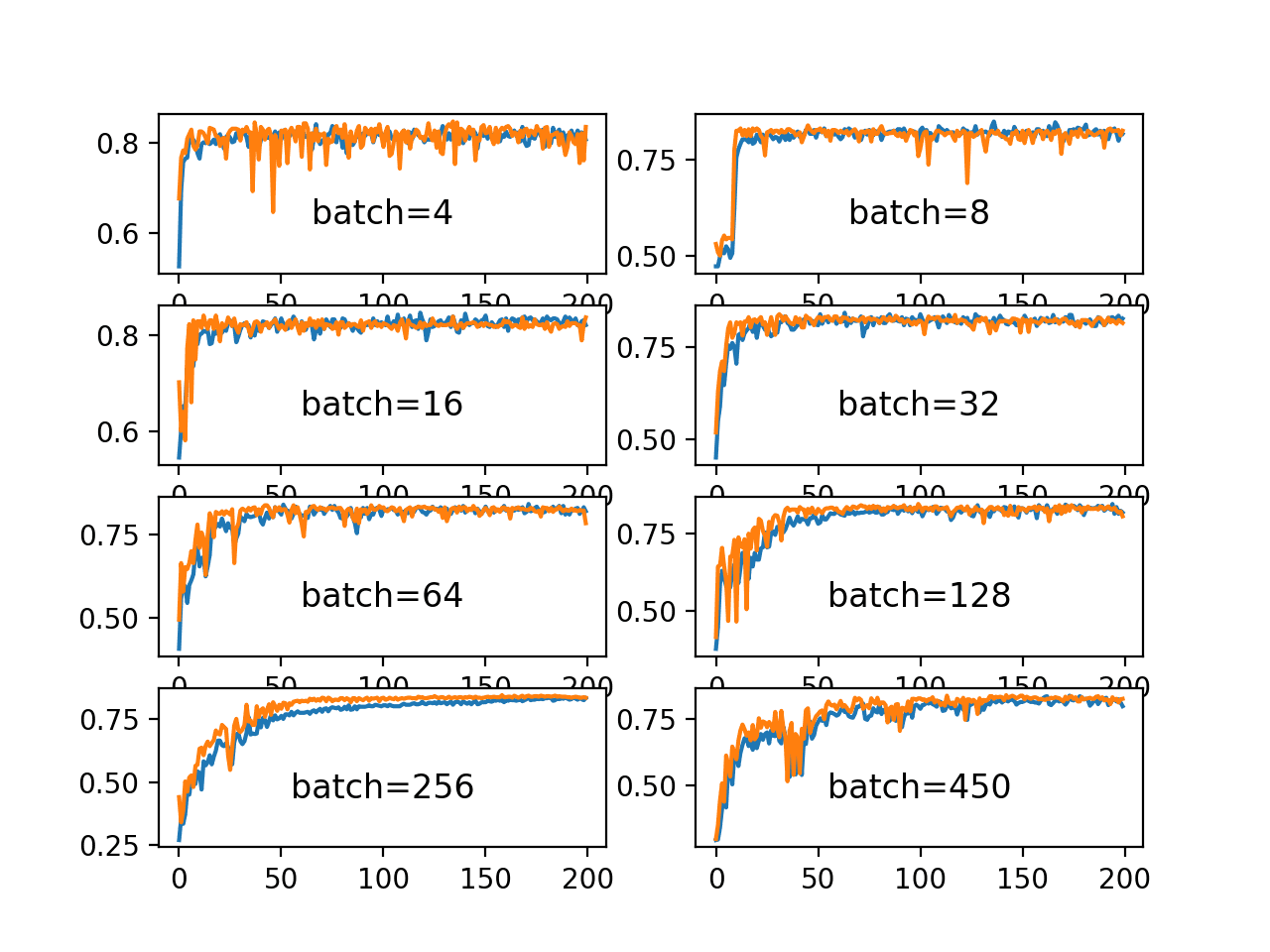

The number of training examples used in the estimate of the error gradient is a hyperparameter for the learning algorithm called the “batch size,” or simply the “batch.” A batch size of 32 means that 32 samples from the training dataset will be used to estimate the error gradient before the model weights are updated.
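A minimal sketch of what a batch size of 32 means in practice. It assumes a toy one-parameter linear model y ≈ w·x trained with a squared-error loss; the model, the data, and the names `batch_gradient` and `sgd_step` are illustrative, not from the source. The gradient is averaged over 32 randomly drawn samples, and the weight is updated once per batch.

```python
import random


def batch_gradient(w, batch):
    """Average gradient of the loss 0.5 * (w*x - y)**2 with respect to w over one batch."""
    return sum((w * x - y) * x for x, y in batch) / len(batch)


def sgd_step(w, dataset, batch_size=32, lr=0.1, rng=random):
    """Sample batch_size examples, estimate the gradient from them, and update w once."""
    batch = rng.sample(dataset, batch_size)
    return w - lr * batch_gradient(w, batch)


if __name__ == "__main__":
    rng = random.Random(0)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(1000)]
    data = [(x, 2.0 * x + rng.gauss(0.0, 0.01)) for x in xs]  # toy data from y = 2x + noise
    w = 0.0
    for _ in range(200):  # 200 updates, each based on a 32-sample gradient estimate
        w = sgd_step(w, data, batch_size=32, lr=0.5, rng=rng)
    print(f"learned w = {w:.3f}")  # should end up close to 2
```

A larger `batch_size` gives a less noisy gradient estimate per update but costs more computation per step.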

What is a good batch size for machine learning?

General guidelines for choosing the right batch size

A common practice is to start with a batch size of 32 (the default in frameworks such as Keras) and try other values if the results are not satisfactory.

How is learning rate related to batch size?

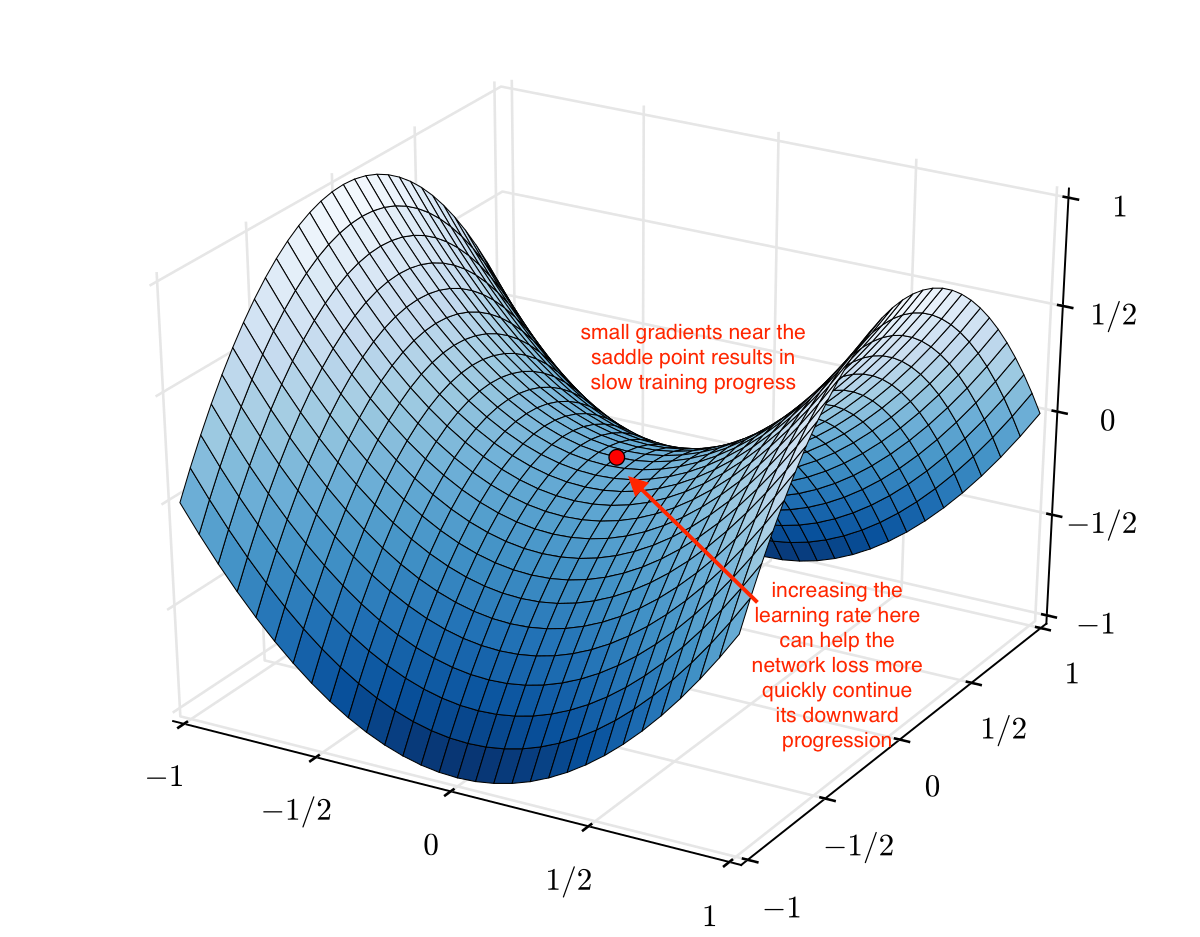

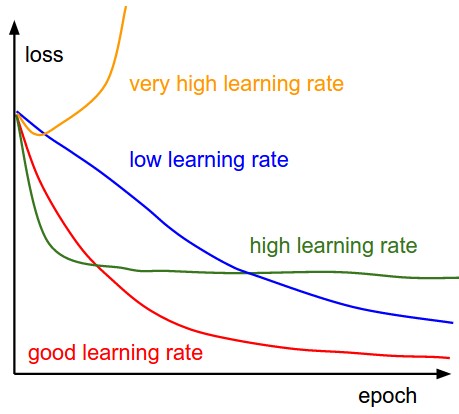

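The two are coupled. The noise in each update grows with the learning rate and shrinks with the batch size, so a larger batch size usually tolerates, and often needs, a larger learning rate, while a smaller batch size usually calls for a smaller one. Common heuristics scale the learning rate linearly with the batch size for SGD and by the square root of the batch size for adaptive optimizers such as Adam.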
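Two common heuristics for carrying a tuned learning rate over to a new batch size are linear scaling, often used with SGD, and square-root scaling, the rule derived for Adam-style optimizers in "Learning Rates as a Function of Batch Size" listed below. A minimal sketch of both; the function name `scale_learning_rate` and the reference batch size of 32 are illustrative assumptions, and the result is a starting point for tuning, not a guaranteed optimum.

```python
import math


def scale_learning_rate(base_lr, base_batch, new_batch, rule="linear"):
    """Rescale a learning rate tuned at base_batch for use at new_batch.

    rule="linear": lr grows in proportion to the batch-size ratio.
    rule="sqrt":   lr grows with the square root of the batch-size ratio.
    """
    if base_batch <= 0 or new_batch <= 0:
        raise ValueError("batch sizes must be positive")
    ratio = new_batch / base_batch
    if rule == "linear":
        return base_lr * ratio
    if rule == "sqrt":
        return base_lr * math.sqrt(ratio)
    raise ValueError(f"unknown rule: {rule!r}")


if __name__ == "__main__":
    # Adam's default lr of 0.001, assumed tuned at batch size 32, moved to batch size 128.
    print(scale_learning_rate(0.001, 32, 128, "linear"))  # 0.004
    print(scale_learning_rate(0.001, 32, 128, "sqrt"))    # 0.002
```

Very large scaled learning rates can still diverge, so in practice the scaled value is usually checked with a short run before committing to it.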

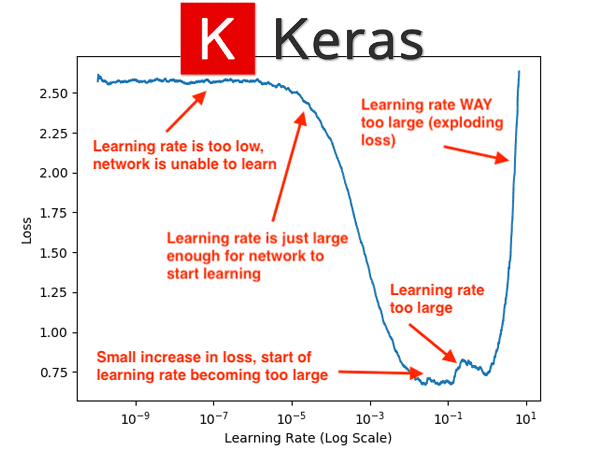

What is a good learning rate for Adam?

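Adam's default learning rate is 0.001, the value recommended by Kingma and Ba and used as the default in common frameworks such as PyTorch and Keras. A well-chosen learning rate makes each update large enough to make steady progress but small enough not to overshoot a minimum.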
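To show where the learning rate enters, here is a minimal single-parameter sketch of the Adam update rule from Kingma and Ba with the usual defaults (lr = 0.001, β1 = 0.9, β2 = 0.999, ε = 1e-8). The function name `adam_step` and the quadratic objective are illustrative assumptions. Because the update divides the momentum term by a running root-mean-square of the gradient, the step size is governed mainly by `lr` rather than by the raw gradient magnitude; the very first step moves the parameter by almost exactly `lr`.

```python
import math


def adam_step(theta, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adam update to a scalar parameter; t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad          # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad   # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)                # bias correction for m starting at 0
    v_hat = v / (1 - beta2 ** t)                # bias correction for v starting at 0
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v


if __name__ == "__main__":
    # Minimize f(theta) = (theta - 3)**2, whose gradient is 2 * (theta - 3).
    theta, m, v = 0.0, 0.0, 0.0
    for t in range(1, 101):
        theta, m, v = adam_step(theta, 2 * (theta - 3), m, v, t)
    # With the default lr, 100 steps move theta by just under 100 * 0.001 = 0.1.
    print(f"theta after 100 steps: {theta:.4f}")
```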

In practice, learning rates between 0.0001 and 0.01 work well in most cases, which makes this range a reasonable starting point for tuning.
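Because this range spans two orders of magnitude, it is usually searched on a logarithmic scale; sampling uniformly on a linear scale would put about 90% of the candidates between 0.001 and 0.01. A minimal random-search sketch, assuming the candidates are then passed to whatever training routine you use (the name `sample_learning_rates` is hypothetical):

```python
import math
import random


def sample_learning_rates(n, low=1e-4, high=1e-2, seed=None):
    """Draw n learning rates log-uniformly from [low, high]."""
    rng = random.Random(seed)
    lo, hi = math.log10(low), math.log10(high)
    # Uniform in log10 space, so each decade gets the same share of samples.
    return [10 ** rng.uniform(lo, hi) for _ in range(n)]


if __name__ == "__main__":
    for lr in sample_learning_rates(5, seed=42):
        print(f"{lr:.6f}")
```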

Don't Decay the Learning Rate, Increase the Batch Size

... the batch size during training. This procedure is successful for stochastic gradient descent (SGD), SGD with momentum ...
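The approach named in the title "Don't Decay the Learning Rate, Increase the Batch Size" keeps the learning rate fixed and, wherever a step schedule would divide the learning rate by some factor, multiplies the batch size by that factor instead. Because the gradient noise scale is roughly proportional to learning rate divided by batch size (see the Transformer training-tips excerpt below), both schedules shrink it by the same amount. A minimal side-by-side sketch; the milestones, factor, base values, and the name `step_schedules` are illustrative assumptions, not settings taken from the paper.

```python
import math


def step_schedules(epoch, base_lr=0.1, base_batch=128, milestones=(60, 120, 160), factor=5):
    """Return ((lr, batch) for step decay, (lr, batch) for batch growth) at a given epoch.

    k counts the milestones already passed. Step decay divides lr by factor**k;
    the alternative keeps lr fixed and multiplies the batch size by factor**k.
    """
    k = sum(1 for m in milestones if epoch >= m)
    decay = (base_lr / factor ** k, base_batch)
    increase = (base_lr, base_batch * factor ** k)
    return decay, increase


if __name__ == "__main__":
    for epoch in (0, 60, 120, 160):
        (lr_d, b_d), (lr_i, b_i) = step_schedules(epoch)
        # The ratio lr / batch matches between the two schedules at every epoch.
        print(epoch, (lr_d, b_d), (lr_i, b_i), math.isclose(lr_d / b_d, lr_i / b_i))
```

A real schedule would also need to cap the batch size at what memory and the dataset allow; that detail is omitted here.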

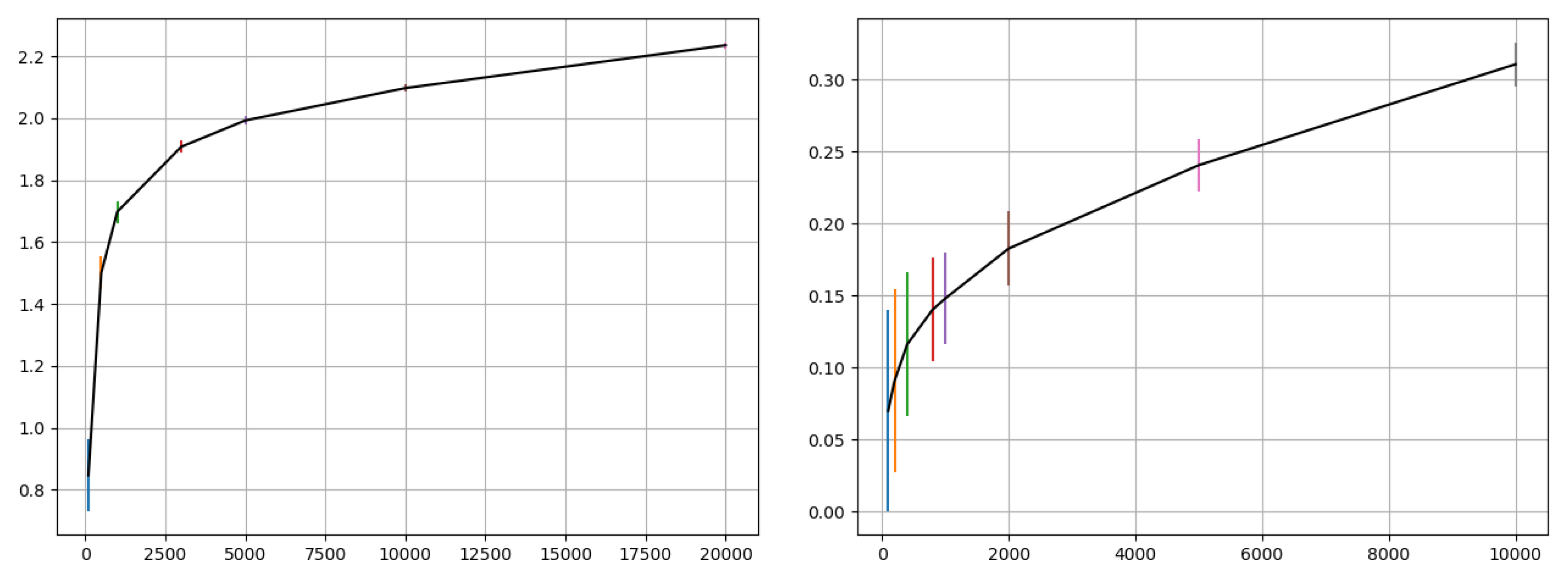

An Empirical Model of Large-Batch Training

14 Dec. 2018 ... momentum ... Adam ...

Analyzing Performance of Deep Learning Techniques for Web

... learning rate, number of epochs and batch size as they all have different range of values. ... Adam ... Learning Rate ... 0.1 ...

Online Batch Selection for Faster Training of Neural Networks

25 Apr. 2016 ... only its diagonal to achieve adaptive learning rates. ... (figure: Online Batch Selection in Adam, Batch Size 64; training cost function vs. epochs)

Optimized Deep Learning (Deep Learning Optimisé) - Jean Zay

Gradient descent optimizer ... 2. SGD optimizer ... The large-batch problem ... Learning rate schedulers ... Momentum ...

Which Algorithmic Choices Matter at Which Batch Sizes? Insights

... optimal learning rates and large batch training, making it a useful tool to ... Through large-scale experiments with Adam [Kingma and Ba ...

Learning Rates as a Function of Batch Size: A Random Matrix

... (such as the Adam default settings), we derive and verify the efficacy of a square-root learning rate scaling with batch size. Specifically, we mean that we ...

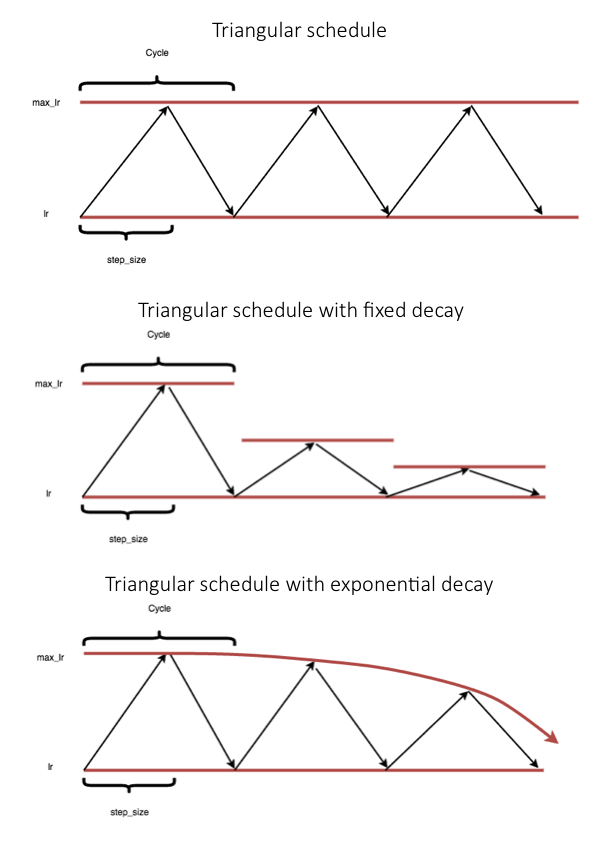

Applying Cyclical Learning Rate to Neural Machine Translation

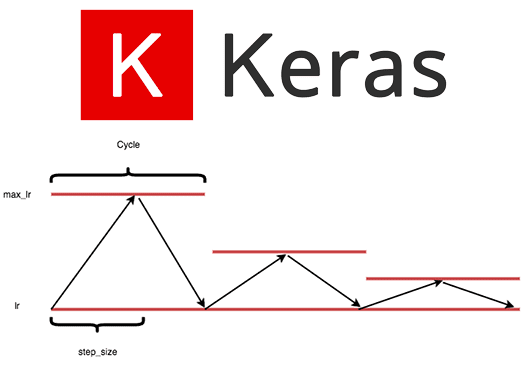

6 Apr. 2020 ... issues such as learning rate policy and batch size. It is often assumed that using the mainstream optimizer (Adam) with the default ...

Training Deep Networks with Stochastic Gradient Normalized by

6 Feb. 2020 ... is robust to the choice of learning rate and weight initialization, (2) works well in a ... (2015) showed that large batch size is beneficial ...

Training Tips for the Transformer Model - Martin Popel, Ondřej Bojar

...proved training regarding batch size, learning rate ...

Which Algorithmic Choices Matter at Which Batch Sizes? - NIPS

... optimal learning rates and large batch training, making it a useful tool to generate ... Through large-scale experiments with Adam [Kingma and Ba, 2014] and ...

Analyzing Performance of Deep Learning - ScienceDirect.com

... learning rate, number of epochs and batch size as they all have different range of values ... Nesterov accelerated gradient, Adagrad, RMSProp, AdaDelta, Adam ...

Train Deep Neural Networks with Small Batch Sizes - IJCAI

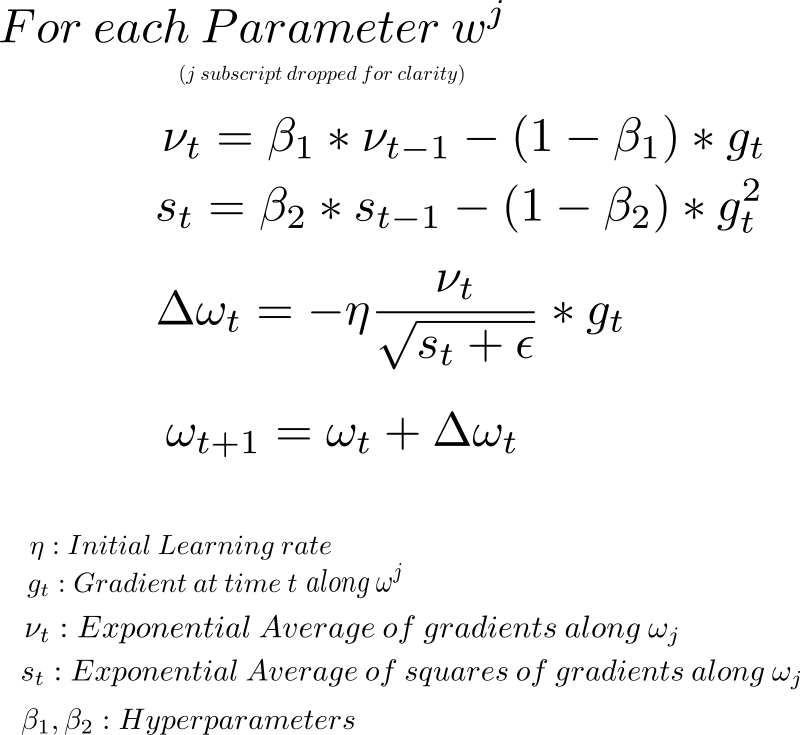

For TRAdam and Adam, β1 = 0.9, β2 = 0.999, the learning rate is initially set to 0.001 and decayed to 0.0001, 0.00001 at epoch 100 and 150, respectively. For ...
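The schedule in this excerpt is a standard step decay: start at 0.001 and multiply by 0.1 at epoch 100 and again at epoch 150. A minimal sketch as a plain function; the name `step_decay_lr` and the 0-based epoch convention are assumptions. In PyTorch the same shape is commonly expressed with `torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[100, 150], gamma=0.1)`.

```python
def step_decay_lr(epoch, base_lr=1e-3, milestones=(100, 150), gamma=0.1):
    """Learning rate for a 0-based epoch: base_lr multiplied by gamma once per milestone passed."""
    passed = sum(1 for m in milestones if epoch >= m)
    return base_lr * gamma ** passed


if __name__ == "__main__":
    for epoch in (0, 99, 100, 149, 150, 199):
        print(epoch, f"{step_decay_lr(epoch):.0e}")
```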

Training Tips for the Transformer Model

... (2017), the gradient noise scale, i.e. scale of random fluctuations in the SGD (or Adam etc.) dynamics, is proportional to learning rate divided by the batch size (cf. ...

Mini-batch gradient descent - CS230 Deep Learning

... (figure: cost vs. iterations for batch gradient descent; cost vs. mini-batch # (t) for mini-batch gradient descent) ... Choosing your mini-batch size ... Adam optimization algorithm ...

Advanced Training Techniques

... Gradient descent; Momentum; RMSProp; Adam; Distributed SGD; Gradient ... Crank up learning rate when increasing batch size. Trick: use ...

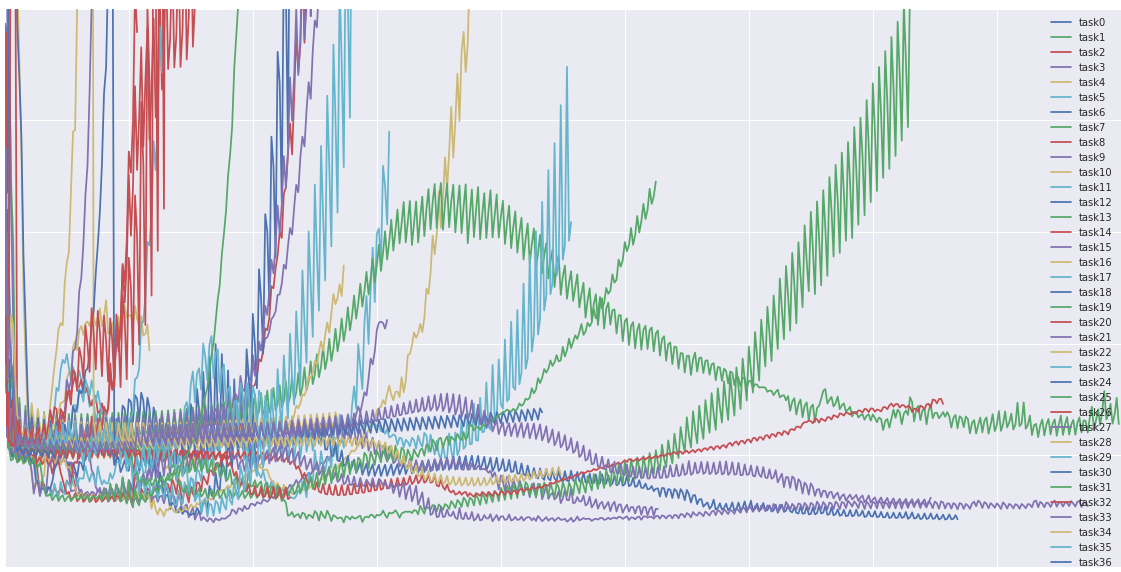

Online Batch Selection for Faster Training of Neural

... dataset suggest that selecting batches speeds up both AdaDelta and Adam by a ... (figure: Online Batch Selection in Adam, Batch Size 64; training cost vs. epochs)