increase batch size learning rate

|

Don't Decay the Learning Rate, Increase the Batch Size

We can further reduce the number of parameter updates by increasing the learning rate ε and scaling the batch size B ∝ ε. Finally, one can increase the mo- ... |

|

AdaBatch: Adaptive Batch Sizes for Training Deep Neural Networks

14 Feb. 2018 The aforementioned study shows that the batch size increase in lieu of learning rate decay works on several optimization algorithms: SGD SGD ... |

|

On the Computational Inefficiency of Large Batch Sizes for

30 Nov. 2018 Increasing the mini-batch size for stochastic gradient descent offers ... up the learning rate to compensate for an increased batch size ... |

|

An Empirical Model of Large-Batch Training

14 Dec. 2018 The optimal learning rate initially scales linearly as we increase the batch size, leveling off in the way predicted by Equation 2.7. Right: For ... |

|

The Limit of the Batch Size

15 June 2020 batch size is increased beyond a certain boundary which we refer to ... If we use a batch size B (B<BL |

|

Revisiting Small Batch Training for Deep Neural Networks

20 Apr. 2018 datasets show that increasing the mini-batch size progressively reduces the range of learning rates that provide stable convergence and ... |

|

Don't Decay the Learning Rate, Increase the Batch Size

24 Feb. 2018 We can further reduce the number of parameter updates by increasing the learning rate ε and scaling the batch size B ∝ ε. |
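
The coupling between learning rate and batch size in the snippet above can be illustrated with a small sketch. It assumes the commonly cited approximation for the SGD noise scale, g ≈ εN / (B(1 − m)) for B much smaller than the dataset size N; that formula is not stated in the snippet itself, and the function name and the numbers below are hypothetical.

```python
def sgd_noise_scale(lr: float, batch_size: int, dataset_size: int,
                    momentum: float = 0.0) -> float:
    """Approximate SGD noise scale g ~ lr * N / (B * (1 - m)).

    Assumption: this approximation only holds when batch_size << dataset_size.
    """
    return lr * dataset_size / (batch_size * (1.0 - momentum))


N = 50_000  # hypothetical dataset size

# Baseline configuration.
base = sgd_noise_scale(lr=0.1, batch_size=128, dataset_size=N, momentum=0.9)

# Multiply the learning rate by 5 and the batch size by 5 (B proportional to lr).
scaled = sgd_noise_scale(lr=0.5, batch_size=640, dataset_size=N, momentum=0.9)

# Under the assumed approximation, both configurations have the same noise
# scale, while the larger batch needs 5x fewer parameter updates per epoch.
print(base, scaled)
```

Under this assumption, raising the learning rate and the batch size by the same factor leaves the noise scale unchanged, which is one way to read "scaling the batch size B ∝ ε".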

|

ArXiv:2108.06084v3 [cs.LG] 1 Feb 2022

1 Feb. 2022 To reduce their expensive training cost practitioners attempt to increase the batch sizes and learning rates. However |

|

ADABATCH: ADAPTIVE BATCH SIZES FOR TRAINING DEEP

Thus increasing the batch size can mimic learning rate decay |

|

Control Batch Size and Learning Rate to Generalize Well

This paper reports both theoretical and empirical evidence of a training strategy that we should control the ratio of batch size to learning rate not too large |

|

arXiv:1711.00489v2 [cs.LG] 24 Feb 2018

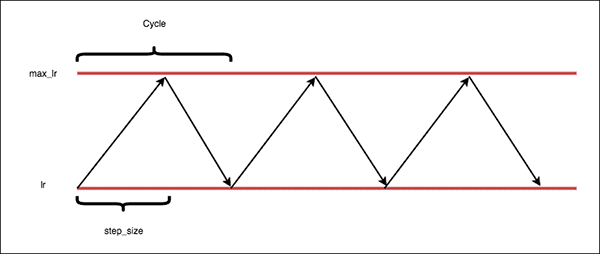

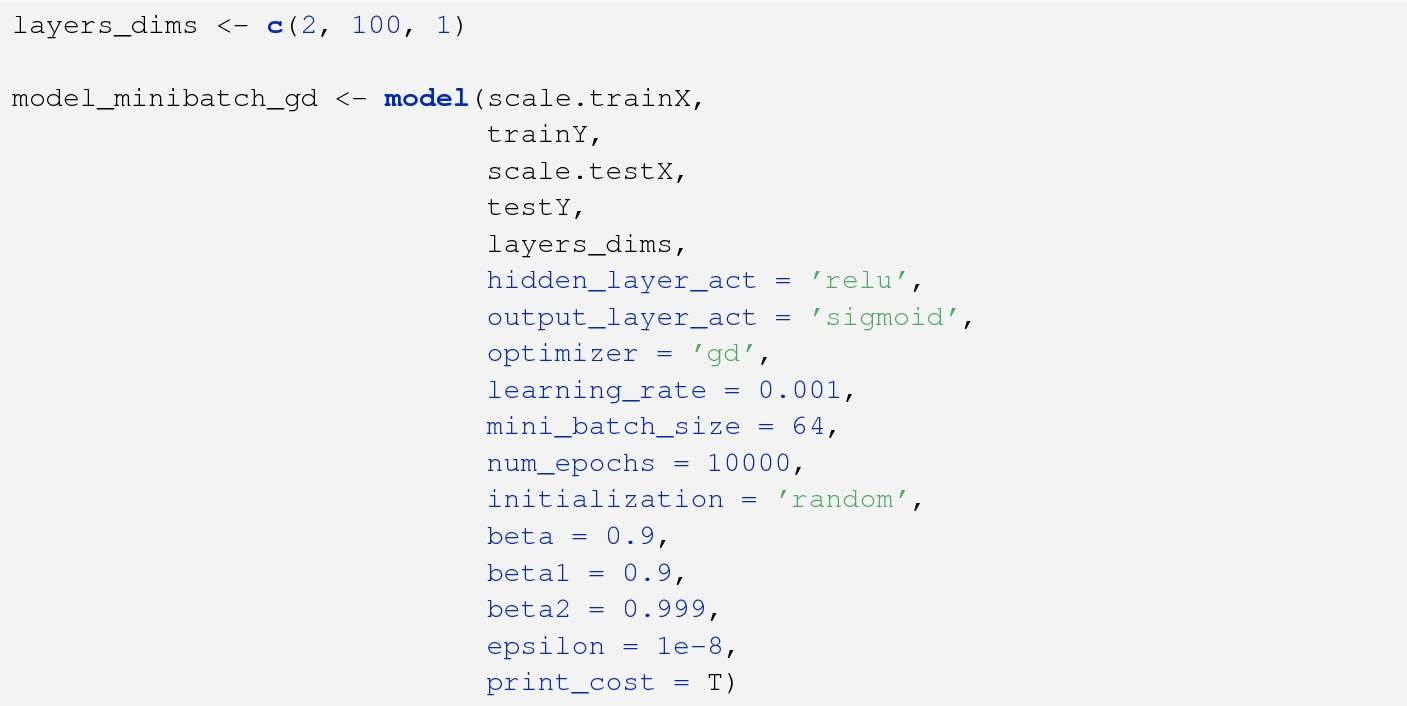

“Increasing batch size” also uses a learning rate of 0.1, initial batch size of 128 and momentum coefficient of 0.9, but the batch size increases by a factor of 5 at each step. These schedules are identical to “Decaying learning rate” and “Increasing batch size” in section 5.1 above |
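
A minimal sketch of the two schedules described in this snippet may help. The initial learning rate (0.1), initial batch size (128) and factor of 5 come from the snippet; the epoch milestones, the function names, and the assumption that the "Decaying learning rate" schedule divides the learning rate by the same factor of 5 are hypothetical.

```python
# Hypothetical epochs at which each schedule takes a "step".
MILESTONES = (60, 120, 160)


def steps_taken(epoch: int, milestones=MILESTONES) -> int:
    """Number of schedule steps that have happened by the given epoch."""
    return sum(1 for m in milestones if epoch >= m)


def increasing_batch_size(epoch: int, initial_batch_size: int = 128,
                          factor: int = 5) -> int:
    """Batch size grows by `factor` at each step; learning rate stays fixed."""
    return initial_batch_size * factor ** steps_taken(epoch)


def decaying_learning_rate(epoch: int, initial_lr: float = 0.1,
                           factor: int = 5) -> float:
    """Learning rate shrinks by `factor` at each step; batch size stays fixed.

    Assumption: the decay factor mirrors the batch-size growth factor.
    """
    return initial_lr / factor ** steps_taken(epoch)


for epoch in (0, 60, 120, 160):
    print(epoch, increasing_batch_size(epoch), decaying_learning_rate(epoch))
```

In a real training loop the batch-size schedule would typically be applied by rebuilding the data loader at each milestone, since most loaders fix the batch size at construction time.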

|

Exploit Your Hyperparameters: Batch Size and Learning Rate as

In order to improve large batch training performance for a given training computation cost, it has been proposed to scale the learning rate linearly with the batch size (Krizhevsky, 2014; Chen et al., 2016; Bottou et al., 2016; Goyal et al., 2017) |
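
The linear scaling rule mentioned in this snippet can be sketched as follows. The function name and the reference values are hypothetical; the snippet only states that the learning rate is scaled linearly with the batch size.

```python
def linear_scaled_lr(base_lr: float, base_batch_size: int,
                     new_batch_size: int) -> float:
    """Scale the learning rate by the same ratio as the batch size."""
    if base_batch_size <= 0 or new_batch_size <= 0:
        raise ValueError("batch sizes must be positive")
    return base_lr * new_batch_size / base_batch_size


# Hypothetical reference setting: lr 0.1 tuned at batch size 256.
lr_at_8192 = linear_scaled_lr(base_lr=0.1, base_batch_size=256,
                              new_batch_size=8192)
print(lr_at_8192)  # 32x larger batch, so a 32x larger learning rate
```

Several of the other results on this page report that the rule stops holding at large batch sizes, where the optimal learning rate levels off, so the scaled value is best treated as a starting point for tuning.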

|

Coupling Adaptive Batch Sizes with Learning Rates - arXiv.org

constant batch size, stability and convergence is thus often enforced by means of a (manually tuned) decreasing learning rate schedule. We propose a practical method for dynamic batch size adaptation. It estimates the variance of the stochastic gradients and adapts the batch size to decrease the variance proportion- |

|

Control Batch Size and Learning Rate to Generalize Well

correlation with the ratio of batch size to learning rate. This correlation builds the theoretical foundation of the training strategy. Furthermore, we conduct a large-scale experiment to verify the correlation and training strategy. We trained 1600 models based on architectures ResNet-110 and VGG-19 with datasets CIFAR-10 |

|

arXiv:1708.03888v3 [cs.CV] 13 Sep 2017

Increasing the global batch while keeping the same number of epochs means that you have fewer iterations to update weights. The straight-forward way to compensate for a smaller number of iterations is to do larger steps by increasing the learning rate (LR). For example, Krizhevsky (2014) suggests to linearly scale up LR with batch size. However |

Should we increase learning rate or reduce batch size?

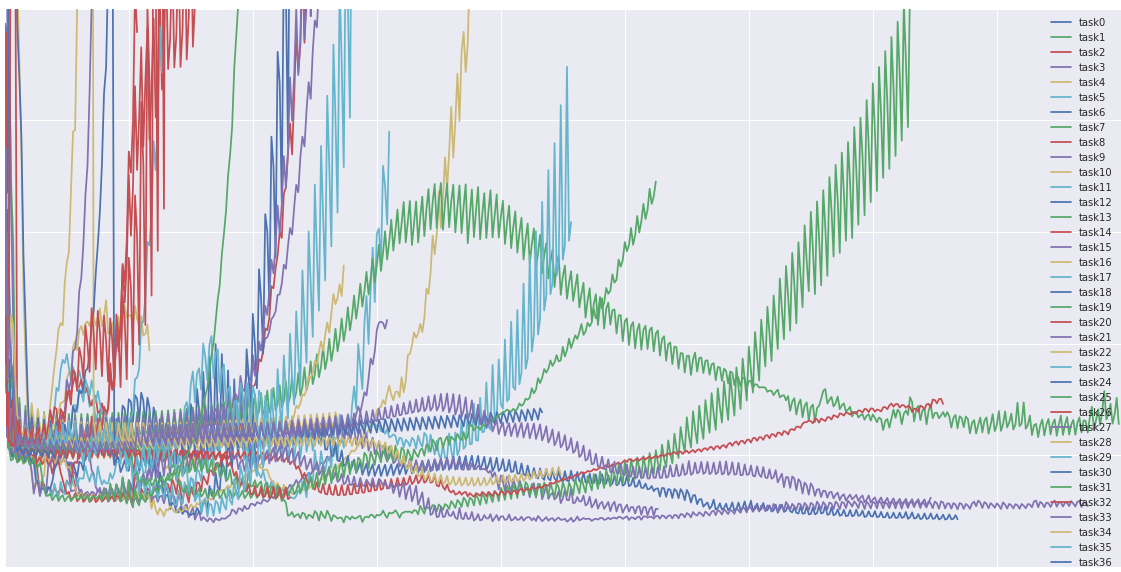

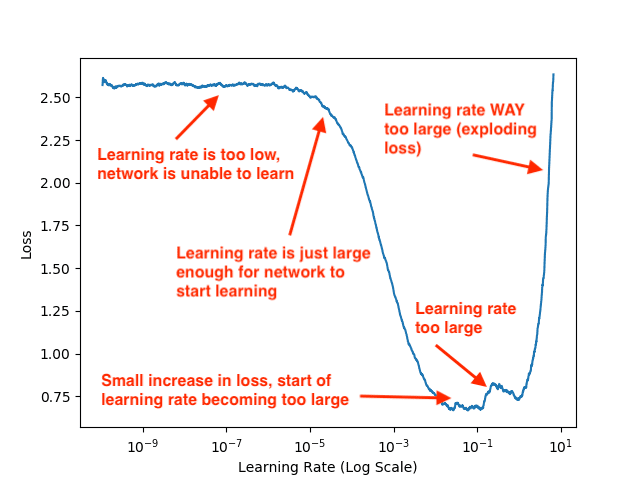

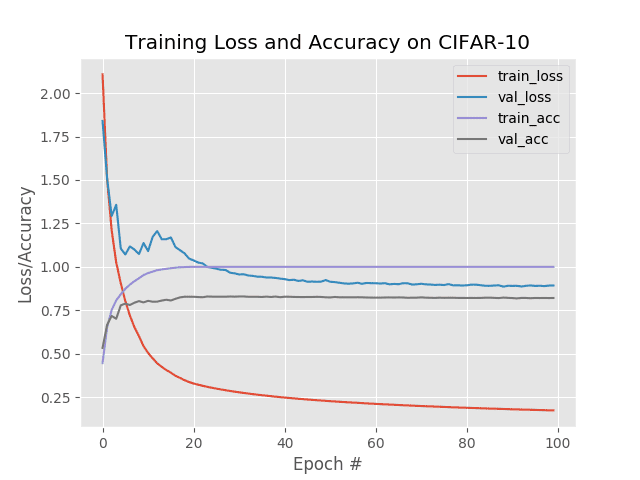

- Specifically, increasing the learning rate speeds up the learning of your model, yet risks overshooting its minimum loss. Reducing batch size means your model uses fewer samples to calculate the loss in each iteration of learning. Beyond that, these precious hyperparameters receive little attention. We tune them to minimize our training loss.

What does it mean to reduce batch size?

- Reducing batch size means your model uses fewer samples to calculate the loss in each iteration of learning. Beyond that, these precious hyperparameters receive little attention. We tune them to minimize our training loss. Then use “more advanced” regularization approaches to improve our models, reducing overfitting. Is that the right approach?

Does learning rate to batch size influence the generalization capacity of DNN?

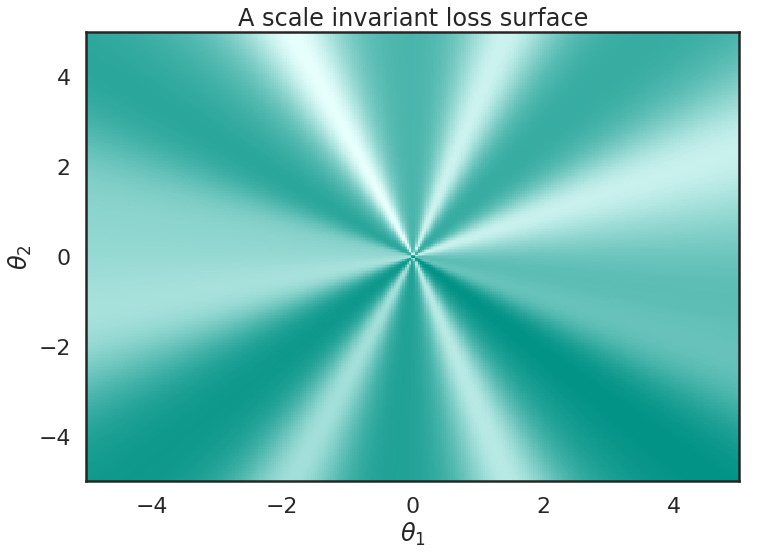

- Width of Minima Reached by Stochastic Gradient Descent is Influenced by Learning Rate to Batch Size Ratio. The authors give the mathematical and empirical foundation to the idea that the ratio of learning rate to batch size influences the generalization capacity of DNN.

Do larger batch sizes converge faster?

- In general: Larger batch sizes result in faster progress in training, but don't always converge as fast. Smaller batch sizes train slower, but can converge faster. It's definitely problem dependent. In general, the models improve with more epochs of training, to a point. They'll start to plateau in accuracy as they converge.
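
One way to make the trade-off in this answer concrete is to count parameter updates per epoch. This is only a sketch under the assumption that each batch triggers exactly one update; the function name and dataset size are hypothetical.

```python
import math


def updates_per_epoch(dataset_size: int, batch_size: int) -> int:
    """Number of parameter updates in one pass over the data.

    Assumption: one update per batch, with a final partial batch kept.
    """
    return math.ceil(dataset_size / batch_size)


N = 50_000  # hypothetical dataset size
for batch_size in (128, 640, 3200):
    print(batch_size, updates_per_epoch(N, batch_size))
```

A larger batch finishes an epoch in fewer updates, which can mean faster wall-clock progress on parallel hardware, but each epoch then moves the weights fewer times, which is one reason convergence per epoch can be slower.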

|

Control Batch Size and Learning Rate to Generalize Well - NeurIPS

This paper reports both theoretical and empirical evidence of a training strategy that we should control the ratio of batch size to learning rate not too large to |

|

Which Algorithmic Choices Matter at Which Batch Sizes? - NIPS

Increasing the batch size is a popular way to speed up neural network training, optimal learning rates and large batch training, making it a useful tool to |

|

Training ImageNet in 1 Hour - Facebook Research

be large, which implies nontrivial growth in the SGD mini-batch size. In this paper ... batch $\cup_j B_j$ of size $kn$ and learning rate $\hat{\eta}$ yields: $\hat{w}_{t+1} = w_t - \hat{\eta} \frac{1}{kn} \sum \dots$ |

|

CROSSBOW: Scaling Deep Learning with Small Batch Sizes on

To fully utilise all GPUs, systems must increase the batch size, which hinders statistical efficiency. Users tune hyper-parameters such as the learning rate to |

|

On the Generalization Benefit of Noise in Stochastic Gradient Descent

However, unlike our previous experiments, we notice that the optimal learning rate increases as square root of the batch size for small batch sizes, before leveling |

|

On the Generalization Benefit of Noise in Stochastic Gradient Descent

(2016), who showed that the test accuracy often falls if one holds the learning rate constant and increases the batch size, even if one continues training until the |

|

Why Does Large Batch Training Result in Poor - HUSCAP

that gradually increases batch size during training. We also explain learning rate during training can be useful for accelerating the training. In this work, we |
