l2 regularization neural network

|

On the training dynamics of deep networks with L2 regularization

To gain a better theoretical understanding we consider the setup of an infinitely wide neural network trained using gradient flow. We focus on networks with |

|

On Regularization and Robustness of Deep Neural Networks

30 nov. 2018 In particular we study the connection between adversarial training under l2 perturbations and penalizing with the RKHS norm |

|

A baseline regularization scheme for transfer learning with

14 oct. 2019 learning with convolutional neural networks. ... We argue that the standard L2 regularization which drives the parame-. |

|

Regularization Matters: A Nonparametric Perspective on

With l2 regularization overparametrized neural network trained by GD resembles the solution of kernel ridge regression. • We further prove that by adding |

|

Adaptive L2 Regularization in Person Re-Identification

based datasets [12] [13]. L2 regularization imposes constraints on the parameters of neural networks and adds penalties to the objective function. |

|

Three Mechanisms of Weight Decay Regularization

29 oct. 2018 Weight decay is one of the standard tricks in the neural network toolbox ... weight decay has been shown to outperform L2 regularization for ... |

|

HAL

21 oct. 2019 ing with L1 and L2 regularization among them and against equivalent-size feed-forward neural networks with simple (fully-connected) and ... |

|

Regularization techniques for fine-tuning in neural machine translation

networks is fine-tuning (Hinton and Salakhutdi- of the weight matrices of the neural network are ... L2-norm regularization is widely used for ma-. |

|

Effect of the regularization hyperparameter on deep learning- based

Demonstrations rely on training U-net on small LGE-MRI datasets using the arbitrarily selected L2 regularization values. The remaining hyperparameters are to |

|

Regularization for Deep Learning - UNIST

One of the main problems that hindered the progress in deep learning research was L2 regularization promotes grouping – results in equal weights for |

|

Regularization in Deep Neural Networks - OPUS at UTS

Chapter 3: We introduce a new regularization technique named “Shakeout” to improve the generalization performance of deep neural networks beyond Dropout. Compared |

|

Regularization in Neural Networks - CEDAR

Topics in Neural Net Regularization • Definition of regularization • Methods 1 Limiting capacity: no. of hidden units 2 Norm Penalties: L2- and L1- |

|

On the training dynamics of deep networks with L2 regularization

We study the role of L2 regularization in deep learning and uncover simple relations between the performance of the model the L2 coefficient the learning |

|

Neural Network Regularization - Classes

The sharing of the weights means that every model is very strongly regularized • It's a much better regularizer than L2 or L1 penalties that pull the weights |

|

Deep learning - Optimization and Regularization in deep networks

6 mar 2021 · Adaptive learning rate methods have become the norm in training neural networks but in some applications they fail to converge to an optimal |

|

Learning Neural Networks with Adaptive Regularization - OpenReview

Our Contributions To answer the above question we develop an adaptive regularization method for neural nets inspired by the empirical Bayes method Motivated |

|

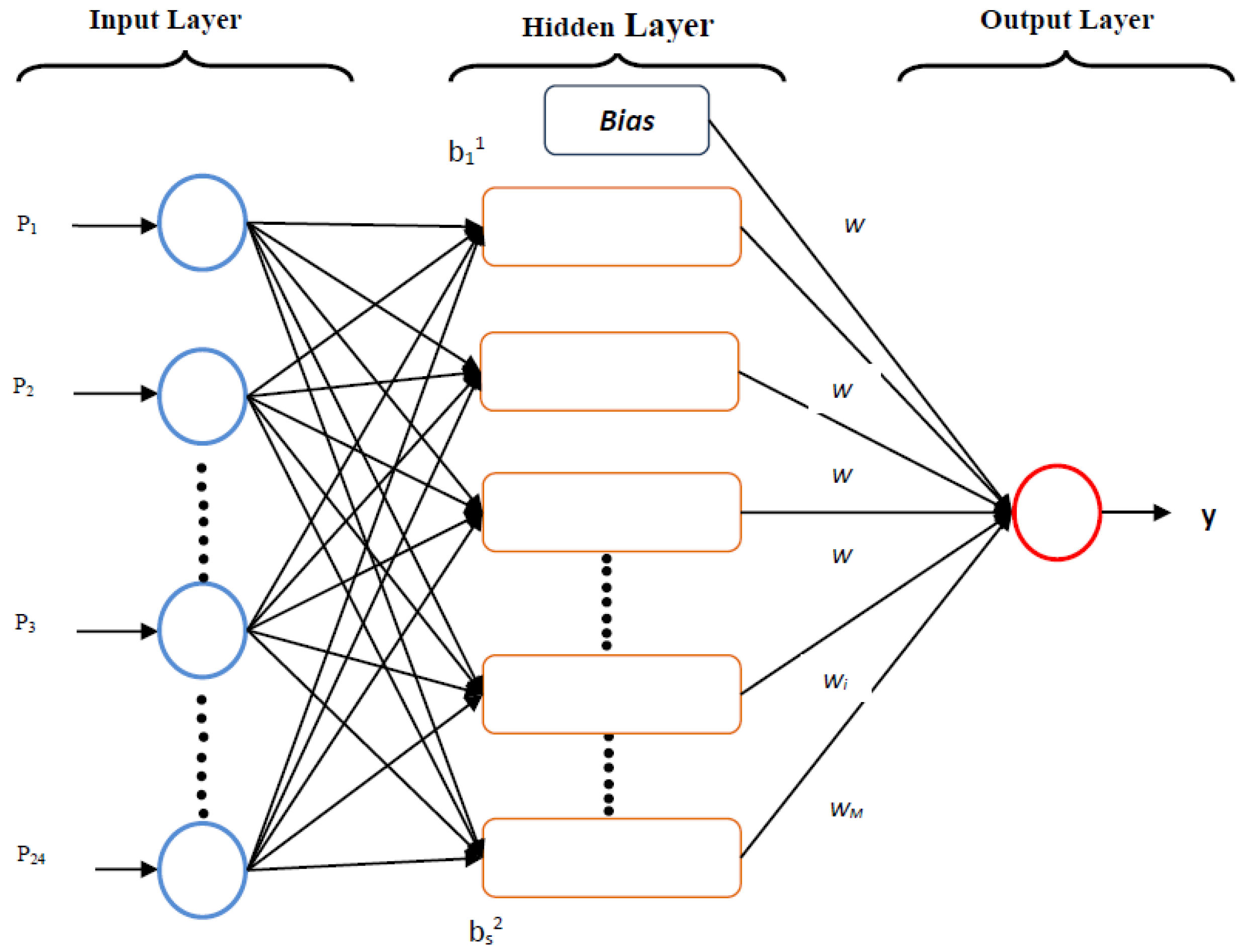

An Analysis of the Regularization Between L2 and Dropout in Single

In this paper an analysis of different regularization techniques between L2-norm and dropout in a single hidden layer neural networks are investigated on the |

|

arXiv:1810.05547v2 [cs.LG] 15 Oct 2019

15 Oct 2019 · In this paper we introduce a physics-driven regularization method for training of deep neural networks (DNNs) for use in engineering |

|

A New Angle on L2 Regularization - arXiv

28 jui 2018 · Is it possible to regularize a neural network against adversarial examples by only using weight decay? The idea is simple enough and has been |

What is L2 regularization in neural networks?

L2 regularization is the most common type of all regularization techniques and is also commonly known as weight decay or ridge regression. The mathematical derivation of this regularization, as well as the mathematical explanation of why it reduces overfitting, is quite long and complex.

What is L2 Regularisation?

L2 regularization acts like a force that shrinks each weight by a small fraction of its value at each iteration. Because the shrinkage is proportional to the weight itself, weights move toward zero but generally never become exactly zero. L2 regularization penalizes (weight)², and an additional hyperparameter, the regularization rate (lambda), controls the strength of the penalty.

What is the effect of L2 regularization in a neural network?

L2 regularization shrinks all the weights toward small values, which keeps the model from relying too heavily on any particular node or feature and thereby reduces overfitting. In L2 regularization, the sum of all squared parameters, scaled by lambda, is added to the squared difference between the actual outputs and the predictions. As with L1, increasing lambda decreases the parameter values, because the penalty on them grows.
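The answers above can be made concrete with a small sketch. It is not taken from any of the listed papers: it assumes a plain linear model trained by gradient descent with NumPy, and the names `l2_penalized_loss`, `gradient_step`, and `fit`, along with the toy data, are hypothetical and chosen only for illustration. It shows the penalized objective (squared error plus lambda times the sum of squared weights) and how the penalty's gradient shrinks each weight in proportion to its size.

```python
import numpy as np

def l2_penalized_loss(w, X, y, lam):
    """Mean squared error plus lam * (sum of squared weights)."""
    residual = X @ w - y
    return np.mean(residual ** 2) + lam * np.sum(w ** 2)

def gradient_step(w, X, y, lam, lr):
    """One gradient-descent step on the penalized loss."""
    residual = X @ w - y
    grad_data = 2.0 * X.T @ residual / len(y)  # gradient of the squared-error term
    grad_penalty = 2.0 * lam * w               # gradient of lam * sum(w**2)
    # The penalty term alone rescales w by (1 - 2 * lr * lam) each step.
    return w - lr * (grad_data + grad_penalty)

def fit(X, y, lam, lr=0.05, steps=500):
    """Run gradient descent from zero-initialized weights."""
    w = np.zeros(X.shape[1])
    for _ in range(steps):
        w = gradient_step(w, X, y, lam, lr)
    return w

# Toy regression data (assumed for illustration only).
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
y = X @ np.array([2.0, -1.0, 0.5]) + 0.1 * rng.normal(size=50)

w_plain = fit(X, y, lam=0.0)  # no regularization
w_reg = fit(X, y, lam=1.0)    # L2-regularized

print("weight norm without penalty:", np.linalg.norm(w_plain))
print("weight norm with penalty:   ", np.linalg.norm(w_reg))
```

With a larger lambda the fitted weights have a smaller norm, yet none of them is driven exactly to zero, which matches the description of L2 regularization given above.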

|

Regularization in Neural Networks - CEDAR

in Neural Net Regularization • Definition of regularization • Methods 1 Limiting capacity: no. of hidden units 2 Norm Penalties: L2- and L1- regularization 3 |

|

Regularization Methods in Neural Networks - DiVA

In this report, four common regularization methods for dealing with overfitting are evaluated The methods L1, L2, Early stopping and Dropout are first tested with |

|

Deep Neural Network Regularization for Feature - IEEE Xplore

3 May 2019 · Network embedded with the classical regularization techniques such as l1, l2 regularization are mainly used to reduce the overfitting and |

|

Regularization Matters: Generalization and Optimization of Neural

For infinite-width two layer networks with l2-regularized loss, noisy gradient descent finds a global optimizer in a polynomial number of iterations This improves |

|

Regularization - Sebastian Raschka

STAT 479: Deep Learning SS 2019 2 Overview: Regularization / Regularizing Effects • Early stopping •L1/L2 regularization (norm penalties) • Dropout |

|

An Analysis of the Regularization Between L2 and Dropout - UKSim

between L2-norm and dropout in a single hidden layer neural networks are investigated on the MNIST dataset In our experiment, both regularization methods |

|

Regularization in Deep Neural Networks - OPUS at UTS - University

ity of deep neural networks beyond Dropout, via introducing a combination of L0, L1, and L2 regularization effect into the network training Then we considered |

|

Regularization for Deep Learning

to the objective function In other academic communities, L2 regularization is also known as ridge regression or Tikhonov regularization We can gain some insight |
