An Algorithm for Fast Convergence in Training Neural Networks

|

An algorithm for fast convergence in training neural

In this work, two modifications of the Levenberg-Marquardt algorithm for feedforward neural networks are studied. One modification is made to the performance index |

|

Efficient Algorithm for Training Neural Networks with one Hidden Layer

The algorithm has a convergence rate similar to that of the Levenberg-Marquardt (LM) method, is less computationally intensive, and requires less memory. This is |

|

A Very Fast Learning Method for Neural Networks Based on

Abstract: This paper introduces a learning method for two-layer feedforward neural networks based on sensitivity analysis, which uses a linear training |

How do you make neural networks converge faster?

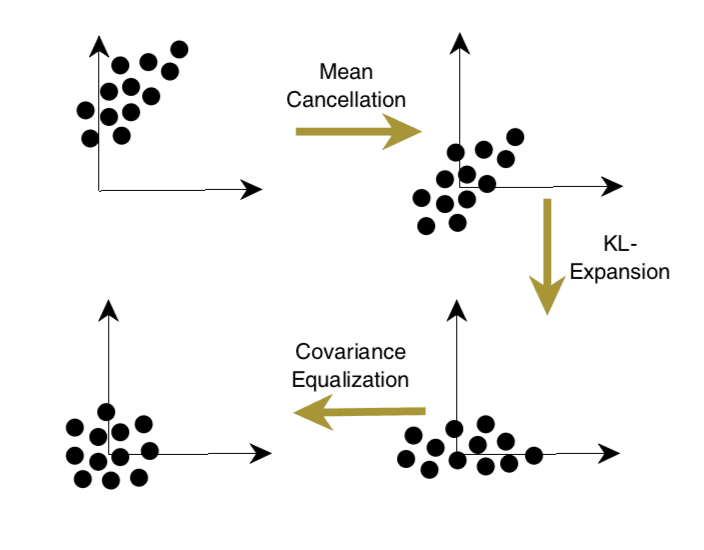

Input normalization

Input normalization is one of the most helpful ways to make neural networks converge faster.

In many learning problems, training is faster when each input variable averages to zero over the training set.
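Mean-centering of this kind can be sketched in a few lines of plain Python. This is a minimal illustration, not a prescribed implementation: the function name `center_features` and the toy data are assumptions made for the example.

```python
def center_features(rows):
    """Subtract each column's mean so every input variable averages to zero."""
    n_rows = len(rows)
    n_cols = len(rows[0])
    # Per-variable (per-column) mean over the training set.
    means = [sum(row[j] for row in rows) / n_rows for j in range(n_cols)]
    centered = [[row[j] - means[j] for j in range(n_cols)] for row in rows]
    return centered, means


# Hypothetical toy data: three samples, two input variables.
data = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]]
centered, means = center_features(data)
print(means)
print(centered)
```

The means are returned alongside the centered data so that the same shift can be applied to inputs seen later, for example at prediction time.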

We can normalize the input data by subtracting the mean value from each input variable.

How do you train a neural network?

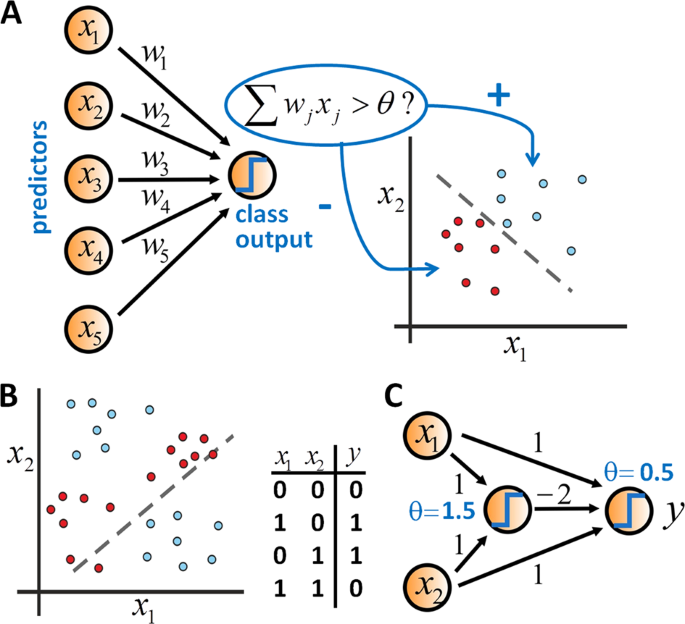

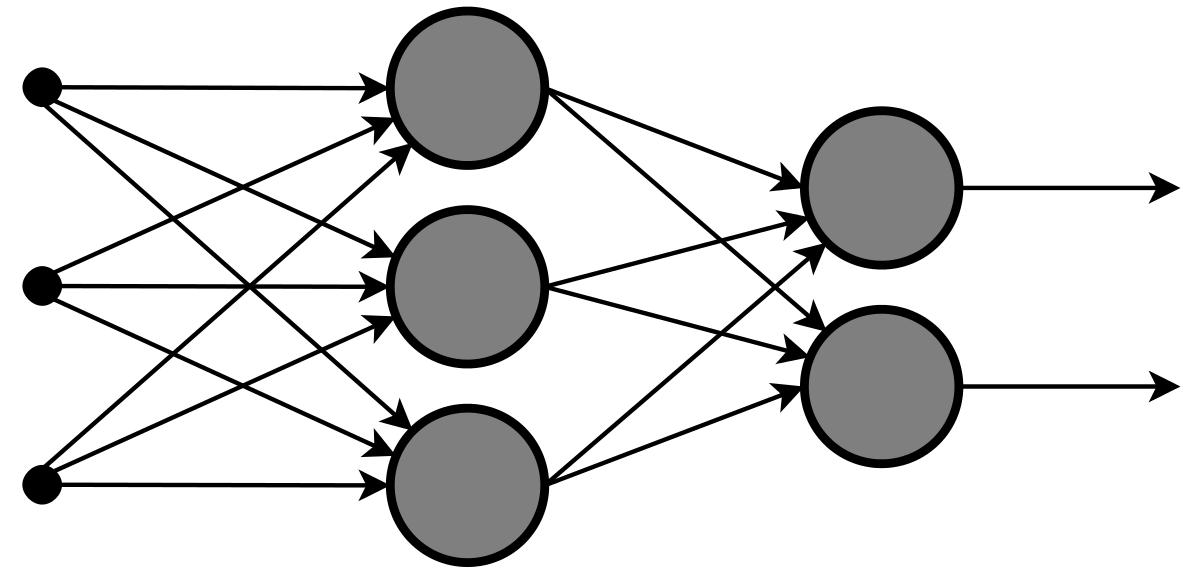

The learning (training) process of a neural network is an iterative process in which the calculations are carried out forward and backward through each layer in the network until the loss function is minimized.

The entire learning process can be divided into three main parts: Forward propagation (Forward pass)

What is the best training algorithm for neural networks?

Backpropagation is the most common training algorithm for neural networks.

It makes gradient descent feasible for multi-layer neural networks.
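To make the forward and backward passes concrete, here is a minimal sketch in plain Python of gradient descent with hand-written backpropagation. Everything in it is an assumption chosen for illustration: a network with one input, one tanh hidden unit, and one linear output, a mean-squared-error loss, toy data, fixed initial weights, and a learning rate of 0.1.

```python
import math


def forward(params, x):
    """Forward pass: input -> tanh hidden unit -> linear output."""
    w1, b1, w2, b2 = params
    h = math.tanh(w1 * x + b1)
    y = w2 * h + b2
    return h, y


def loss_and_grads(params, xs, ts):
    """Mean squared error and its gradient, obtained by backpropagation."""
    w1, b1, w2, b2 = params
    n = len(xs)
    loss = 0.0
    grads = [0.0, 0.0, 0.0, 0.0]
    for x, t in zip(xs, ts):
        h, y = forward(params, x)
        err = y - t
        loss += err * err / n
        dy = 2.0 * err / n        # dLoss/dy
        dh = dy * w2              # backward through the output layer
        dz = dh * (1.0 - h * h)   # backward through tanh
        grads[0] += dz * x        # dLoss/dw1
        grads[1] += dz            # dLoss/db1
        grads[2] += dy * h        # dLoss/dw2
        grads[3] += dy            # dLoss/db2
    return loss, grads


def train(params, xs, ts, lr=0.1, steps=200):
    """Repeat forward pass, backward pass, and weight update."""
    history = []
    for _ in range(steps):
        loss, grads = loss_and_grads(params, xs, ts)
        history.append(loss)
        params = [p - lr * g for p, g in zip(params, grads)]
    return params, history


# Hypothetical toy problem: fit t = 0.5 * x on five points.
xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
ts = [0.5 * x for x in xs]
params, history = train([0.5, 0.0, 0.5, 0.0], xs, ts)
print(history[0], history[-1])
```

The backward pass reuses the hidden activation `h` computed in the forward pass, which is what keeps the gradient computation cheap as layers are added.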

TensorFlow handles backpropagation automatically, so you can train models without implementing the algorithm yourself.

The three main types of learning in neural networks are supervised learning, unsupervised learning, and reinforcement learning.

|

An Algorithm for Fast Convergence in Training Neural Networks

An Algorithm for Fast Convergence in Training Neural Networks. Bogdan M. Wilamowski, University of Idaho, Graduate Center at Boise, 800 Park Blvd., Boise |



|

Training Feed-forward Neural Networks Using the Gradient Descent

Keywords: BP Algorithm; Optimal Stepsize; Fast Convergence; Hessian Matrix Computation; Feedforward Neural Networks. 1 Introduction |


|

A Very Fast Learning Method for Neural Networks Based on

and a novel supervised learning algorithm for two-layer feedforward neural networks that presents a high convergence speed is proposed. This algorithm |

|

Faster Convergence & Generalization in DNNs

10-Oct-2018 Deep neural networks have gained tremendous popularity in the last few years. They ... The algorithm often used to train these networks is ... |

|

Optimization for deep learning: theory and algorithms

19-Dec-2019 train a neural network from scratch it is very likely that your first few ... of algorithm convergence: make convergence possible |

|

Device Scheduling with Fast Convergence for Wireless Federated

03-Nov-2019 on the training input-output pair {xin |

|

Levenberg–Marquardt Training

09-Mar-2010 In the artificial neural-networks field this algo- ... the Gauss–Newton algorithm |
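For orientation, the standard Levenberg–Marquardt weight update is written below. The symbols are assumptions introduced here, not taken from the listed paper: $\mathbf{w}_k$ is the weight vector at iteration $k$, $\mathbf{e}_k$ the vector of output errors, $\mathbf{J}_k$ the Jacobian of $\mathbf{e}_k$ with respect to the weights, and $\mu > 0$ a damping factor.

```latex
\mathbf{w}_{k+1} = \mathbf{w}_k
  - \left( \mathbf{J}_k^{\top} \mathbf{J}_k + \mu \mathbf{I} \right)^{-1}
    \mathbf{J}_k^{\top} \mathbf{e}_k
```

As $\mu \to 0$ the step approaches the Gauss–Newton step, while for large $\mu$ it approaches a gradient descent step of size roughly $1/\mu$.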

| Faster Neural Network Training with Approximate Tensor Operations |

| Neuro-Fuzzy Computing - Size |

| SpiFoG: an efficient supervised learning algorithm for the network of |

| A New Learning Algorithm for a Fully Connected Neuro-Fuzzy |

| Comparison of different artificial neural network (ANN) training |


|

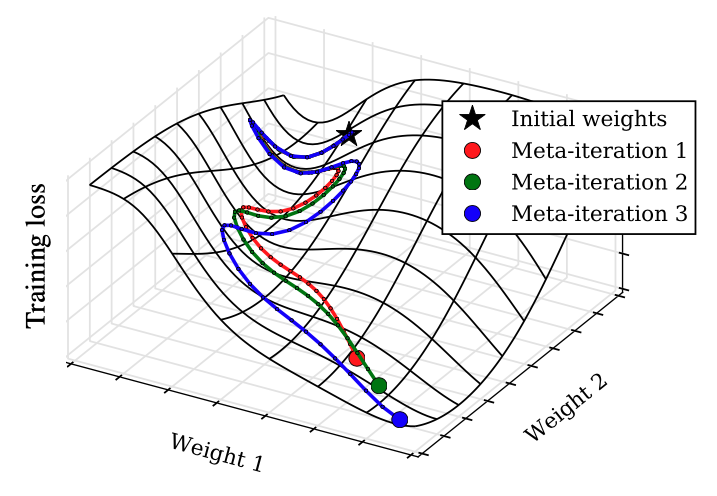

8 Fast Learning Algorithms

Several fast learning algorithms for neural networks work by trying to find the best value of γ which still guarantees convergence. Introduction of the momentum |
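The effect of a momentum term can be illustrated with a small sketch, assuming that γ in the snippet above denotes the learning rate (`lr` in the code). The quadratic error surface, the curvatures 1 and 10, the momentum coefficient 0.5, and all function names are hypothetical choices made for the example.

```python
def minimize(grad, w0, lr, momentum=0.0, tol=1e-6, max_steps=10_000):
    """Gradient descent with an optional momentum term.

    Update rule: v <- momentum * v - lr * grad(w);  w <- w + v.
    Stops once every gradient component is smaller than tol.
    """
    w = list(w0)
    velocity = [0.0] * len(w)
    for step in range(1, max_steps + 1):
        g = grad(w)
        velocity = [momentum * v - lr * gi for v, gi in zip(velocity, g)]
        w = [wi + vi for wi, vi in zip(w, velocity)]
        if max(abs(gi) for gi in grad(w)) < tol:
            return w, step
    return w, max_steps


# Hypothetical error surface E(w) = 0.5 * (1 * w[0]**2 + 10 * w[1]**2).
def quadratic_grad(w):
    return [1.0 * w[0], 10.0 * w[1]]


start = [1.0, 1.0]
w_plain, steps_plain = minimize(quadratic_grad, start, lr=0.1)
w_momentum, steps_momentum = minimize(quadratic_grad, start, lr=0.1, momentum=0.5)
print(steps_plain, steps_momentum)
```

With the same learning rate, the momentum run needs fewer steps on this surface because the accumulated velocity speeds up progress along the shallow direction, where plain gradient descent moves slowly.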

|

Neural Networks: Optimization Part 1

Recap: Gradient Descent Algorithm • In order to Training Neural Nets by Gradient Descent • Gradient For the fastest convergence, ideally, the learning rate |

|

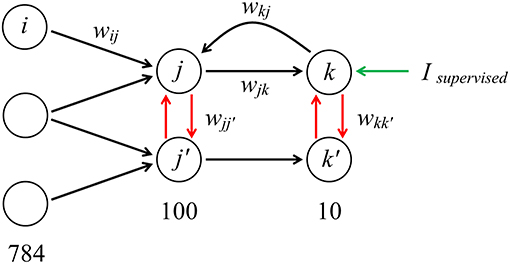

Efficient Algorithm for Training Neural Networks with one Hidden

Efficient Algorithm for Training Neural Networks with one Hidden Layer. Bogdan M ... a similar convergence rate as the Levenberg-Marquardt (LM) method and it is less ... combines the speed of the Newton algorithm with the stability of the |

|

Optic Modified BackPropagation training algorithm for fast - IPCSIT

OMBP: Optic Modified BackPropagation training algorithm for fast convergence of Feedforward Neural Network. Omar Charif, Hichem Omrani, and Philippe |
