Lecture 10: Shannon-Fano-Elias Code Arithmetic Code

Lecture 10: Shannon-Fano-Elias Code Arithmetic Code

Arithmetic Code. • Shannon-Fano-Elias coding. • Arithmetic code. • Competitive optimality of Shannon code. • Generation of random variables.

Example 1

Example 1

2. Shannon-Fano coding: X. P(X). I (bits) steps code code word length: d = 0.4 · 1+0.3 · 2+0.15 · 3+0.1 · 4+0.05 · 5=2.1 [bits/symbol]. Data rate:.

Shannon – Fano Code

Shannon – Fano Code

Shannon–Fano coding named after Claude Elwood Shannon and Robert Fano

1 Entropy

1 Entropy

The Shannon-Fano code is pretty decent: Question: What can you say about the expected number of bits used to encode one (random) symbol? Answer: By

Source Coding techniques: 1- Shannon – Fano Code Shannon

Source Coding techniques: 1- Shannon – Fano Code Shannon

Shannon–Fano coding named after Claude Elwood Shannon and Robert Fano

15 pt Kraft Inequality and Shannon-Fano Codes

15 pt Kraft Inequality and Shannon-Fano Codes

Aug 13 2012 Theorem: Suppose that C is a prefix code and TA has D letters. Then. ? x?SA. D. ?l(x) ? 1. (?) where l(x) = length of codeword C(x).

ECE 587 / STA 563: Lecture 5 – Lossless Compression Outline of

ECE 587 / STA 563: Lecture 5 – Lossless Compression Outline of

Sep 3 2020 A source code is a mapping C from a source alphabet X to D-ary ... as the Shannon–Fano–Elias Code. ... Portable Document Format (PDF).

Data Compression

Data Compression

Shannon-Fano-Elias Codes index inside class ? fixed-length code with K+p bits ... code C aaaa. 0 aaab. 10 aab. 110 ab. 1110 b. 1111 suitable code D.

Lecture 7: Source Coding and Kraft Inequality

Lecture 7: Source Coding and Kraft Inequality

set of finite-length strings of symbol from D-ary alphabet D Expected length L(C) of a source code C(x) for X with pdf p(x) ... Shannon-Fano coding.

Lecture 2: Source coding Conditional Entropy

Lecture 2: Source coding Conditional Entropy

https://www.cs.cmu.edu/~venkatg/teaching/ITCS-spr2013/notes/lect-jan17.pdf

[PDF] Cours/TD 5 Codage Shannon Codage arithmétique : Elias

[PDF] Cours/TD 5 Codage Shannon Codage arithmétique : Elias

Cours/TD 5 Codage Shannon Codage arithmétique : Elias 5 1 De l'algorithme de Fano et Shannon au codage arithméthique Si N-1

[PDF] Cours/TD 2 Codage ”proche de lentropie”

[PDF] Cours/TD 2 Codage ”proche de lentropie”

Idée naturelle (Shannon et Fano) : associer aux symboles de notre alphabet des mots code binaires dont la longueur est égale au contenu d'information des

[PDF] Théorie de linformation - Chap 1: Codage Source - Esentn

[PDF] Théorie de linformation - Chap 1: Codage Source - Esentn

Algorithme de Huffman 6 Algorithme de Fano-Shannon 7 Limitations de Fano-Shannon et Huffman et Supériorité de LZW 8 Code par répétition

[PDF] Codage entropique - CU-ELBAYADHDZ

[PDF] Codage entropique - CU-ELBAYADHDZ

Le codage de Shannon-Fano est la première méthode de codage entropique efficace développée en même temps par Claude Shannon et Robert Fano en 1949 Cette

[PDF] Notes de cours Codage de Huffman

[PDF] Notes de cours Codage de Huffman

Code développé en 1960 par Claude E Shannon (MIT) et Robert M Fano (Laboratoires de Bell) • Assignation du code selon la probabilité de chaque symbole

[PDF] techniques de codage et de compression

[PDF] techniques de codage et de compression

1 2 1 Quels sont les symboles de code ? Quelle est la valence du codage ? Chaque symbole de code CODAGES COMPRESSIFS : SHANNON FANO-SHANNON ET HUFFMAN

[PDF] Introduction au codage source - Laurent Oudre

[PDF] Introduction au codage source - Laurent Oudre

Exercice 1 : Codes non singuliers déchiffrables et instantanés 1 Construire le code de Shannon-Fano associé `a la variable aléatoire X et calculer la

[PDF] Cours 6 - Introduction au codage source - Laurent Oudre

[PDF] Cours 6 - Introduction au codage source - Laurent Oudre

Master Ingénierie et Innovations en Images et Réseaux - 1`ere année 2017-2018 Code de Shannon-Fano exemple au début de la création du format ZIP)

[PDF] Information Calcul et Communication Module 2 - Moodle EPFL

[PDF] Information Calcul et Communication Module 2 - Moodle EPFL

compression optimale : code de Huffman ? compression avec pertes Shannon-Fano (bis) Th de Shannon Codes de Huffman Compression avec pertes Conclusion 1

[PDF] Codage de Source - Cédric Richard

[PDF] Codage de Source - Cédric Richard

Codage de source Digital Communications B Sklar Prentice Hall 1 Modèle de communication : le paradigme de Shannon Code de Shannon-Fano

Information Theory - Lecture 5 Lecturer: Hawraa Shareef

Source Coding techniques:

1- Shannon Fano Code

ShannonFano coding, named after Claude Elwood Shannon and Robert Fano, is a technique for constructing a prefix code based on a set of symbols and their probabilities. The algorithm works, and it produces fairly efficient variable-length encodings; when the two smaller sets produced by a partitioning are in fact of equal probability, the one bit of information used to distinguish them is used most efficiently. Unfortunately, ShannonFano does not always produce optimal prefix codes For this reason, ShannonFano is almost never used; Huffman coding is almost as computationally simple and produces prefix codes that always achieve the lowest expected code word length.Shannon-Fano Algorithm

A ShannonFano tree is built according to a specification designed to define an effective code table. The actual algorithm is simple1- For a given list of symbols, develop a corresponding list of probabilities or

frequency known2- Sort the lists of symbols according to frequency, with the most frequently

occurring symbols at the left and the least common at the right3- Divide the list into two parts, with the total frequency counts of the left part

being as close to the total of the right as possible4- The left part of the list is assigned the binary digit 0, and the right part is

assigned the digit 1. This means that the codes for the symbols in the first part will all start with 0ˬ and the codes in the second part will all start with 15- Recursively apply the steps 3 and 4 to each of the two halves, subdividing

groups and adding bits to the codes until each symbol has become a corresponding code leaf on the treeExample:

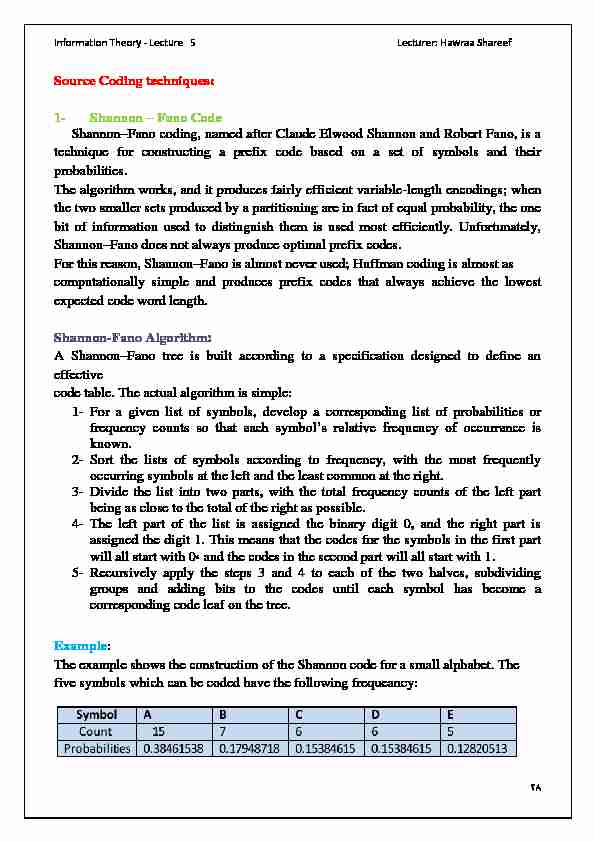

The example shows the construction of the Shannon code for a small alphabet. The five symbols which can be coded have the following frequeancy:Information Theory - Lecture 5 Lecturer: Hawraa Shareef

All symbols are sorted by frequency, from left to right. Putting the dividing line between symbols B and C results in a total of 22 in the left group and a total of 17 in the right group. This minimizes the difference in totals between the two groups. With this division, A and B will each have a code that starts with a 0 bit, and the C, D, and E codes will all start with a 1. Subsequently, the left half of the tree gets a new division between A and B, which puts A on a leaf with code 00 and B on a leaf with code 01. After four division procedures, a tree of codes results. In the final tree, the three symbols with the highest frequencies have all been assigned 2-bit codes, and two symbols with lower counts have 3-bit codes as shown table below:Symbol A B C D E

Code 00 01 10 110 111

Results in 2 bits for A, B and C and per 3 bits for D and E an average bit number of:Information Theory - Lecture 5 Lecturer: Hawraa Shareef

Information Theory - Lecture 5 Lecturer: Hawraa Shareef

2- Huffman coding:

We earlier looked at Shannon code, which is a pretty good construction of a prefix code for a given distribution. However, the best prefix code for a general source code distribution is the Huffman Code.Huffman Algorithm

1- Find 2 symbols with the smallest probability and then merge them to create a

2- Then merge the next 2 symbols with the smallest probability to create a new

3- Repeat steps 1 and 2 until there is only 1 symbol left. At this point, we created

a binary tree. The paths traversed from the root to the leaves are the prefix codes.Example:

Information Theory - Lecture 5 Lecturer: Hawraa Shareef

Information Theory - Lecture 5 Lecturer: Hawraa Shareef

Information Theory - Lecture 5 Lecturer: Hawraa Shareef

Information Theory - Lecture 5 Lecturer: Hawraa Shareef

Huffman Coding Base of JPEG Image Compression

Huffman coding can be used to compress all sorts of data.Example:

Suppose we have a 5×5 raster image with 8-bit color, i.e.256 different colors. The uncompressed image will take 5 x 5 x 8 = 200bits of storage. First, we count up how many times each color occurs in the image. Then we sort the colors in order of decreasing frequency. We end up with a row that looks like this: Now we put the colors together by building a tree such that the colors farthest from the root are the least frequent. The colors are joined in pairs, with a node forming the connection. A node can connect either to another node or to a color. In our example, the tree might look like this:Information Theory - Lecture 5 Lecturer: Hawraa Shareef

Our result is known as a Huffman tree. It can be used for encoding and decoding. Each color is encoded as follows. We create codes by moving from the root of the tree to each color. If we turn right at a node, we write a 1, and if we turn left 0. This process yields a Huffman code table in which each symbol is assigned a bit code such that the most frequently occurring symbol has the shortest code, while the least common symbol is given the longest code. ¾ Because each color has a unique bit code that is not a prefix of any other, the colors can be replaced by their bit codes in the image file. The most frequently occurring color, white, will be represented with just a single bit rather than 8 bits. Black will take two bits. Red and blue will take three. ¾ After these replacements are made, the 200-bit image will be compressed to14 x 1 + 6 x 2 + 3 x 3 + 2 x 3 = 41 bits, which is about 5

bytes compared to 25 bytes in the original image. ¾ Of course, to decode the image the compressed file must include the code table, which takes up some space. Each bit code derived from the Huffman tree unambiguously identifies a color, so the compression loses no information. ¾ This compression technique is used broadly to encode music, images, and certain communication protocols.quotesdbs_dbs29.pdfusesText_35[PDF] codage de huffman exercice corrigé

[PDF] codage arithmétique pdf

[PDF] codage arithmétique algorithme

[PDF] représentation des nombres signés exercices corrigés

[PDF] exercice de systeme de numeration

[PDF] système binaire informatique

[PDF] calcul nombre binaire

[PDF] cours sur le calcul binaire pdf

[PDF] codage et représentation de l'information exercices corrigés

[PDF] le codage informatique

[PDF] exercice corrigé codage source

[PDF] combien d'information sont représentées par 15 bits

[PDF] virgule fixe et virgule flottant pdf

[PDF] virgule fixe exercices corrigés