EFFECTIVE AND INCONSPICUOUS OVER-THE-AIR ADVERSARIAL EXAMPLES WITH ADAPTIVE FILTERING

Patrick O'Reilly¹, Pranjal Awasthi², Aravindan Vijayaraghavan¹, Bryan Pardo¹

¹Northwestern University, ²Google Research

ABSTRACT

While deep neural networks achieve state-of-the-art performance on many audio classification tasks, they are known to be vulnerable to adversarial examples, artificially generated perturbations of natural instances that cause a network to make incorrect predictions. In this work we demonstrate a novel audio-domain adversarial attack that modifies benign audio using an interpretable and differentiable parametric transformation: adaptive filtering. Unlike existing state-of-the-art attacks, our proposed method does not require a complex optimization procedure or generative model, relying only on a simple variant of gradient descent to tune filter parameters. We demonstrate the effectiveness of our method by performing over-the-air attacks against a state-of-the-art speaker verification model and show that our attack is less conspicuous than an existing state-of-the-art attack while matching its effectiveness. Our results demonstrate the potential of transformations beyond direct waveform addition for concealing high-magnitude adversarial perturbations, allowing adversaries to attack more effectively in challenging real-world settings.

Index Terms: Adversarial examples, speaker verification

1. INTRODUCTION

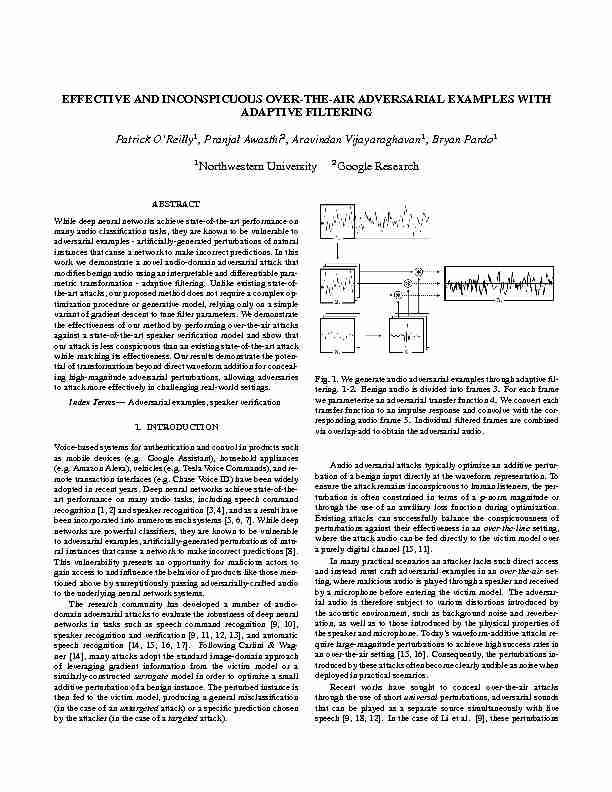

Fig. 1. We generate audio adversarial examples through adaptive filtering. (1-2) Benign audio is divided into frames. (3) For each frame we parameterize an adversarial transfer function. (4) We convert each transfer function to an impulse response and convolve with the corresponding audio frame. (5) Individual filtered frames are combined via overlap-add to obtain the adversarial audio.

Voice-based systems for authentication and control in products such as mobile devices (e.g. Google Assistant), household appliances (e.g. Amazon Alexa), vehicles (e.g. Tesla Voice Commands), and remote transaction interfaces (e.g. Chase Voice ID) have been widely adopted in recent years. Deep neural networks achieve state-of-the-art performance on many audio tasks, including speech command recognition [1, 2] and speaker recognition [3, 4], and as a result have been incorporated into numerous such systems [5, 6, 7]. While deep networks are powerful classifiers, they are known to be vulnerable to adversarial examples, artificially generated perturbations of natural instances that cause a network to make incorrect predictions [8]. This vulnerability presents an opportunity for malicious actors to gain access to and influence the behavior of products like those mentioned above by surreptitiously passing adversarially crafted audio to the underlying neural network systems.

The research community has developed a number of audio-domain adversarial attacks to evaluate the robustness of deep neural networks in tasks such as speech command recognition [9, 10], speaker recognition and verification [9, 11, 12, 13], and automatic speech recognition [14, 15, 16, 17]. Following Carlini & Wagner [14], many attacks adopt the standard image-domain approach of leveraging gradient information from the victim model or a similarly constructed surrogate model in order to optimize a small additive perturbation of a benign instance. The perturbed instance is then fed to the victim model, producing a general misclassification (in the case of an untargeted attack) or a specific prediction chosen by the attacker (in the case of a targeted attack).

Audio adversarial attacks typically optimize an additive perturbation of a benign input directly at the waveform representation. To ensure the attack remains inconspicuous to human listeners, the perturbation is often constrained in terms of a $p$-norm magnitude or through the use of an auxiliary loss function during optimization. Existing attacks can successfully balance the conspicuousness of perturbations against their effectiveness in an over-the-line setting, where the attack audio can be fed directly to the victim model over a purely digital channel [15, 11].

In many practical scenarios an attacker lacks such direct access and instead must craft adversarial examples in an over-the-air setting, where malicious audio is played through a speaker and received by a microphone before entering the victim model. The adversarial audio is therefore subject to various distortions introduced by the acoustic environment, such as background noise and reverberation, as well as to those introduced by the physical properties of the speaker and microphone. Today's waveform-additive attacks require large-magnitude perturbations to achieve high success rates in an over-the-air setting [15, 16]. Consequently, the perturbations in[...] deployed in practical scenarios.

Recent works have sought to conceal over-the-air attacks through the use of short universal perturbations, adversarial sounds that can be played as a separate source simultaneously with live speech [9, 18, 12]. In the case of Li et al. [9], these perturbations can be disguised as environmental sounds (e.g. phone notifications). However, these attacks still optimize perturbations directly at the waveform, which can result in characteristic noise-like artifacts. Existing attacks at non-waveform representations either produce conspicuous, highly particular sounds [10] or are not demonstrated over-the-air [11].

In the image domain, researchers have sought to address an analogous perceptibility/effectiveness trade-off by moving away from bounded additive perturbations in pixel space. Instead, recent works use differentiable parametric transformations to modify the content of benign images in ways that, while perceptible, may be explained away as natural artifacts of the photographic process (e.g. motion blur [19], shadows [20], and color adjustment [21, 22]). The resulting perturbations remain inconspicuous despite large $p$-norm magnitudes, allowing for more potent attacks. However, the transformations employed are specific to the image domain.

Inspired by these works, we propose a novel audio-domain attack (Section 2) that introduces perturbations through adaptive filtering rather than the standard approach of direct addition at the waveform. We then demonstrate the effectiveness of our proposed attack against a state-of-the-art speaker verification model in a challenging simulated over-the-air setting and corroborate our results in a real-world over-the-air setting. Finally, we describe the results of a listener study that establishes our proposed attack as less conspicuous than a waveform-additive baseline with a state-of-the-art frequency-masking loss [15, 23, 13, 24] while matching its effectiveness.¹

2. ADAPTIVE FILTERING ATTACK

We propose to attack using adaptive filtering, a differentiable parametric method that dynamically shapes the frequency content of audio. By crafting adversarial features directly in the frequency domain, we avoid the noise-like artifacts associated with unstructured waveform perturbations.

2.1. Overview

We divide an audio input $x$ into $T$ fixed-length frames. For the $t$-th audio frame $x^{(t)}$, we parameterize a finite impulse response (FIR) filter by specifying the transfer function $H_t(f)$ over $F$ frequency bands. We define $H$ as the full adaptive filter over all $T$ frames, i.e. $H = [H_1(f), \ldots, H_T(f)]$. The full adaptive filter $H$ is thus specified by $T \times F$ total parameters, typically a fraction of the number required for an additive perturbation of the input at the waveform. To apply the filter, we map each transfer function to a time-domain impulse response via the inverse discrete Fourier transform and apply a Hann window, as in Engel et al. [25]. Each impulse response is then convolved with the corresponding input frame via multiplication in the Fourier domain, and the resulting filtered frames are synthesized via overlap-add with a Hann window to avoid boundary artifacts.

We can optimize the parameters of $H$ adversarially in the way we would a traditional additive perturbation. Let $f : \mathbb{R}^d \to \mathbb{R}^n$ be a neural network model mapping $d$-dimensional audio input to an $n$-dimensional representation (e.g. embeddings), and let $H(x)$ denote the application of the adaptive filter to the full input audio $x$ (all $T$ frames), as discussed above. Given a benign input $x \in \mathbb{R}^d$ and a target $y \in \mathbb{R}^n$, we optimize $H \in \mathbb{R}^{T \times F}$ by performing gradient descent on an objective of the form:

$$\arg\min_{H \in \mathbb{R}^{T \times F}} \; L_{adv}(f, x, H, y) + L_{aux}(x, H) \tag{1}$$

Here, the adversarial loss function $L_{adv}$ measures the success of the attack in achieving the outcome $f(H(x)) = y$, and the auxiliary loss function $L_{aux}$ encourages the filtered audio $H(x)$ to resemble the benign audio $x$. To constrain the magnitude of the filter parameters, we perform projected gradient descent on the objective [26]. For projection bound $\epsilon$, at each optimization iteration the parameters of $H$ are projected onto a 2-norm ball of radius $\epsilon$ centered at $0$. A trade-off between adversarial success and imperceptibility is achieved using the selective variant of projected gradient descent proposed by Bryniarski et al. [27]. All losses $L$ are formulated such that $L \le 0$ implies the associated constraint has been satisfied (e.g. the adversary achieves the target output, the filter introduces no perceptible perturbation). Update steps are then taken along the gradient of the adversarial loss when $L_{adv} > 0$ and along the gradient of the auxiliary loss otherwise. This allows us to avoid manually tuning a weighting of the terms in the objective function.

¹ Audio examples are available at https://interactiveaudiolab.github.io/project/audio-adversarial-examples.html
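The filtering and update steps described in this section can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names (`apply_adaptive_filter`, `project_l2`, `selective_pgd_step`), the frame length, the hop size, and the choice to treat the entries of $H$ directly as real-valued per-band gains are all hypothetical. The text does not specify these details, and gradients are assumed to be supplied by an external autodiff framework.

```python
import numpy as np

def apply_adaptive_filter(x, H, frame_len=512, hop=256):
    """Filter audio x with per-frame transfer functions H of shape [T, F].

    Assumption: each row of H holds real gains over F >= 2 frequency bands.
    """
    T, F = H.shape
    ir_len = 2 * (F - 1)               # impulse-response length implied by F bands
    n_fft = frame_len + ir_len - 1     # long enough for linear (not circular) convolution
    # Transfer function -> impulse response via the inverse DFT,
    # then centre it and taper with a Hann window.
    ir = np.fft.irfft(H, n=ir_len, axis=-1)
    ir = np.roll(ir, ir_len // 2, axis=-1) * np.hanning(ir_len)
    # Pad or trim so that exactly T overlapping frames cover the signal.
    needed = (T - 1) * hop + frame_len
    x = np.pad(x, (0, max(0, needed - len(x))))[:needed]
    window = np.hanning(frame_len)
    out = np.zeros(needed + ir_len - 1)
    for t in range(T):
        frame = x[t * hop : t * hop + frame_len] * window
        # Convolution as multiplication in the Fourier domain.
        spec = np.fft.rfft(frame, n_fft) * np.fft.rfft(ir[t], n_fft)
        # Overlap-add the filtered frame into the output.
        out[t * hop : t * hop + n_fft] += np.fft.irfft(spec, n_fft)
    return out

def project_l2(H, eps):
    """Project the filter parameters onto the 2-norm ball of radius eps centred at 0."""
    norm = np.linalg.norm(H)
    return H if norm <= eps else H * (eps / norm)

def selective_pgd_step(H, loss_adv, grad_adv, grad_aux, lr, eps):
    """One selective update: follow the adversarial gradient while
    L_adv > 0, otherwise follow the auxiliary gradient, then project."""
    grad = grad_adv if loss_adv > 0 else grad_aux
    return project_l2(H - lr * grad, eps)
```

In this sketch, centring the impulse response delays the output by `ir_len // 2` samples, so the returned signal is slightly longer than the input. In an attack loop, `loss_adv`, `grad_adv`, and `grad_aux` would be recomputed at every iteration from the victim or surrogate model.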
