Introduction to Data Science

LECTURE NOTES. B.TECH II YEAR – I SEM (R20). (2021-2022). DEPARTMENT OF COMPUTER SCIENCE & ENGINEERING. (DATA SCIENCE, CYBER SECURITY

UNSW SCIENCE School of Maths and Statistics Course outline

Students will study the fundamentals of Data Science as it is applied in Computer Science notes

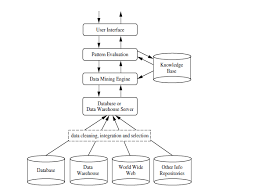

LECTURE NOTES ON DATA MINING & DATA WAREHOUSING

The data mining system can be classified according to the following criteria: database technology, statistics, machine learning, information science.

MS&E 226: Fundamentals of Data Science

MS&E 226: Fundamentals of Data Science. Lecture 2: Linear Regression. Ramesh Johari Note: summary(fm) produces lots of other output too! We are going to.

DATA STRUCTURES LECTURE NOTES

Sartaj Sahni Ellis Horowitz

Department of CSE (Emerging Technologies) STATISTICAL

LECTURE NOTES. MALLA REDDY COLLEGE OF ENGINEERING & TECHNOLOGY. (Autonomous As a data science professional you likely hear the word “Bayesian” a lot.

Fundamentals of Data Science for Engineers (SIE 433/533) Tue/Thu

Main: Lecture notes provided and can be downloaded from D2L course website. Recommended reference books: Pattern Recognition and Machine Learning - by C. M.

MS&E 226: Fundamentals of Data Science

MS&E 226: Fundamentals of Data Science. Lecture 1: Introduction. Ramesh Johari Important note: You can and should try out AI tools for anything in this.

LECTURE NOTES on PROGRAMMING & DATA STRUCTURE

Module 1: (10 Lectures). C Language Fundamentals Arrays and Strings. Character set

LECTURE NOTES ON DATA PREPARATION AND ANALYSIS

In terms of methodology big data analytics differs significantly from the traditional statistical approach of experimental design. Analytics starts with data.

Foundations of Data Science

04-Jan-2018 6 Algorithms for Massive Data Problems: Streaming Sketching

Data Science: Theories Models

and Analytics

Data Structures and Algorithms

Lecture Notes for. Data Structures and Algorithms. Revised each year by John Bullinaria. School of Computer Science. University of Birmingham.

LECTURE NOTES ON DATA STRUCTURES

Demonstrate several searching and sorting algorithms. III. Implement linear and non-linear data structures. IV. Demonstrate various tree and graph traversal

CS 391 E1 - Fall 19 - Foundations of Data Science – Syllabus

22-Oct-2019 Prediction methods e.g. regression and common measures. Piazza?(Q&A

STAT 1291: Data Science

This entire course is about doing data science using R. • Fridays classes (11 or 12 AM) will meet at STAT LAB (Posvar 1201) whenever possible. • We will begin

M.Sc. (IT) DATA SCIENCE

Course Co-ordinator. : Ms. Preeti Bharanuke. Assistant Professor M.Sc.(I.T.). Institute of Distance and Open Learning

Data Science and Machine Learning - Mathematical and Statistical

08-May-2022 write a book about data science and machine learning that can be ... fX(x) and fX

Probability and Statistics for Data Science

These notes were developed for the course Probability and Statistics for Data Science at the. Center for Data Science in NYU. The goal is to provide an

Foundations of Data Science - Department of Computer Science

Contents 1 Introduction 9 2 High-Dimensional Space 12 2 1 Introduction 12 2 2 The Law of Large

Foundations of Data Science

Avrim Blum, John Hopcroft, and Ravindran Kannan

Thursday 4th January, 2018

Copyright 2015. All rights reserved

Contents

1 Introduction

2 High-Dimensional Space

2.1 Introduction

2.2 The Law of Large Numbers

2.3 The Geometry of High Dimensions

2.4 Properties of the Unit Ball

2.4.1 Volume of the Unit Ball

2.4.2 Volume Near the Equator

2.5 Generating Points Uniformly at Random from a Ball

2.6 Gaussians in High Dimension

2.7 Random Projection and Johnson-Lindenstrauss Lemma

2.8 Separating Gaussians

2.9 Fitting a Spherical Gaussian to Data

2.10 Bibliographic Notes

2.11 Exercises

3 Best-Fit Subspaces and Singular Value Decomposition (SVD)

3.1 Introduction

3.2 Preliminaries

3.3 Singular Vectors

3.4 Singular Value Decomposition (SVD)

3.5 Best Rank-k Approximations

3.6 Left Singular Vectors

3.7 Power Method for Singular Value Decomposition

3.7.1 A Faster Method

3.8 Singular Vectors and Eigenvectors

3.9 Applications of Singular Value Decomposition

3.9.1 Centering Data

3.9.2 Principal Component Analysis

3.9.3 Clustering a Mixture of Spherical Gaussians

3.9.4 Ranking Documents and Web Pages

3.9.5 An Application of SVD to a Discrete Optimization Problem

3.10 Bibliographic Notes

3.11 Exercises

4 Random Walks and Markov Chains

4.1 Stationary Distribution

4.2 Markov Chain Monte Carlo

4.2.1 Metropolis-Hastings Algorithm

4.2.2 Gibbs Sampling

4.3 Areas and Volumes

4.4 Convergence of Random Walks on Undirected Graphs

4.4.1 Using Normalized Conductance to Prove Convergence

4.5 Electrical Networks and Random Walks

4.6 Random Walks on Undirected Graphs with Unit Edge Weights

4.7 Random Walks in Euclidean Space

4.8 The Web as a Markov Chain

4.9 Bibliographic Notes

4.10 Exercises

5 Machine Learning

5.1 Introduction

5.2 The Perceptron Algorithm

5.3 Kernel Functions

5.4 Generalizing to New Data

5.5 Overfitting and Uniform Convergence

5.6 Illustrative Examples and Occam's Razor

5.6.1 Learning Disjunctions

5.6.2 Occam's Razor

5.6.3 Application: Learning Decision Trees

5.7 Regularization: Penalizing Complexity

5.8 Online Learning

5.8.1 An Example: Learning Disjunctions

5.8.2 The Halving Algorithm

5.8.3 The Perceptron Algorithm

5.8.4 Extensions: Inseparable Data and Hinge Loss

5.9 Online to Batch Conversion

5.10 Support-Vector Machines

5.11 VC-Dimension

5.11.1 Definitions and Key Theorems

5.11.2 Examples: VC-Dimension and Growth Function

5.11.3 Proof of Main Theorems

5.11.4 VC-Dimension of Combinations of Concepts

5.11.5 Other Measures of Complexity

5.12 Strong and Weak Learning - Boosting

5.13 Stochastic Gradient Descent

5.14 Combining (Sleeping) Expert Advice

5.15 Deep Learning

5.15.1 Generative Adversarial Networks (GANs)

5.16 Further Current Directions

5.16.1 Semi-Supervised Learning

5.16.2 Active Learning

5.16.3 Multi-Task Learning

5.17 Bibliographic Notes

5.18 Exercises

6 Algorithms for Massive Data Problems: Streaming, Sketching, and Sampling

6.1 Introduction

6.2 Frequency Moments of Data Streams

6.2.1 Number of Distinct Elements in a Data Stream

6.2.2 Number of Occurrences of a Given Element

6.2.3 Frequent Elements

6.2.4 The Second Moment

6.3 Matrix Algorithms using Sampling

6.3.1 Matrix Multiplication using Sampling

6.3.2 Implementing Length Squared Sampling in Two Passes

6.3.3 Sketch of a Large Matrix

6.4 Sketches of Documents

6.5 Bibliographic Notes

6.6 Exercises

7 Clustering

7.1 Introduction

7.1.1 Preliminaries

7.1.2 Two General Assumptions on the Form of Clusters

7.1.3 Spectral Clustering

7.2 k-Means Clustering

7.2.1 A Maximum-Likelihood Motivation

7.2.2 Structural Properties of the k-Means Objective

7.2.3 Lloyd's Algorithm

7.2.4 Ward's Algorithm

7.2.5 k-Means Clustering on the Line

7.3 k-Center Clustering

7.4 Finding Low-Error Clusterings

7.5 Spectral Clustering

7.5.1 Why Project?

7.5.2 The Algorithm

7.5.3 Means Separated by Ω(1) Standard Deviations

7.5.4 Laplacians

7.5.5 Local Spectral Clustering

7.6 Approximation Stability

7.6.1 The Conceptual Idea

7.6.2 Making this Formal

7.6.3 Algorithm and Analysis

7.7 High-Density Clusters

7.7.1 Single Linkage

7.7.2 Robust Linkage

7.8 Kernel Methods

7.9 Recursive Clustering based on Sparse Cuts

7.10 Dense Submatrices and Communities

7.11 Community Finding and Graph Partitioning

7.12 Spectral Clustering Applied to Social Networks

7.13 Bibliographic Notes

7.14 Exercises

8 Random Graphs

8.1 The G(n, p) Model

8.1.1 Degree Distribution

8.1.2 Existence of Triangles in G(n, d/n)

8.2 Phase Transitions

8.3 Giant Component

8.3.1 Existence of a Giant Component

8.3.2 No Other Large Components

8.3.3 The Case of p < 1/n

8.4 Cycles and Full Connectivity

8.4.1 Emergence of Cycles

8.4.2 Full Connectivity

8.4.3 Threshold for O(ln n) Diameter

8.5 Phase Transitions for Increasing Properties

8.6 Branching Processes

8.7 CNF-SAT

8.7.1 SAT-Solvers in Practice

8.7.2 Phase Transitions for CNF-SAT

8.8 Nonuniform Models of Random Graphs

8.8.1 Giant Component in Graphs with Given Degree Distribution

8.9 Growth Models

8.9.1 Growth Model Without Preferential Attachment

8.9.2 Growth Model With Preferential Attachment

8.10 Small World Graphs

8.11 Bibliographic Notes

8.12 Exercises

9 Topic Models, Nonnegative Matrix Factorization, Hidden Markov Models, and Graphical Models

9.1 Topic Models

9.2 An Idealized Model

9.3 Nonnegative Matrix Factorization - NMF

9.4 NMF with Anchor Terms

9.5 Hard and Soft Clustering

9.6 The Latent Dirichlet Allocation Model for Topic Modeling

9.7 The Dominant Admixture Model

9.8 Formal Assumptions

9.9 Finding the Term-Topic Matrix

9.10 Hidden Markov Models

9.11 Graphical Models and Belief Propagation

9.12 Bayesian or Belief Networks

9.13 Markov Random Fields

9.14 Factor Graphs

9.15 Tree Algorithms

9.16 Message Passing in General Graphs

9.17 Graphs with a Single Cycle

9.18 Belief Update in Networks with a Single Loop

9.19 Maximum Weight Matching

9.20 Warning Propagation

9.21 Correlation Between Variables

9.22 Bibliographic Notes

9.23 Exercises

10 Other Topics

10.1 Ranking and Social Choice

10.1.1 Randomization

10.1.2 Examples

10.2 Compressed Sensing and Sparse Vectors

10.2.1 Unique Reconstruction of a Sparse Vector

10.2.2 Efficiently Finding the Unique Sparse Solution

10.3 Applications

10.3.1 Biological

10.3.2 Low Rank Matrices

10.4 An Uncertainty Principle

10.4.1 Sparse Vector in Some Coordinate Basis

10.4.2 A Representation Cannot be Sparse in Both Time and Frequency Domains

10.5 Gradient

10.6 Linear Programming

10.6.1 The Ellipsoid Algorithm

10.7 Integer Optimization

10.8 Semi-Definite Programming

10.9 Bibliographic Notes

10.10 Exercises

11 Wavelets

11.1 Dilation

11.2 The Haar Wavelet

11.3 Wavelet Systems

11.4 Solving the Dilation Equation

11.5 Conditions on the Dilation Equation

11.6 Derivation of the Wavelets from the Scaling Function

11.7 Sufficient Conditions for the Wavelets to be Orthogonal

11.8 Expressing a Function in Terms of Wavelets

11.9 Designing a Wavelet System

11.10 Applications

11.11 Bibliographic Notes

11.12 Exercises

12 Appendix

12.1 Definitions and Notation

12.2 Asymptotic Notation

12.3 Useful Relations

12.4 Useful Inequalities

12.5 Probability

12.5.1 Sample Space, Events, and Independence

12.5.2 Linearity of Expectation

12.5.3 Union Bound

12.5.4 Indicator Variables
