Noun Phrases

Adjectives. An adjective is a describing word. It tells you more about a noun: a black slug, the creepy beetles, a tiny ant, a difficult job.

UNIT 2 THE NOUN PHRASE

You will study the main types of nouns and the important aspects of noun phrases, such as gender and number. Finally, you will also understand how noun phrases are

NOUN PHRASE. A noun phrase is a group of words that modify

Important points to remember about noun phrases: (i) A noun phrase always has a noun. (ii) A noun phrase does not have an action verb. Only subjects have a verb.

Noun Phrase Coreference as Clustering

Given a description of each noun phrase and a method for measuring the distance between two noun phrases, a clustering algorithm can then group noun phrases
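The clustering idea can be sketched as follows. This is a minimal illustration under stated assumptions, not the cited paper's exact algorithm: the `NP` fields, the toy `distance` function, and the `radius` threshold are all hypothetical. NPs are visited from last to first, each is compared with every preceding NP, and two clusters merge when the pair is closer than the radius and no pair across the two clusters is incompatible (infinite distance).

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class NP:
    """Hypothetical NP description: surface text, head word, gender tag."""
    text: str
    head: str
    gender: str  # "m", "f", "n", or "?" when unknown


def distance(a: NP, b: NP) -> float:
    """Toy distance (an assumption): clashing genders are incompatible
    (infinite distance), identical heads are at 0, everything else at 1."""
    if "?" not in (a.gender, b.gender) and a.gender != b.gender:
        return math.inf
    if a.head.lower() == b.head.lower():
        return 0.0
    return 1.0


def cluster_nps(nps: list[NP], radius: float) -> list[set[int]]:
    """Greedily cluster NP indices: merge two clusters when the NP pair is
    closer than `radius` and no cross-cluster pair is incompatible."""
    cluster_of = list(range(len(nps)))           # NP index -> cluster id
    members = {i: {i} for i in range(len(nps))}  # cluster id -> NP indices

    def compatible(c1: int, c2: int) -> bool:
        return all(distance(nps[i], nps[j]) < math.inf
                   for i in members[c1] for j in members[c2])

    # Walk from the last NP back to the first, comparing each NP with
    # every NP that precedes it.
    for j in range(len(nps) - 1, -1, -1):
        for i in range(j - 1, -1, -1):
            ci, cj = cluster_of[i], cluster_of[j]
            if ci != cj and distance(nps[i], nps[j]) < radius and compatible(ci, cj):
                for k in members[cj]:
                    cluster_of[k] = ci
                members[ci] |= members.pop(cj)
    return list(members.values())


# Hypothetical toy input.
nps = [NP("John Hall", "Hall", "m"), NP("Mary Smith", "Smith", "f"),
       NP("he", "he", "m"), NP("she", "she", "f")]
print(cluster_nps(nps, radius=1.5))
```

With `radius=1.5`, the call groups "John Hall" with "he" and "Mary Smith" with "she"; with a radius below 1, nothing merges because no heads match. Because merges happen greedily, the result can depend on the order in which NPs are visited.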

Composing Noun Phrase Vector Representations

02-Aug-2019 · … component of a noun-noun phrase, i.e., the (syntactic) modifier
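The snippet singles out the (syntactic) modifier of a noun-noun phrase; the other component is the head. Two simple baseline ways to compose a phrase vector from the two component vectors are weighted addition and element-wise multiplication. The sketch below illustrates both with made-up vectors; it is not necessarily the composition method of the cited paper, and the weights `alpha` and `beta` are assumptions.

```python
def check_same_length(u: list[float], v: list[float]) -> None:
    """Composition is only defined for vectors of equal dimension."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same dimension")


def additive(modifier: list[float], head: list[float],
             alpha: float = 0.5, beta: float = 0.5) -> list[float]:
    """Weighted additive composition: alpha * modifier + beta * head."""
    check_same_length(modifier, head)
    return [alpha * m + beta * h for m, h in zip(modifier, head)]


def multiplicative(modifier: list[float], head: list[float]) -> list[float]:
    """Element-wise (Hadamard) product of modifier and head."""
    check_same_length(modifier, head)
    return [m * h for m, h in zip(modifier, head)]


# Hypothetical 3-dimensional vectors for the noun-noun phrase "kitchen table".
kitchen = [1.0, 0.0, 2.0]  # modifier
table = [0.5, 1.0, 1.0]    # head
print(additive(kitchen, table))        # [0.75, 0.5, 1.5]
print(multiplicative(kitchen, table))  # [0.5, 0.0, 2.0]
```

Addition treats modifier and head symmetrically when `alpha == beta`; unequal weights are one simple way to let the head dominate the phrase representation.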

Chapter 3 Noun Phrases Pronouns

A noun phrase is a noun or pronoun head and all of its modifiers (or the coordination of more than one NP, to be discussed in Chapter 6). Some nouns require the presence of a determiner as a modifier.

9 Phrases

However, given the typical textbook definition of a pronoun as a word that can replace either nouns or noun phrases

NOUN PHRASE

NOUN PHRASE. Lecturer: Dr. Ali Mustadi, M.Pd. NIP 19780710 200801 1 012. Phrase. Noun phrase formula (Rule 2). Note: explanation of rule no. 2: O Si A S C O M P (Opinion, Size, Age, Shape, Color, Origin, Material, Purpose).
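The O Si A S C O M P mnemonic (Opinion, Size, Age, Shape, Color, Origin, Material, Purpose) orders stacked adjectives before a noun, so it can be applied mechanically once each adjective has a category. A minimal sketch, assuming a hand-written lexicon: `CATEGORY_OF` is hypothetical and tiny, and the code does not handle a/an agreement or adjectives whose category depends on context.

```python
# Adjective categories in O Si A S C O M P order.
ORDER = ["opinion", "size", "age", "shape", "color", "origin", "material", "purpose"]
RANK = {category: rank for rank, category in enumerate(ORDER)}

# Hypothetical toy lexicon; real adjectives may need context to categorize.
CATEGORY_OF = {
    "lovely": "opinion", "big": "size", "old": "age", "round": "shape",
    "red": "color", "Italian": "origin", "wooden": "material", "dining": "purpose",
}


def order_adjectives(adjectives: list[str]) -> list[str]:
    """Sort adjectives by category rank; sorted() is stable, so adjectives of
    the same category keep their input order. Unknown words raise KeyError."""
    return sorted(adjectives, key=lambda adj: RANK[CATEGORY_OF[adj]])


def noun_phrase(determiner: str, adjectives: list[str], noun: str) -> str:
    """Build 'determiner + ordered adjectives + head noun'."""
    return " ".join([determiner, *order_adjectives(adjectives), noun])


print(noun_phrase("a", ["wooden", "lovely", "old", "round"], "table"))
# a lovely old round wooden table
```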

The Use of Participles and Gerunds

03-Jul-2020 · NP means a noun phrase. AmE means American English, and BrE

Conundrums in Noun Phrase Coreference Resolution: Making

Noun phrase coreference resolution is the process of determining whether two noun phrases (NPs) refer to the same real-world entity or concept. It is
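Because coreference groups NPs that refer to the same entity, pairwise "these two NPs corefer" decisions can be turned into entity clusters (coreference chains) by taking their transitive closure. The sketch below does this with a union-find structure. The mentions are a toy example and the pairwise links are hypothetical, not the output of any real resolver; whether a predicate nominal such as "the new CEO" counts as coreferent is itself an annotation decision.

```python
class UnionFind:
    """Disjoint-set forest with path halving."""

    def __init__(self, n: int) -> None:
        self.parent = list(range(n))

    def find(self, x: int) -> int:
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a: int, b: int) -> None:
        ra, rb = self.find(a), self.find(b)
        if ra != rb:
            self.parent[rb] = ra


def chains(mentions: list[str], links: list[tuple[int, int]]) -> list[list[str]]:
    """Group mentions into chains: the transitive closure of the pairwise links.
    Chains are returned in order of their first mention."""
    uf = UnionFind(len(mentions))
    for i, j in links:
        uf.union(i, j)
    groups: dict[int, list[str]] = {}
    for idx, mention in enumerate(mentions):
        groups.setdefault(uf.find(idx), []).append(mention)
    return list(groups.values())


mentions = ["John Hall", "the new CEO", "He", "Monday"]
links = [(0, 1), (1, 2)]  # hypothetical pairwise "coreferent" decisions
print(chains(mentions, links))
# [['John Hall', 'the new CEO', 'He'], ['Monday']]
```

The closure is what puts "John Hall" and "He" in one chain even though no link connects them directly; "Monday" ends up as a singleton chain.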

Parsing Noun Phrases in the Penn Treebank

The parsing of noun phrases (NPs) involves the same difficulties as … may never be found, even if the correct dominating noun phrase has been found. As an …

9 Phrases

Definition of phrase. Modification and complementation. Adverb phrases. Prepositional phrases. Adjective phrases. Noun phrases. Verb phrases introduction.

Noun phrase reference in Japanese-to-English machine translation

phrase is used not to refer to anything but rather normally with a copula verb

No Noun Phrase Left Behind: Detecting and Typing Unlinkable Entities

Entity linking systems link noun-phrase mentions in text to their corresponding Wikipedia articles. However, NLP applications would

Effects of Noun Phrase Bracketing in Dependency Parsing and

Jun 19, 2011 · …nal noun phrase annotation
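Noun phrase bracketing concerns the internal structure of multi-word NPs such as noun compounds, and that structure is often ambiguous: a compound of n words has C(n-1) binary bracketings, where C(k) is the k-th Catalan number counting from C(0) = 1 (so 2 bracketings for three words, 5 for four). A short recursive sketch that enumerates them; the example compound is illustrative only and the string output format is an assumption.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def bracketings(words: tuple[str, ...]) -> tuple[str, ...]:
    """All binary bracketings of a word sequence, as strings like '((a b) c)'."""
    if len(words) == 1:
        return (words[0],)
    results = []
    # Split between positions k-1 and k, then bracket each side recursively.
    for k in range(1, len(words)):
        for left in bracketings(words[:k]):
            for right in bracketings(words[k:]):
                results.append(f"({left} {right})")
    return tuple(results)


for b in bracketings(("crude", "oil", "prices")):
    print(b)
# (crude (oil prices))
# ((crude oil) prices)
```

A bracketing annotation picks exactly one of these trees per NP; here the left-branching "((crude oil) prices)" is the linguistically intended reading.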

Analyzing Embedded Noun Phrase Structures Derived from

Embedded sentences make modified noun phrases more expressive. In embedded noun phrase structures, a noun phrase modified by an embedded sentence is usually

Unsupervised Pronoun Resolution via Masked Noun-Phrase

Aug 1, 2021 · In this work

Noun Phrase Analysis in Large Unrestricted Text for Information

This paper reports on the application of a few simple yet robust and efficient noun-phrase analysis techniques to create better indexing phrases for
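One simple and robust kind of noun-phrase analysis is pattern-based chunking over part-of-speech tags. A minimal sketch, assuming the input is already tagged with Penn Treebank tags and using the hypothetical pattern "optional determiner, any adjectives or nouns, then a noun"; it is an illustration of the general idea, not the technique from the cited paper.

```python
import re


def tag_code(tag: str) -> str:
    """Map a Penn Treebank tag to a one-letter code so a regex can scan tags."""
    if tag == "DT":
        return "D"
    if tag.startswith("JJ"):  # JJ, JJR, JJS
        return "J"
    if tag.startswith("NN"):  # NN, NNS, NNP, NNPS
        return "N"
    return "O"


# Optional determiner, then adjectives/nouns, ending in a noun.
NP_PATTERN = re.compile(r"D?[JN]*N")


def chunk_nps(tagged: list[tuple[str, str]]) -> list[str]:
    """Return the word spans whose tag codes match NP_PATTERN."""
    codes = "".join(tag_code(tag) for _, tag in tagged)
    phrases = []
    for m in NP_PATTERN.finditer(codes):
        words = [word for word, _ in tagged[m.start():m.end()]]
        phrases.append(" ".join(words))
    return phrases


# Hypothetical pre-tagged input.
sentence = [("The", "DT"), ("new", "JJ"), ("CEO", "NN"), ("approved", "VBD"),
            ("the", "DT"), ("noun", "NN"), ("phrase", "NN"), ("index", "NN"), (".", ".")]
print(chunk_nps(sentence))
# ['The new CEO', 'the noun phrase index']
```

Encoding tags as single characters keeps regex match offsets aligned with token positions, which is why the span slicing works directly.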

Conundrums in Noun Phrase Coreference Resolution: Making

Conundrums in Noun Phrase Coreference Resolution: Making Sense of the State-of-the-Art. Veselin Stoyanov, Cornell University, Ithaca, NY, ves@cs.cornell.edu.

The English Noun Phrase - Cambridge

The English Noun Phrase: The Nature of Linguistic Categorization, by Evelien Keizer. Cambridge University Press, ISBN 978-0-521-18395-6. Frontmatter.

Chapter 3 Noun Phrases Pronouns

Jan 26, 2005 · A noun phrase is a noun or pronoun head and all of its modifiers (or the coordination of more than one NP, to be discussed in Chapter 6). Some nouns require the presence of a determiner as a modifier. Most pronouns are typically not modified at all, and no pronoun requires the presence of a determiner.

Noun Phrases - Carnegie Mellon University

Gender/Noun Class
• English has gender on third-person singular pronouns: "she" vs. "he".
• Genders may correspond to biological gender.
• But they extend to inanimate objects and become noun classes that aren't completely connected to biological gender.
  – In gendered languages, tables and chairs have gender.

UNIT 1: NOUNS Lesson 1: Identifying nouns

UNIT 1: NOUNS Lesson 1: Identifying nouns

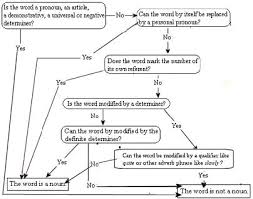

Nouns are commonly defined as words that refer to a person, place, thing, or idea. How can you identify a noun? Quick tip 1: If you can put the word "the" in front of a word and it sounds like a unit, the word is a noun.

Noun Phrase Structure - University at Buffalo

(iii) various sorts of noun phrases which lack a head noun. These three types are discussed in sections 1, 2, and 3, respectively. 1 Simple noun phrases. The most common noun phrases in many languages contain a single word, which is either a noun or a pronoun. In most, if not all, languages, pronouns generally occur alone in noun phrases, without

Recognize a noun phrase when you find one. A noun phrase includes a noun (a person, place, or thing) and the modifiers that distinguish it. You can find the noun "dog" in a sentence, for example, but you do not know which canine the writer means until you consider the entire noun phrase: that dog, Aunt

[PDF] Noun Phrase in English: Its Form Function and Distribution in Text

The aims are to find as many different noun phrases as possible and to give an overview of the similarities and differences in both languages. The noun + noun structure

[PDF] Nouns and Noun Phrases - OAPEN

26 Sep 2012 · Numeral Phrase. *) "Noun phrase" is written in full when the NP-DP distinction is not relevant. Symbols, abbreviations and conventions used in

[PDF] Noun Phrases

Nouns. A noun names a person, place, idea, thing, or feeling: a slug, the beetles, an ant, a job. In front of a noun we often have a, an, or the (determiners).

[PDF] The Noun Phrase - Grammar Bytes

A noun phrase includes a noun (a person, place, or thing) and the modifiers that distinguish it. You can find the noun "dog" in a sentence, for example, but you do

[PDF] Noun-phrases.pdf

13 Feb 2017 · This article covers number in noun phrases and in agreement between nouns and verbs. We will look at number in noun phrases first.

[PDF] 14 Noun Phrases in English

It is useful, to begin with, to recognize that English nouns fall into four classes: pronouns, proper nouns, count nouns, and mass nouns. Count nouns and mass

(PDF) The Noun Phrase - ResearchGate

25 Mar 2017 · On Jan 8, 2004, Jan Rijkhoff published The Noun Phrase.

the noun phrase: formal and functional perspectives - ResearchGate

Languages have syntactic units of different types and sizes. Some of these phrases are obligatory, such as the noun phrase and the verb phrase;

[PDF] 9 Phrases

In this chapter, we will present the three less complex types first: adverb, prepositional, and adjective. The reason for this seemingly backwards approach is

[PDF] Brief grammar 1-1.pdf

1 NOUN PHRASES: THE BASICS. 2 NOUNS. 2.1 Noun phrases headed by common nouns. A declarative sentence in Euskara contains a verb and its arguments

What is a noun phrase?

- A noun phrase is a noun or pronoun head and all of its modifiers (or the coordination of more than one NP, to be discussed in Chapter 6). Some nouns require the presence of a determiner as a modifier. Most pronouns are typically not modified at all, and no pronoun requires the presence of a determiner.

How do you recognize a noun phrase?

- Recognize a noun phrase when you find one. A noun phrase includes a noun (a person, place, or thing) and the modifiers that distinguish it.

Can nouns be modifiers?

- The most common way in which nouns occur as modifiers of nouns is in genitive constructions, in which it is really a noun phrase rather than just a noun that is modifying the head noun. These are discussed in section 2.1 below. However, some, but not all, languages allow nouns to modify nouns without possessive meaning.

What is a possessor phrase without a noun?

- the one [= wage] of those [workers] (literally: "the of those"). In fact, English also allows possessor phrases without a noun to function as noun phrases, as in (150). (150) Your car is nice, but John's is nicer.

Proceedings of the 47th Annual Meeting of the ACL and the 4th IJCNLP of the AFNLP, pages 656-664, Suntec, Singapore, 2-7 August 2009. © 2009 ACL and AFNLP

Conundrums in Noun Phrase Coreference Resolution: Making Sense of the State-of-the-Art

Veselin Stoyanov, Cornell University, Ithaca, NY, ves@cs.cornell.edu
Nathan Gilbert, University of Utah, Salt Lake City, UT, ngilbert@cs.utah.edu
Claire Cardie, Cornell University, Ithaca, NY, cardie@cs.cornell.edu
Ellen Riloff, University of Utah, Salt Lake City, UT, riloff@cs.utah.edu

Abstract

We aim to shed light on the state-of-the-art in NP coreference resolution by teasing apart the differences in the MUC and ACE task definitions, the assumptions made in evaluation methodologies, and inherent differences in text corpora. First, we examine three subproblems that play an important role in coreference resolution: named entity recognition, anaphoricity determination, and coreference element detection. We measure the impact of each subproblem on coreference resolution and confirm that certain assumptions regarding these subproblems in the evaluation methodology can dramatically simplify the overall task. Second, we measure the performance of a state-of-the-art coreference resolver on several classes of anaphora and use these results to develop a quantitative measure for estimating coreference resolution performance on new data sets.

1 Introduction

As is common for many natural language processing problems, the state-of-the-art in noun phrase (NP) coreference resolution is typically quantified based on system performance on manually annotated text corpora. In spite of the availability of several benchmark data sets (e.g. MUC-6 (1995), ACE NIST (2004)) and their use in many formal

evaluations, as a field we can make surprisingly few conclusive statements about the state-of-the-art in NP coreference resolution. In particular, it remains difficult to assess the effectiveness of different coreference resolution approaches, even in relative terms. For example, the 91.5 F-measure reported by McCallum and Wellner (2004) was produced by a system using perfect information for several linguistic subproblems. In contrast, the 71.3 F-measure reported by Yang et al. (2003) represents a fully automatic end-to-end resolver. It is impossible to assess which approach truly performs best because of the dramatically different assumptions of each evaluation.

Results vary widely across data sets. Coreference resolution scores range from 85-90% on the ACE 2004 and 2005 data sets to a much lower 60-70% on the MUC 6 and 7 data sets (e.g. Soon et al. (2001) and Yang et al. (2003)). What accounts for

these differences? Are they due to properties of the documents or domains? Or do differences in … differences in performance? Given a new text collection and domain, what level of performance should we expect?

We have little understanding of which aspects of the coreference resolution problem are handled well or poorly by state-of-the-art systems. Except for some fairly general statements, for example that proper names are easier to resolve than pronouns, which are easier than common nouns, there has been little analysis of which aspects of the problem have achieved success and which remain elusive.

The goal of this paper is to take initial steps toward making sense of the disparate performance results reported for NP coreference resolution. For our investigations, we employ a state-of-the-art classification-based NP coreference resolver and focus on the widely used MUC and ACE coreference resolution data sets.

We hypothesize that performance variation

within and across coreference resolvers is, at least in part, a function of (1) the (sometimes unstated) assumptions in evaluation methodologies, and (2) the relative difficulty of the benchmark text corpora. With these in mind, Section 3 first examines three subproblems that play an important role in coreference resolution: named entity recognition, anaphoricity determination, and coreference element detection. We quantitatively measure the impact of each of these subproblems on coreference resolution performance as a whole. Our results suggest that the availability of accurate detectors for anaphoricity or coreference elements could substantially improve the performance of state-of-the-art resolvers, while improvements to named entity recognition likely offer little gain. Our results also confirm that the assumptions adopted in some evaluations dramatically simplify the resolution task, rendering it an unrealistic surrogate for the original problem.

|                     | MUC | ACE              |
|---------------------|-----|------------------|
| Relative pronouns   | no  | yes              |
| Gerunds             | no  | yes              |
| Nested non-NP nouns | yes | no               |
| Nested NEs          | no  | GPE & LOC premod |
| Semantic types      | all | 7 classes only   |
| Singletons          | no  | yes              |

Table 1: Coreference definition differences for MUC and ACE. (GPE refers to geo-political entities.)

In Section 4, we quantify the difficulty of a text corpus with respect to coreference resolution by analyzing performance on different resolution classes. Our goals are twofold: to measure the level of performance of state-of-the-art coreference resolvers on different types of anaphora, and to develop a quantitative measure for estimating coreference resolution performance on new data sets. We introduce a coreference performance prediction (CPP) measure and show that it accurately predicts the performance of our coreference resolver. As a side effect of our research, we provide a new set of much-needed benchmark results for coreference resolution under common sets of fully-specified evaluation assumptions.

2 Coreference Task Definitions

This paper studies the six most commonly used coreference resolution data sets. Two of those are from the MUC conferences (MUC-6, 1995; MUC-7, 1997) and four are from the Automatic Content Evaluation (ACE) Program (NIST, 2004). In this section, we outline the differences between the MUC and ACE coreference resolution tasks, and

define terminology for the rest of the paper.

Noun phrase coreference resolution is the process of determining whether two noun phrases (NPs) refer to the same real-world entity or concept. It is related to anaphora resolution: an NP is said to be anaphoric if it depends on another NP for interpretation. Consider the following:

John Hall is the new CEO. He starts on Monday.

Here, he is anaphoric because it depends on its antecedent, John Hall, for interpretation. The two NPs also corefer because each refers to the same person, JOHN HALL.

As discussed in depth elsewhere (e.g. van Deemter and Kibble (2000)), the notions of coreference and anaphora are difficult to define precisely and to operationalize consistently. Furthermore, the connections between them are extremely complex and go beyond the scope of this paper. Given these complexities, it is not surprising that the annotation instructions for the MUC and ACE data sets reflect different interpretations and simplifications of the general coreference relation. We outline some of these differences below.

Syntactic Types. To avoid ambiguity, we will

use the term coreference element (CE) to refer to the set of linguistic expressions that participate in the coreference relation, as defined for each of the MUC and ACE tasks.¹ At times, it will be important to distinguish the CEs that are included in the gold standard (the annotated CEs) from those that are generated by the coreference resolution system (the extracted CEs).

At a high level, both the MUC and ACE evaluations define CEs as nouns, pronouns, and noun phrases. However, the MUC definition excludes (1) "nested" named entities (NEs) (e.g. "America" in "Bank of America"), (2) relative pronouns, and (3) gerunds, but allows (4) nested nouns (e.g. "union" in "union members"). The ACE definition, on the other hand, includes relative pronouns and gerunds, excludes all nested nouns that are not themselves NPs, and allows premodifier NE mentions of geo-political entities and locations, such as "Russian" in "Russian politicians".

Semantic Types. ACE restricts CEs to entities

that belong to one of seven semantic classes: person, organization, geo-political entity, location, facility, vehicle, and weapon. MUC has no semantic restrictions.

Singletons. The MUC data sets include annotations only for CEs that are coreferent with at least one other CE. ACE, on the other hand, permits "singleton" CEs, which are not coreferent with any other CE in the document.

These substantial differences in the task definitions (summarized in Table 1) make it extremely difficult to compare performance across the MUC and ACE data sets. In the next section, we take a closer look at the coreference resolution task, analyzing the impact of various subtasks irrespective of the data set differences.

¹ We define the term CE to be roughly equivalent to (a) the notion of markable in the MUC coreference resolution definition and (b) the structures that can be mentions in the descriptions of ACE.

3 Coreference Subtask Analysis

Coreference resolution is a complex task that requires solving numerous non-trivial subtasks such as syntactic analysis, semantic class tagging, pleonastic pronoun identification and antecedent identification, to name a few. This section examines the role of three such subtasks (named entity recognition, anaphoricity determination, and coreference element detection) in the performance of an end-to-end coreference resolution system. First, however, we describe the coreference resolver that we use for our study.

3.1 The RECONCILE_ACL09 Coreference Resolver

We use the RECONCILE coreference resolution platform (Stoyanov et al., 2009) to configure a coreference resolver that performs comparably to state-of-the-art systems (when evaluated on the MUC and ACE data sets under comparable assumptions). This system is a classification-based coreference resolver, modeled after the systems of Ng and Cardie (2002b) and Bengtson and Roth (2008). First it classifies pairs of CEs as coreferent or not coreferent, pairing each identified CE with all preceding CEs. The CEs are then clustered into coreference chains² based on the pairwise decisions. RECONCILE has a pipeline architecture with four main steps: preprocessing, feature extraction, classification, and clustering. We will refer to the specific configuration of RECONCILE used for this paper as RECONCILE_ACL09.

Preprocessing. The RECONCILE_ACL09 preprocessor applies a series of language analysis tools (mostly publicly available software packages) to the source texts. The OpenNLP toolkit (Baldridge, J., 2005) performs tokenization, sentence splitting,

and part-of-speech tagging. The Berkeley parser (Petrov and Klein, 2007) generates phrase structure parse trees, and the de Marneffe et al. (2006) system produces dependency relations. We employ the Stanford CRF-based Named Entity Recognizer (Finkel et al., 2004) for named entity tagging. With these preprocessing components, RECONCILE_ACL09 uses heuristics to correctly extract approximately 90% of the annotated CEs for the MUC and ACE data sets.

Feature Set. To achieve roughly state-of-the-art performance, RECONCILE_ACL09 employs a …

² A coreference chain refers to the set of CEs that refer to a particular entity.

| Dataset | Docs | CEs  | Chains | CEs/ch | Train/test split |
|---------|------|------|--------|--------|------------------|
| MUC6    | 60   | 4232 | 960    | 4.4    | 30/30 (st)       |
| MUC7    | 50   | 4297 | 1081   | 3.9    | 30/20 (st)       |
| ACE-2   | 159  | 2630 | 1148   | 2.3    | 130/29 (st)      |
| ACE03   | 105  | 3106 | 1340   | 2.3    | 74/31            |
| ACE04   | 128  | 3037 | 1332   | 2.3    | 90/38            |
| ACE05   | 81   | 1991 | 775    | 2.6    | 57/24            |

Table 2: Dataset characteristics including the number of documents, annotated CEs, coreference chains, annotated CEs per chain, and train/test split.
