Appendix N: Derivation of the Logarithm Change of Base Formula

We set out to prove the logarithm change of base formula: $\log_b x = \dfrac{\log_a x}{\log_a b}$. To do so we let $y = \log_b x$, rewrite this in exponential form as $b^y = x$, and take the logarithm to base $a$ of both sides.
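The steps just described can be written out as a short worked derivation. This is a sketch of the standard argument, assuming $a, b > 0$, $a \ne 1$, $b \ne 1$ and $x > 0$ (these conditions are not spelled out in the text above):

```latex
\begin{align*}
y &= \log_b x                      && \text{(definition of } y\text{)} \\
b^{y} &= x                         && \text{(rewrite in exponential form)} \\
\log_a\!\left(b^{y}\right) &= \log_a x && \text{(take } \log_a \text{ of both sides)} \\
y \,\log_a b &= \log_a x           && \text{(power rule)} \\
y &= \frac{\log_a x}{\log_a b}     && \text{(divide by } \log_a b \neq 0\text{)}
\end{align*}
```

Since $y = \log_b x$, the last line is the change of base formula. The division is valid because $b \ne 1$ implies $\log_a b \ne 0$.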

MATHEMATICS 0110A CHANGE OF BASE Suppose that we have

Let $y = \log_b a$. Then we know that this means that $b^y = a$. We can take logarithms to base $c$

Change of Base

6.2 Properties of Logarithms

out the inverse relationship between these two change of base formulas. To change the base of Prove the Quotient Rule and Power Rule for Logarithms.

Lesson 5-2 - Using Properties and the Change of Base Formula

You can prove the Change of Base Formula using $b^{\log_b x} = x$, because exponents and logarithms are inverses. Take the log base a of both sides: log

Elementary Functions The logarithm as an inverse function

The positive constant b is called the base (of the logarithm.) Smith (SHSU) Let's call this the “change of base” equation or “change of base” property.

Introduction to Algorithms

I can prove this using the definition of big-Omega: This tells us that every positive power of the logarithm of n to the base b where b > 1

cs lect fall notes

3.3 The logarithm as an inverse function

Let's call this the “change of base” equation. Example. Suppose we want to compute log2(17) but our calculator only allows us to use the natural logarithm ln.

Lecture Notes . Logarithms

CS 161 Lecture 3 - 1 Solving recurrences

n ≥ 2 and the base cases of the induction proof (which is not the same as Plugging the numbers into the recurrence formula

lecture

Logarithms Math 121 Calculus II

Proof. By the inverse of the Fundamental Theorem of Calculus since lnx is defined as an In particular

logs

Section 4.3 Logarithmic Functions

expression. Properties of Logs: Change of Base. Proof: Let . Rewriting as an exponential gives . Taking the log base c of both sides of this equation gives.

logarithms

CS 161 Lecture 3 Jessica Su (some parts copied from CLRS)

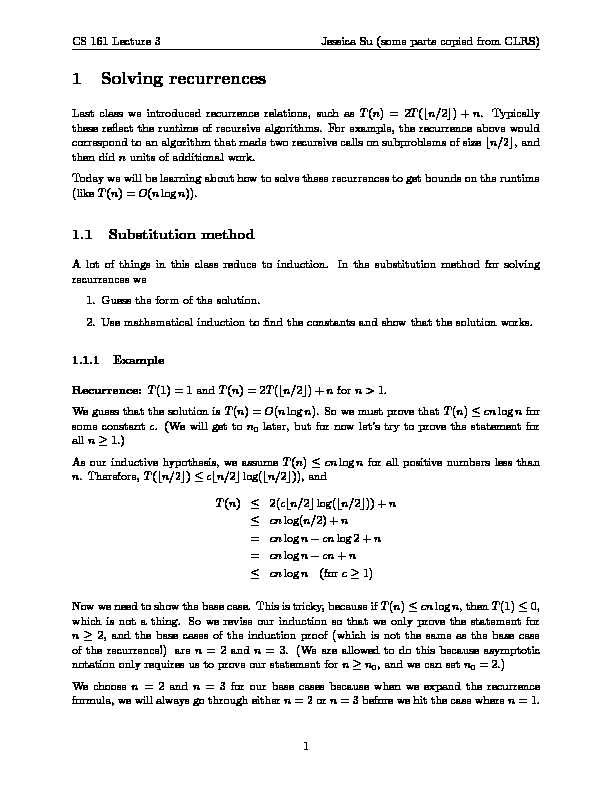

1 Solving recurrences

Last class we introduced recurrence relations, such as $T(n) = 2T(\lfloor n/2 \rfloor) + n$. Typically these reflect the runtime of recursive algorithms. For example, the recurrence above would correspond to an algorithm that made two recursive calls on subproblems of size $\lfloor n/2 \rfloor$, and then did $n$ units of additional work. Today we will be learning about how to solve these recurrences to get bounds on the runtime (like $T(n) = O(n \log n)$).

1.1 Substitution method

A lot of things in this class reduce to induction. In the substitution method for solving recurrences we

1. Guess the form of the solution.

2. Use mathematical induction to find the constants and show that the solution works.

1.1.1 Example

Recurrence: $T(1) = 1$ and $T(n) = 2T(\lfloor n/2 \rfloor) + n$ for $n > 1$.

We guess that the solution is $T(n) = O(n \log n)$. So we must prove that $T(n) \le cn \log n$ for some constant $c$. (We will get to $n_0$ later, but for now let's try to prove the statement for all $n \ge 1$.)

As our inductive hypothesis, we assume $T(m) \le cm \log m$ for all positive integers $m$ less than $n$. Therefore, $T(\lfloor n/2 \rfloor) \le c \lfloor n/2 \rfloor \log(\lfloor n/2 \rfloor)$, and, taking logarithms to base 2,

$$
\begin{aligned}
T(n) &\le 2\left(c \lfloor n/2 \rfloor \log(\lfloor n/2 \rfloor)\right) + n \\
     &\le cn \log(n/2) + n \\
     &= cn \log n - cn \log 2 + n \\
     &= cn \log n - cn + n \\
     &\le cn \log n \qquad (\text{for } c \ge 1).
\end{aligned}
$$

Now we need to show the base case. This is tricky, because if $T(n) \le cn \log n$, then $T(1) \le 0$, which cannot hold since $T(1) = 1$. So we revise our induction so that we only prove the statement for $n \ge 2$, and the base cases of the induction proof (which is not the same as the base case of the recurrence!) are $n = 2$ and $n = 3$. (We are allowed to do this because asymptotic notation only requires us to prove our statement for $n \ge n_0$, and we can set $n_0 = 2$.)

We choose $n = 2$ and $n = 3$ for our base cases because when we expand the recurrence formula, we will always go through either $n = 2$ or $n = 3$ before we hit the case where $n = 1$. So proving the inductive step as above, plus proving the bound works for $n = 2$ and $n = 3$, suffices for our proof that the bound works for all $n > 1$.

Plugging the numbers into the recurrence formula, we get $T(2) = 2T(1) + 2 = 4$ and $T(3) = 2T(1) + 3 = 5$. So now we just need to choose a $c$ that satisfies those constraints on $T(2)$ and $T(3)$. We can choose $c = 2$, because $4 \le 2 \cdot 2 \log 2$ and $5 \le 2 \cdot 3 \log 3$.

Therefore, we have shown that $T(n) \le 2n \log n$ for all $n \ge 2$, so $T(n) = O(n \log n)$.

1.1.2 Warnings
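The bound $T(n) \le 2n \log n$ from the substitution example can be checked numerically, and the same computation shows why no bound of the form $T(n) \le cn$ can hold for this recurrence. The following is a minimal sketch, not part of the original notes; the function name `T`, the base-2 logarithm, and the test range up to 10,000 are assumptions made for illustration.

```python
import math
from functools import lru_cache


@lru_cache(maxsize=None)
def T(n: int) -> int:
    """T(1) = 1 and T(n) = 2*T(floor(n/2)) + n for n > 1."""
    if n == 1:
        return 1
    return 2 * T(n // 2) + n


# Every n in [2, 10000] where T(n) exceeds 2 * n * log2(n).
# The induction proof says this list should be empty.
violations = [n for n in range(2, 10_001) if T(n) > 2 * n * math.log2(n)]

# T(n) / n at n = 1, 2, 4, ..., 1024. If T(n) <= c*n held for some
# constant c, these ratios would stay bounded; instead they keep growing.
ratios = [T(2**k) // 2**k for k in range(11)]

print("T(2), T(3):", T(2), T(3))
print("violations of T(n) <= 2 n log2 n:", violations)
print("T(n)/n at powers of two:", ratios)
```

At powers of two the recurrence gives exactly $T(2^k) = 2^k (k + 1)$, so the ratio $T(n)/n$ equals $\log_2 n + 1$ and grows without bound.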

Warning: Using the substitution method, it is easy to prove a weaker bound than the one you're supposed to prove. For instance, if the runtime is $O(n)$, you might still be able to substitute $cn^2$ into the recurrence and prove that the bound is $O(n^2)$. Which is technically true, but don't let it mislead you into thinking it's the best bound on the runtime. People often get burned by this on exams!

Warning: You must prove the exact form of the induction hypothesis. For example, in the recurrence $T(n) = 2T(\lfloor n/2 \rfloor) + n$, we could falsely "prove" $T(n) = O(n)$ by guessing $T(n) \le cn$ and then arguing $T(n) \le 2(c \lfloor n/2 \rfloor) + n \le cn + n = O(n)$. Here we needed to prove $T(n) \le cn$, not $T(n) \le (c + 1)n$. Accumulated over many recursive calls, those "plus ones" add up.

1.2 Recursion tree

A recursion tree is a tree where each node represents the cost of a certain recursive subproblem. Then you can sum up the numbers in each node to get the cost of the entire algorithm.

Note: We would usually use a recursion tree to generate possible guesses for the runtime, and then use the substitution method to prove them. However, if you are very careful when drawing out a recursion tree and summing the costs, you can actually use a recursion tree as a direct proof of a solution to a recurrence.

If we are only using recursion trees to generate guesses and not prove anything, we can tolerate a certain amount of "sloppiness" in our analysis. For example, we can ignore floors and ceilings when solving our recurrences, as they usually do not affect the final guess.

1.2.1 Example

Recurrence: $T(n) = 3T(\lfloor n/4 \rfloor) + \Theta(n^2)$

We drop the floors and write a recursion tree for $T(n) = 3T(n/4) + cn^2$.

The top node has cost $cn^2$, because the first call to the function does $cn^2$ units of work, aside from the work done inside the recursive subcalls. The nodes on the second layer all have cost $c(n/4)^2$, because the functions are now being called on problems of size $n/4$, and the functions are doing $c(n/4)^2$ units of work, aside from the work done inside their recursive subcalls, etc. The bottom layer (base case) is special because each of its nodes contributes $T(1)$ to the cost.

Analysis: First we find the height of the recursion tree. Observe that a node at depth i re…
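The layer-by-layer costs described above can be tabulated with a few lines of code. This is a rough sketch for generating a guess, not a proof and not taken from the notes; it assumes that $n$ is a power of 4, that floors are ignored, and that the three recursive calls per node give $3^i$ nodes at depth $i$. The name `level_costs` and the default $c = 1$ are hypothetical.

```python
def level_costs(n: int, c: int = 1) -> list[int]:
    """Total non-recursive work on each internal level of the recursion
    tree for T(n) = 3*T(n/4) + c*n^2, assuming n is a power of 4.

    The leaf level (subproblems of size 1) is excluded, because each
    leaf contributes T(1) rather than c * size^2.
    """
    costs = []
    nodes, size = 1, n
    while size > 1:
        costs.append(nodes * c * size**2)  # nodes at this depth, each costing c*size^2
        nodes *= 3   # every node makes three recursive calls
        size //= 4   # each call is on a subproblem a quarter of the size
    return costs


costs = level_costs(4**5)
for depth, cost in enumerate(costs):
    print(depth, cost, cost / costs[0])
```

Each level's total is $3/16$ of the level above it, so the per-level costs form a decreasing geometric series dominated by the top node's $cn^2$.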
