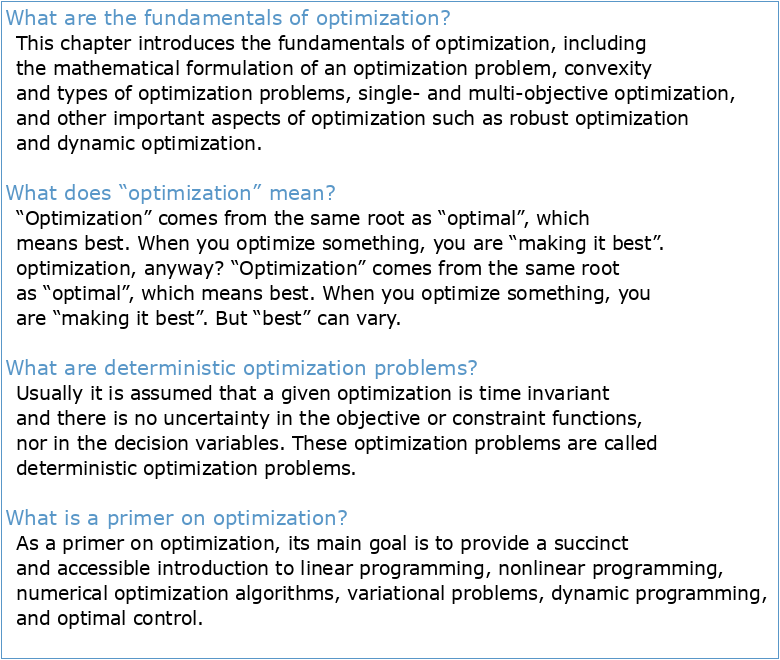

What are the fundamentals of optimization?

This chapter introduces the fundamentals of optimization, including the mathematical formulation of an optimization problem, convexity and types of optimization problems, single- and multi-objective optimization, and other important aspects of optimization such as robust optimization and dynamic optimization.

What does “optimization” mean?

“Optimization” comes from the same root as “optimal”, which means best. When you optimize something, you are “making it best”. But what counts as “best” can vary from one problem to another.

What are deterministic optimization problems?

Usually it is assumed that a given optimization problem is time-invariant and that there is no uncertainty in the objective or constraint functions, nor in the decision variables. Such problems are called deterministic optimization problems.

What is a primer on optimization?

A primer on optimization aims to provide a succinct and accessible introduction to linear programming, nonlinear programming, numerical optimization algorithms, variational problems, dynamic programming, and optimal control.

1 Local Optimisation

Local optimisation algorithms are exact methods that guarantee convergence to a local optimum within a search neighbourhood. They are the most investigated optimisation techniques and have their roots in the calculus of variations and the work of Euler and Lagrange. The development of linear programming dates back to the 1940s, and it was the …
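
The local nature of such methods can be seen in a small experiment. This is a minimal sketch that uses plain fixed-step gradient descent, swapped in here as a simple example of a local method (the text does not name it). It runs on a hypothetical one-dimensional function with two local minima. Started in different neighbourhoods, the method converges to different minima, and only one of them is global.

```python
def f(x: float) -> float:
    # Hypothetical 1-D objective with two local minima (near -1.30 and 1.13)
    return x**4 - 3.0 * x**2 + x


def df(x: float) -> float:
    # Analytic derivative of f
    return 4.0 * x**3 - 6.0 * x + 1.0


def gradient_descent(x0: float, step: float = 0.01, tol: float = 1e-10,
                     max_iter: int = 10_000) -> float:
    """Fixed-step gradient descent; stops when |f'(x)| < tol or after max_iter steps."""
    x = x0
    for _ in range(max_iter):
        g = df(x)
        if abs(g) < tol:
            break
        x -= step * g  # move against the gradient
    return x


for start in (-2.0, 2.0):
    x_min = gradient_descent(start)
    print(f"start {start:+.1f} -> x = {x_min:+.4f}, f(x) = {f(x_min):+.4f}")
```

Starting at -2 reaches the global minimum near -1.30. Starting at 2 stops at the merely local minimum near 1.13, because the method only uses information from its current neighbourhood.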

Definition 7.2.1

The real function $\mathcal{L}: \Omega \times \mathbb{R}^m \rightarrow \mathbb{R}$ defined as … is the Lagrangian, and the coefficients $\lambda \in \mathbb{R}^m$ are called Lagrange multipliers. See full list on link.springer.com
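
The defining formula of the Lagrangian is not reproduced above. The sketch below therefore assumes one common convention: minimise $f(\mathbf{x})$ subject to inequality constraints $c_i(\mathbf{x}) \geq 0$, with $\mathcal{L}(\mathbf{x}, \lambda) = f(\mathbf{x}) - \sum_i \lambda_i c_i(\mathbf{x})$. Other texts use a plus sign or $\leq$ constraints. The helper `lagrangian` and the example problem are hypothetical.

```python
from typing import Callable, Sequence

Vector = Sequence[float]
Function = Callable[[Vector], float]


def lagrangian(f: Function, constraints: Sequence[Function],
               x: Vector, lam: Sequence[float]) -> float:
    """Assumed convention: L(x, lam) = f(x) - sum_i lam_i * c_i(x), with c_i(x) >= 0."""
    if len(constraints) != len(lam):
        raise ValueError("expected one multiplier per constraint")
    return f(x) - sum(lam_i * c_i(x) for lam_i, c_i in zip(lam, constraints))


# Hypothetical problem: minimise x1^2 + x2^2 subject to c1(x) = x1 + x2 - 1 >= 0
def f(x: Vector) -> float:
    return x[0] ** 2 + x[1] ** 2


def c1(x: Vector) -> float:
    return x[0] + x[1] - 1.0


print(lagrangian(f, [c1], [0.5, 0.5], [1.0]))  # 0.5 (the constraint term vanishes)
print(lagrangian(f, [c1], [1.0, 1.0], [1.0]))  # 1.0 = 2.0 - 1.0 * 1.0
```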

Definition 7.2.2

Given a point $\mathbf{x}$ in the feasible region, the active set $\mathcal{A}(\mathbf{x})$ is defined as …, where $\mathcal{I} = \{1, \ldots, m\}$ is the index set of the constraints.
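
The active set can also be computed numerically. This minimal sketch assumes that every constraint is an inequality $c_i(\mathbf{x}) \geq 0$ and that constraint $i$ is active when $c_i(\mathbf{x}) = 0$. Since exact zeros are rare in floating point, the test uses an assumed tolerance `tol`. Indices are 1-based to match $\mathcal{I} = \{1, \ldots, m\}$. The function name and the triangle-shaped region are hypothetical.

```python
from typing import Callable, Sequence, Set

Vector = Sequence[float]


def active_set(constraints: Sequence[Callable[[Vector], float]],
               x: Vector, tol: float = 1e-9) -> Set[int]:
    """Return the 1-based indices i with |c_i(x)| <= tol (x is assumed feasible)."""
    return {i for i, c in enumerate(constraints, start=1) if abs(c(x)) <= tol}


# Hypothetical feasible region x1 >= 0, x2 >= 0, x1 + x2 <= 1, written as c_i(x) >= 0
constraints = [
    lambda x: x[0],               # c1
    lambda x: x[1],               # c2
    lambda x: 1.0 - x[0] - x[1],  # c3
]

print(active_set(constraints, [0.25, 0.25]))  # set(): an interior point
print(active_set(constraints, [0.0, 0.5]))    # {1}: on one edge
print(active_set(constraints, [1.0, 0.0]))    # {2, 3}: a vertex
```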

Definition 7.2.3

The linear independence constraint qualification (LICQ) holds if the set of active constraint gradients $\{\nabla c_i(\mathbf{x}),\ i \in \mathcal{A}(\mathbf{x})\}$ is linearly independent. Note that if this condition holds, none of the active constraint gradients can be zero.
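
LICQ can be checked numerically. Stack the active constraint gradients as rows and compare the matrix rank with the number of active constraints. The sketch below is a hypothetical, dependency-free helper that uses Gaussian elimination with partial pivoting and an assumed tolerance. In practice a library routine such as NumPy's `numpy.linalg.matrix_rank` would usually be preferred.

```python
from typing import Sequence


def matrix_rank(rows: Sequence[Sequence[float]], tol: float = 1e-10) -> int:
    """Rank via Gaussian elimination with partial pivoting (illustrative, not robust)."""
    m = [[float(v) for v in row] for row in rows]
    if not m:
        return 0
    n_cols = len(m[0])
    rank = 0
    for col in range(n_cols):
        if rank == len(m):
            break
        # Choose the remaining row with the largest entry in this column
        pivot = max(range(rank, len(m)), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) <= tol:
            continue  # no usable pivot in this column
        m[rank], m[pivot] = m[pivot], m[rank]
        for r in range(rank + 1, len(m)):
            factor = m[r][col] / m[rank][col]
            for k in range(col, n_cols):
                m[r][k] -= factor * m[rank][k]
        rank += 1
    return rank


def licq_holds(active_gradients: Sequence[Sequence[float]]) -> bool:
    """LICQ: the active constraint gradients are linearly independent."""
    if not active_gradients:
        return True  # no active constraints: vacuously satisfied
    return matrix_rank(active_gradients) == len(active_gradients)


print(licq_holds([[0.0, 1.0], [-1.0, -1.0]]))  # True
print(licq_holds([[1.0, 0.0], [2.0, 0.0]]))    # False: parallel gradients
print(licq_holds([[0.0, 0.0]]))                # False: a zero gradient
```

A zero gradient lowers the rank, so the last check fails. This matches the remark that no active gradient can vanish when LICQ holds.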

Theorem 7.2.1

Suppose that $\mathbf{x}^*$ is a local solution of the constrained nonlinear programming (NLP) problem and that the LICQ holds at $\mathbf{x}^*$. Then a Lagrange multiplier vector $\lambda^*$ exists such that the following conditions are satisfied at the point $(\mathbf{x}^*, \lambda^*)$: … These conditions are known as the Karush-Kuhn-Tucker (KKT) conditions.
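
The conditions themselves are not reproduced above, so this sketch assumes the standard inequality-only form for $\min f(\mathbf{x})$ subject to $c_i(\mathbf{x}) \geq 0$:

- stationarity: $\nabla f(\mathbf{x}^*) - \sum_i \lambda_i^* \nabla c_i(\mathbf{x}^*) = 0$;
- primal feasibility: $c_i(\mathbf{x}^*) \geq 0$;
- dual feasibility: $\lambda_i^* \geq 0$;
- complementarity: $\lambda_i^* c_i(\mathbf{x}^*) = 0$.

The helper `kkt_satisfied`, its tolerance, and the test problem are hypothetical.

```python
from typing import Callable, Sequence

Vector = Sequence[float]


def kkt_satisfied(grad_f: Callable[[Vector], Vector],
                  constraints: Sequence[Callable[[Vector], float]],
                  grad_constraints: Sequence[Callable[[Vector], Vector]],
                  x: Vector, lam: Sequence[float], tol: float = 1e-8) -> bool:
    """Check the four KKT conditions for min f(x) s.t. c_i(x) >= 0 (assumed form)."""
    # Stationarity: grad f(x) - sum_i lam_i * grad c_i(x) = 0
    residual = list(grad_f(x))
    for lam_i, grad_c in zip(lam, grad_constraints):
        g = grad_c(x)
        for j in range(len(residual)):
            residual[j] -= lam_i * g[j]
    stationary = all(abs(r) <= tol for r in residual)
    # Primal feasibility: c_i(x) >= 0
    primal = all(c(x) >= -tol for c in constraints)
    # Dual feasibility: lam_i >= 0
    dual = all(lam_i >= -tol for lam_i in lam)
    # Complementarity: lam_i * c_i(x) = 0
    complementary = all(abs(lam_i * c(x)) <= tol for lam_i, c in zip(lam, constraints))
    return stationary and primal and dual and complementary


# Hypothetical problem: minimise x1^2 + x2^2 subject to x1 + x2 - 1 >= 0
def grad_f(x: Vector) -> Vector:
    return [2.0 * x[0], 2.0 * x[1]]


def c1(x: Vector) -> float:
    return x[0] + x[1] - 1.0


def grad_c1(x: Vector) -> Vector:
    return [1.0, 1.0]


print(kkt_satisfied(grad_f, [c1], [grad_c1], [0.5, 0.5], [1.0]))   # True
print(kkt_satisfied(grad_f, [c1], [grad_c1], [1.0, 1.0], [0.0]))   # False: not stationary
print(kkt_satisfied(grad_f, [c1], [grad_c1], [0.5, 0.5], [-1.0]))  # False
```

For this problem, $\mathbf{x}^* = (0.5, 0.5)$ with $\lambda^* = 1$ passes all four checks. A negative multiplier fails both stationarity and dual feasibility.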

Remark 7.2.1

The last condition implies that the Lagrange multipliers corresponding to inactive inequality constraints are zero; hence it is possible to rewrite the first equation as … The optimality condition presented above gives information on how the derivatives of the objective and the constraints are related at the minimum point $\mathbf{x}^*$. Another fundamental first-order …
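
One way to spell out this step is sketched below. It assumes the inequality-only convention $c_i(\mathbf{x}) \geq 0$ with $\mathcal{L} = f - \sum_i \lambda_i c_i$; the source's own form of the equation may differ. An inactive constraint has $c_i(\mathbf{x}^*) > 0$, so complementarity forces its multiplier to zero, and only the active terms survive in the stationarity condition:

```latex
i \notin \mathcal{A}(\mathbf{x}^*) \;\Rightarrow\; c_i(\mathbf{x}^*) > 0
  \;\Rightarrow\; \lambda_i^* = 0 \quad \bigl(\text{since } \lambda_i^* c_i(\mathbf{x}^*) = 0\bigr),
\qquad \text{hence} \qquad
\nabla f(\mathbf{x}^*) = \sum_{i \in \mathcal{A}(\mathbf{x}^*)} \lambda_i^* \, \nabla c_i(\mathbf{x}^*).
```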

Definition 7.2.4

Given a feasible point $\mathbf{x} \in D$, a sequence $\{\mathbf{x}_k\}_{k=0}^{\infty}$ with $\mathbf{x}_k \in \Omega$ is a feasible sequence if, for all $k \in \mathbb{N}$, $\mathbf{x}_k \in D \setminus \{\mathbf{x}^*\}$ and … Given a feasible sequence, the set of the limiting directions $\mathbf{w} \in \Omega \setminus \{\mathbf{0}\}$ is called the cone of the feasible directions, $C(\mathbf{x})$. Moving along any vector of this cone (with vertex in a loc…
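
A small numeric sketch shows why the cone is built from limiting directions rather than from straight feasible moves. The defining condition above is truncated, so it assumes that a limiting direction is the limit of the normalised steps $(\mathbf{x}_k - \mathbf{x})/\|\mathbf{x}_k - \mathbf{x}\|$. It uses a hypothetical region $D = \{\mathbf{x} \in \mathbb{R}^2 : x_2 \geq x_1^2\}$ with $\mathbf{x} = (0, 0)$.

```python
import math
from typing import List, Sequence


def in_D(p: Sequence[float]) -> bool:
    """Hypothetical feasible region D = {x in R^2 : x2 >= x1^2}."""
    return p[1] >= p[0] * p[0]


def normalized_direction(xk: Sequence[float], x: Sequence[float]) -> List[float]:
    """Unit vector (x_k - x) / ||x_k - x||, assuming x_k != x."""
    d = [a - b for a, b in zip(xk, x)]
    norm = math.hypot(*d)
    return [di / norm for di in d]


x = (0.0, 0.0)
for k in (1, 10, 100, 1000):
    t = 1.0 / k
    xk = (t, t * t)  # points on the boundary x2 = x1^2, all different from x
    assert in_D(xk)
    w = normalized_direction(xk, x)
    print(k, [round(v, 4) for v in w])

# Moving straight along w = (1, 0) leaves D at once:
print(in_D((1e-3, 0.0)))  # False
```

The normalised steps approach $\mathbf{w} = (1, 0)$, so this direction belongs to $C(\mathbf{x})$ even though a straight move along it immediately leaves $D$.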

Theorem 7.2.2

If $\mathbf{x}^*$ is a local solution of the optimisation problem and $f$ is differentiable at $\mathbf{x}^*$, then $\nabla f(\mathbf{x}^*) \cdot \mathbf{w} \geq 0$ for all $\mathbf{w} \in C(\mathbf{x}^*)$. For the directions $\mathbf{w}$ for which $\nabla f(\mathbf{x}^*) \cdot \mathbf{w} = 0$, it is not possible to determine, from first-derivative information alone, whether a move along this direction will increase or decrease the objective function. It is necessary to examine the second derivatives …
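
Here is a numeric sketch of this first-order condition on two hypothetical problems. Both live on the quadrant $D = \{\mathbf{x} : x_1 \geq 0,\ x_2 \geq 0\}$ at $\mathbf{x}^* = (0, 0)$, where the cone of feasible directions is taken to be the quadrant itself.

- The first check samples directions and confirms $\nabla f(\mathbf{x}^*) \cdot \mathbf{w} \geq 0$ for $f(\mathbf{x}) = x_1 + x_2$.
- The second uses two functions with the same gradient $(1, 0)$ at the origin. For $\mathbf{w} = (0, 1)$ the product $\nabla f(\mathbf{x}^*) \cdot \mathbf{w}$ is zero, yet one function increases along $\mathbf{w}$ and the other decreases.

```python
import math
import random
from typing import Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(ai * bi for ai, bi in zip(a, b))


# Problem 1 (hypothetical): f(x) = x1 + x2 on the quadrant, x* = (0, 0), grad f(x*) = (1, 1)
grad_f_star = (1.0, 1.0)
random.seed(0)
for _ in range(1000):
    theta = random.uniform(0.0, math.pi / 2)  # unit directions inside the quadrant
    w = (math.cos(theta), math.sin(theta))
    assert dot(grad_f_star, w) >= 0.0  # first-order necessary condition


# Problem 2 (hypothetical): same gradient (1, 0) at the origin, opposite curvature in x2
def f_up(x: Sequence[float]) -> float:
    return x[0] + x[1] ** 2  # the origin is a local minimiser on the quadrant


def f_down(x: Sequence[float]) -> float:
    return x[0] - x[1] ** 2  # the origin is not a local minimiser


w = (0.0, 1.0)
print(dot((1.0, 0.0), w))  # 0.0: first-order information is inconclusive
for t in (0.1, 0.01):
    step = (t * w[0], t * w[1])
    print(t, f_up(step), f_down(step))  # positive vs negative
```

Only the second-order terms $\pm x_2^2$ separate the two cases.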

Definition 7.2.5

Given a pair $(\mathbf{x}^*, \lambda^*)$ satisfying the KKT conditions, the set … is called the critical cone $C(\lambda^*)$. Indeed, for $\mathbf{w} \in C(\lambda^*)$, it follows from the first KKT condition that … If $\mathbf{x}^*$ is a local solution, then the curvature of the Lagrangian along the directions in $C(\lambda^*)$ must be non-negative in the case of qualified constraints. A positive curvature is instead a sufficient condi…
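
The set that defines the critical cone is not reproduced above. This sketch therefore assumes the usual definition for inequality constraints $c_i(\mathbf{x}) \geq 0$. A direction $\mathbf{w}$ belongs to $C(\lambda^*)$ when both of the following hold:

- $\nabla c_i(\mathbf{x}^*) \cdot \mathbf{w} = 0$ for every active $i$ with $\lambda_i^* > 0$;
- $\nabla c_i(\mathbf{x}^*) \cdot \mathbf{w} \geq 0$ for every active $i$ with $\lambda_i^* = 0$.

The helper and the example problem are hypothetical.

```python
from typing import Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(ai * bi for ai, bi in zip(a, b))


def in_critical_cone(w: Sequence[float],
                     active_grads: Sequence[Sequence[float]],
                     active_lams: Sequence[float], tol: float = 1e-10) -> bool:
    """Assumed definition for c_i(x) >= 0; pass only the active constraints."""
    for grad_c, lam_i in zip(active_grads, active_lams):
        s = dot(grad_c, w)
        if lam_i > tol:
            if abs(s) > tol:  # strongly active: w must keep c_i at zero to first order
                return False
        elif s < -tol:        # weakly active (lam_i = 0): w must not decrease c_i
            return False
    return True


# Hypothetical problem: minimise x2 subject to c1(x) = x2 >= 0 and c2(x) = x1 >= 0.
# At x* = (0, 0): grad f = (0, 1) = 1 * grad c1 + 0 * grad c2, so lam* = (1, 0).
active_grads = [(0.0, 1.0), (1.0, 0.0)]
active_lams = [1.0, 0.0]

print(in_critical_cone((1.0, 0.0), active_grads, active_lams))   # True
print(in_critical_cone((-1.0, 0.0), active_grads, active_lams))  # False
print(in_critical_cone((0.0, 1.0), active_grads, active_lams))   # False
```

For $\mathbf{w} = (1, 0)$ in the cone, $\nabla f(\mathbf{x}^*) \cdot \mathbf{w} = (0, 1) \cdot (1, 0) = 0$. First-order information is therefore silent along the critical cone, which is why its curvature matters.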

Theorem 7.2.3

Let $f$ and $c$ be twice continuously differentiable, let $\mathbf{x}^*$ be a local solution of the constrained problem, and suppose that the LICQ is satisfied. Let $\lambda^* \in \mathbb{R}^m$ be the Lagrange multiplier for which the pair $(\mathbf{x}^*, \lambda^*)$ satisfies the KKT conditions. Then $\mathbf{w}^\top \nabla^2_{\mathbf{xx}} \mathcal{L}(\mathbf{x}^*, \lambda^*)\, \mathbf{w} \geq 0$ for all $\mathbf{w} \in C(\lambda^*)$.
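
This is a minimal sketch of checking the second-order necessary condition. It relies on two assumptions beyond the text:

- The Hessian of the Lagrangian at $(\mathbf{x}^*, \lambda^*)$ is already available.
- Every active constraint has $\lambda_i^* > 0$ (strict complementarity), so that $C(\lambda^*)$ is the null space of the active gradients.

The check then reduces to testing whether the reduced Hessian $Z^\top \nabla^2_{\mathbf{xx}}\mathcal{L}\, Z$ is positive semidefinite, where the columns of $Z$ span that null space. All helper names are hypothetical, and the code avoids external libraries.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def null_space_basis(rows: Sequence[Sequence[float]], n: int, tol: float = 1e-12) -> Matrix:
    """Basis of {w in R^n : row . w = 0 for every row}, via reduced row echelon form."""
    m = [[float(v) for v in row] for row in rows]
    pivots: List[int] = []
    r = 0
    for col in range(n):
        if r == len(m):
            break
        p = max(range(r, len(m)), key=lambda i: abs(m[i][col]))
        if abs(m[p][col]) <= tol:
            continue
        m[r], m[p] = m[p], m[r]
        piv = m[r][col]
        m[r] = [v / piv for v in m[r]]
        for i in range(len(m)):
            if i != r:  # clear this column in every other row
                factor = m[i][col]
                m[i] = [vi - factor * vr for vi, vr in zip(m[i], m[r])]
        pivots.append(col)
        r += 1
    basis = []
    for free in (c for c in range(n) if c not in pivots):
        z = [0.0] * n
        z[free] = 1.0
        for row_idx, pcol in enumerate(pivots):
            z[pcol] = -m[row_idx][free]
        basis.append(z)
    return basis


def is_psd(a: Matrix, tol: float = 1e-10) -> bool:
    """Positive semidefiniteness via symmetric elimination with diagonal pivoting."""
    a = [row[:] for row in a]
    remaining = list(range(len(a)))
    while remaining:
        k = max(remaining, key=lambda i: a[i][i])
        d = a[k][k]
        if d < -tol:
            return False  # largest diagonal entry is negative
        if d <= tol:
            # Remaining diagonals are ~0 or negative: PSD only if the block is ~0
            return all(abs(a[i][j]) <= tol for i in remaining for j in remaining)
        remaining.remove(k)
        for i in remaining:
            for j in remaining:
                a[i][j] -= a[i][k] * a[k][j] / d  # Schur complement update
    return True


def second_order_necessary(hess_L: Matrix, active_grads: Sequence[Sequence[float]], n: int) -> bool:
    """Test w^T H w >= 0 on the null space of the active gradients.
    This equals the critical cone only under strict complementarity (assumed)."""
    Z = null_space_basis(active_grads, n)
    hz = [[sum(hess_L[i][k] * z[k] for k in range(n)) for i in range(n)] for z in Z]
    reduced = [[sum(za[i] * hzb[i] for i in range(n)) for hzb in hz] for za in Z]
    return is_psd(reduced)


active_grads = [[1.0, 0.0]]              # grad c1(x*) for c1(x) = x1 >= 0, lam1* = 1
hess_good = [[-2.0, 0.0], [0.0, 2.0]]    # Hessian of L for f = x1 - x1^2 + x2^2
hess_bad = [[0.0, 0.0], [0.0, -2.0]]     # Hessian of L for f = x1 - x2^2
print(second_order_necessary(hess_good, active_grads, 2))  # True
print(second_order_necessary(hess_bad, active_grads, 2))   # False
```

With $f(\mathbf{x}) = x_1 - x_1^2 + x_2^2$, the full Hessian is indefinite, but its restriction to the critical direction $(0, 1)$ is positive, so the condition holds. For $f(\mathbf{x}) = x_1 - x_2^2$ it fails, and indeed $f(0, t) = -t^2 < 0$.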

