Will Monroe

CS 109 Lecture Notes #15

July 28, 2017

Covariance and Correlation

Based on a chapter by Chris Piech

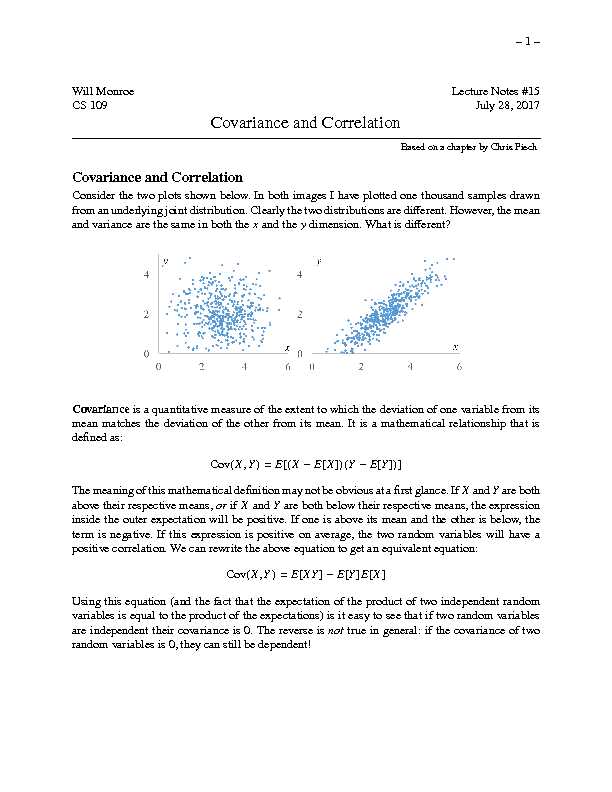

Consider the two plots shown above. In both images I have plotted one thousand samples drawn from an underlying joint distribution. Clearly the two distributions are different. However, the mean and variance are the same in both the $x$ and the $y$ dimensions. What is different?

Covariance is a quantitative measure of the extent to which the deviation of one variable from its mean matches the deviation of the other from its mean. It is a mathematical relationship that is defined as:

$$\mathrm{Cov}(X, Y) = E[(X - E[X])(Y - E[Y])]$$
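To make the definition concrete, the sketch below treats a finite list of $(x, y)$ pairs as equally likely outcomes and evaluates the expectation directly. The two datasets are made up for illustration and are not the samples in the plots. Both have the same mean and variance in $x$ and in $y$, yet their covariances have opposite signs.

```python
def mean(values):
    """Expectation of a list of equally likely values."""
    return sum(values) / len(values)


def covariance(xs, ys):
    """Cov(X, Y) = E[(X - E[X])(Y - E[Y])] for equally likely (x, y) pairs."""
    mx, my = mean(xs), mean(ys)
    return mean([(x - mx) * (y - my) for x, y in zip(xs, ys)])


xs = [1, 2, 3, 4, 5]
ys_rising = [1, 2, 3, 4, 5]   # y deviates in the same direction as x
ys_falling = [5, 4, 3, 2, 1]  # y deviates in the opposite direction

print(covariance(xs, ys_rising))   # 2.0
print(covariance(xs, ys_falling))  # -2.0
```

Dividing by $n$ (rather than $n - 1$) matches the definition above for a distribution over the listed points; sample estimators of covariance often divide by $n - 1$ instead.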

The meaning of this mathematical definition may not be obvious at first glance. If $X$ and $Y$ are both above their respective means, or if $X$ and $Y$ are both below their respective means, the expression inside the outer expectation will be positive. If one is above its mean and the other is below, the term is negative. If this expression is positive on average, the two random variables will have a positive correlation. We can rewrite the above equation to get an equivalent equation:

$$\mathrm{Cov}(X, Y) = E[XY] - E[Y]E[X]$$
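The equivalence of the two forms follows from linearity of expectation. Writing $\mu_X = E[X]$ and $\mu_Y = E[Y]$, which are constants and can therefore be pulled out of an expectation:

$$
\begin{aligned}
\mathrm{Cov}(X, Y) &= E[(X - \mu_X)(Y - \mu_Y)] \\
&= E[XY - \mu_Y X - \mu_X Y + \mu_X \mu_Y] \\
&= E[XY] - \mu_Y E[X] - \mu_X E[Y] + \mu_X \mu_Y \\
&= E[XY] - \mu_Y \mu_X - \mu_X \mu_Y + \mu_X \mu_Y \\
&= E[XY] - E[Y]E[X].
\end{aligned}
$$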

Using this equation, and the fact that the expectation of the product of two independent random variables is equal to the product of the expectations ($E[XY] = E[X]E[Y]$), it is easy to see that if two random variables are independent, their covariance is 0. The reverse is not true in general: if the covariance of two random variables is 0, they can still be dependent!

Properties of Covariance
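Before turning to the properties, here is a small exact check that zero covariance does not imply independence. The example is an illustrative assumption, not taken from the notes: $X$ is uniform on $\{-1, 0, 1\}$ and $Y = X^2$, so $Y$ is completely determined by $X$.

```python
from fractions import Fraction

# Hypothetical example: X is uniform on {-1, 0, 1} and Y = X**2.
outcomes = [-1, 0, 1]
p = Fraction(1, 3)  # probability of each outcome of X


def expect(f):
    """E[f(X)] for X uniform on `outcomes`, computed exactly with fractions."""
    return sum(p * f(x) for x in outcomes)


e_x = expect(lambda x: x)           # E[X] = 0
e_y = expect(lambda x: x**2)        # E[Y] = 2/3
e_xy = expect(lambda x: x * x**2)   # E[XY] = E[X^3] = 0
cov = e_xy - e_x * e_y              # Cov(X, Y) = E[XY] - E[X]E[Y]
print(cov)  # 0

# Yet X and Y are dependent: P(Y = 1 | X = 1) = 1, while P(Y = 1) = 2/3.
p_y_is_1 = sum(p for x in outcomes if x**2 == 1)
print(p_y_is_1)  # 2/3
```

Exact fractions are used so that the zero covariance is not an artifact of floating-point rounding.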

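Say that $X$ and $Y$ are arbitrary random variables and $a$ and $b$ are constants:

$$\mathrm{Cov}(X, Y) = \mathrm{Cov}(Y, X)$$

$$\mathrm{Cov}(X, X) = E[X^2] - E[X]E[X] = \mathrm{Var}(X)$$

$$\mathrm{Cov}(aX + b, Y) = a\,\mathrm{Cov}(X, Y)$$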
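The three properties above can be spot-checked on a small discrete joint distribution. The PMF and the constants $a = 3$, $b = 7$ below are arbitrary choices for illustration; a passing check on one distribution is a sanity test, not a proof.

```python
from fractions import Fraction

# Hypothetical joint PMF of (X, Y), invented for illustration: (x, y) -> probability.
joint = {
    (0, 1): Fraction(1, 4),
    (1, 1): Fraction(1, 4),
    (1, 3): Fraction(1, 2),
}


def expect(f):
    """E[f(X, Y)] under the joint PMF, computed exactly with fractions."""
    return sum(prob * f(x, y) for (x, y), prob in joint.items())


def cov(f, g):
    """Cov(f(X, Y), g(X, Y)) via the identity E[fg] - E[f]E[g]."""
    return expect(lambda x, y: f(x, y) * g(x, y)) - expect(f) * expect(g)


def X(x, y):  # named to mirror the math: the random variable X
    return x


def Y(x, y):  # the random variable Y
    return y


a, b = 3, 7  # arbitrary constants for the linearity check
mu_x = expect(X)
var_x = expect(lambda x, y: (x - mu_x) ** 2)  # Var(X) from its definition

assert cov(X, Y) == cov(Y, X)                           # symmetry
assert cov(X, X) == var_x                               # Cov(X, X) = Var(X)
assert cov(lambda x, y: a * x + b, Y) == a * cov(X, Y)  # the shift b drops out
print(cov(X, Y))  # 1/4
```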

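Let $X = X_1 + X_2 + \cdots + X_n$ and let $Y = Y_1 + Y_2 + \cdots + Y_m$. The covariance of $X$ and $Y$ is:

$$\mathrm{Cov}(X, Y) = \sum_{i=1}^{n} \sum_{j=1}^{m} \mathrm{Cov}(X_i, Y_j)$$

$$\mathrm{Cov}(X, X) = \mathrm{Var}(X) = \sum_{i=1}^{n} \sum_{j=1}^{n} \mathrm{Cov}(X_i, X_j)$$

That last property gives us a third way to calculate variance.

Correlation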

Covariance is interesting because it is a quantitative measurement of the relationship between two variables. The correlation between two random variables, $\rho(X, Y)$, is the covariance of the two variables normalized by the standard deviation of each variable. This normalization cancels the units out and normalizes the measure so that it always lies in the range $[-1, 1]$:

$$\rho(X, Y) = \frac{\mathrm{Cov}(X, Y)}{\sqrt{\mathrm{Var}(X)\,\mathrm{Var}(Y)}}$$

Correlation measures linearity between $X$ and $Y$.

$$\rho(X, Y) = 1 \;\Rightarrow\; Y = aX + b \text{ where } a = \sigma_Y / \sigma_X$$

$$\rho(X, Y) = -1 \;\Rightarrow\; Y = aX + b \text{ where } a = -\sigma_Y / \sigma_X$$

$$\rho(X, Y) = 0 \;\Rightarrow\; \text{absence of linear relationship}$$

Here $\sigma_X = \sqrt{\mathrm{Var}(X)}$ and $\sigma_Y = \sqrt{\mathrm{Var}(Y)}$ are the standard deviations. If $\rho(X, Y) = 0$, we say that $X$ and $Y$ are "uncorrelated." If two variables are independent, then their correlation will be 0. However, as with covariance, it doesn't go the other way: a correlation of 0 does not imply independence.

When people use the term correlation, they are usually referring to a specific type of correlation called "Pearson" correlation. It measures the degree to which there is a linear relationship between the two variables. An alternative measure is "Spearman" correlation, which has a formula almost identical to the correlation defined above, with the exception that the underlying random variables are first transformed into their ranks. Spearman correlation is outside the scope of CS109.
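The correlation formulas in this section can be sketched in a few lines. The block below computes Pearson correlation straight from the definition, checks the $\rho = \pm 1$ cases and the unit invariance on made-up data, and then computes Spearman correlation as Pearson correlation of the ranks, following the one-line description above. Giving tied values their average rank is an assumption here (a common convention); for real work, a library routine such as SciPy's `scipy.stats.spearmanr` is the safer choice.

```python
import math


def mean(values):
    """Expectation of a list of equally likely values."""
    return sum(values) / len(values)


def covariance(xs, ys):
    """Cov(X, Y) = E[(X - E[X])(Y - E[Y])] for equally likely (x, y) pairs."""
    mx, my = mean(xs), mean(ys)
    return mean([(x - mx) * (y - my) for x, y in zip(xs, ys)])


def pearson(xs, ys):
    """rho(X, Y) = Cov(X, Y) / sqrt(Var(X) Var(Y)), using Var(X) = Cov(X, X)."""
    return covariance(xs, ys) / math.sqrt(covariance(xs, xs) * covariance(ys, ys))


def ranks(values):
    """Rank values from 1 (smallest) upward; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend `end` across a run of tied values.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1  # average of ranks start+1 .. end+1
        for k in range(start, end + 1):
            result[order[k]] = shared_rank
        start = end + 1
    return result


def spearman(xs, ys):
    """Spearman correlation: Pearson correlation applied to the ranks."""
    return pearson(ranks(xs), ranks(ys))


xs = [1, 2, 3, 4, 5]
print(pearson(xs, [2 * x + 3 for x in xs]))   # 1.0  (Y = aX + b with a > 0)
print(pearson(xs, [-2 * x + 3 for x in xs]))  # -1.0 (Y = aX + b with a < 0)

cubes = [x**3 for x in xs]  # monotone but not linear
print(pearson(xs, cubes))   # below 1: the relationship is not linear
print(spearman(xs, cubes))  # 1.0: the ranks agree perfectly

# Rescaling X (e.g. a change of units) leaves rho unchanged.
print(math.isclose(pearson([100 * x for x in xs], cubes), pearson(xs, cubes)))  # True
```

The contrast on `cubes` is the point of the rank transform: Pearson correlation penalizes the curvature, while Spearman correlation only asks whether larger $x$ goes with larger $y$.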
