Least Squares

Least Squares

17 сент. 2013 г. The MAtlAB function polyfit computes least squares polynomial fits by setting up the design matrix and using backslash to find the ...

Magic Squares

Magic Squares

2 окт. 2011 г. Write a MAtlAB function ismagic(A) that checks if A is a magic square. 10.2 Magic sum. Show that. 1 n n2. ∑ k=1 k = n3 + n. 2 . 10.3 durerperm ...

Some important Built-in function in MATLAB 1. Square root b=sqrt(x

Some important Built-in function in MATLAB 1. Square root b=sqrt(x

14 мар. 2020 г. Some important Built-in function in MATLAB. 1. Square root b=sqrt(x) b=sqrt(4). 2. 2. Remainder of dividing x/y a=rem(xy) a=rem(10

Objective 1 Triangular Wave 2 Square Wave 3 Discrete Time

Objective 1 Triangular Wave 2 Square Wave 3 Discrete Time

2 Square Wave. MATLAB has a built-in function square to generate a periodic square waveform. Following example will help you draw such a waveform. 2.1

- 1 - Some MATLAB Built-in Functions Function Description sqrt(x

- 1 - Some MATLAB Built-in Functions Function Description sqrt(x

Some MATLAB Built-in Functions. Function. Description sqrt(x). Square root of x nthroot(xn) nth root of x abs(x). Absolute value of x exp(x). Exponential (ex).

Eigenvalues and Singular Values

Eigenvalues and Singular Values

16 сент. 2013 г. The Matlab function condeig computes eigenvalue condition numbers. ... The qr function in Matlab factors any matrix real or complex

Quadrature

Quadrature

area—plot the function on graph paper and count the number of little squares that The function functions in Matlab itself usually expect the first argument to.

Iteration

Iteration

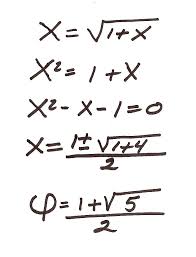

2 окт. 2011 г. Matlab responds with x = 3. Next enter this statement x = sqrt(1 + x). The abbreviation sqrt is the Matlab name for the square root function.

Total Least Squares Approach to Modeling: A Matlab Toolbox

Total Least Squares Approach to Modeling: A Matlab Toolbox

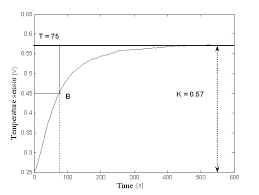

In this section we show some applications of the TLS method in static and dynamical modeling by using the created Matlab functions [14]. 3.1 Linear regression

Implementing the 2D square lattice Boltzmann method in Matlab

Implementing the 2D square lattice Boltzmann method in Matlab

23 февр. 2023 г. In contrast to LGA. LBM deals with distribution function values instead of single particles. The exact denomination for the following described ...

General Linear Least-Squares and Nonlinear Regression

General Linear Least-Squares and Nonlinear Regression

Applied Numerical Methods with MATLAB for Engineers Chapter 15 & Teaching The least-squares ... function that returns the sum of the squares of the.

Objective 1 Triangular Wave 2 Square Wave 3 Discrete Time

Objective 1 Triangular Wave 2 Square Wave 3 Discrete Time

MATLAB has a built-in function sawtooth to generate a periodic triangular waveform. Following example will help you draw such a waveform. 1.1 Example. Generate

Magic Squares

Magic Squares

2011?10?2? An n-by-n magic square is an array containing the integers from 1 to n2 ... squares of order n but the MAtlAB function magic(n) generates a ...

Least Squares

Least Squares

2013?9?17? The MAtlAB function polyfit computes least squares polynomial fits by setting up the design matrix and using backslash to find the ...

Some important Built-in function in MATLAB 1. Square root b=sqrt(x

Some important Built-in function in MATLAB 1. Square root b=sqrt(x

2020?3?14? Some important Built-in function in MATLAB. 1. Square root b=sqrt(x) b=sqrt(4). 2. 2. Remainder of dividing x/y a=rem(xy) a=rem(10

Eigenvalues and Singular Values

Eigenvalues and Singular Values

2013?9?16? A singular value and pair of singular vectors of a square or ... qr function in Matlab factors any matrix real or complex

Iteration

Iteration

2011?10?2? The abbreviation sqrt is the Matlab name for the square root function. The quantity on the right. /. 1 + x

DEPARTMENTS OF MATHEMATICS

DEPARTMENTS OF MATHEMATICS

1999?1?4? MAtlAB has included since at least version 3 a function sqrtm for computing a square root of a matrix. The function works by reducing the ...

INTRODUCTION TO MATLAB FOR ENGINEERING STUDENTS

INTRODUCTION TO MATLAB FOR ENGINEERING STUDENTS

The function diary is useful if you want to save a complete MATLAB session. of vectors in MATLAB are enclosed by square brackets and are separated by ...

Total Least Squares Approach to Modeling: A Matlab Toolbox

Total Least Squares Approach to Modeling: A Matlab Toolbox

In this section we show some applications of the TLS method in static and dynamical modeling by using the created Matlab functions [14]. 3.1 Linear regression

MATLAB Basic Functions Reference - MathWorks

MATLAB Basic Functions Reference - MathWorks

Tasks represent a series of MATLAB commands To see the commands that the task runs show the generated code Common tasks available from the Live Editor tab on the desktop toolstrip: • Clean Missing Data • Clean Outlier • Find Change Points • Find Local Extrema • Remove Trends • Smooth Data mathworks com/help/matlab

Chapter 10 Magic Squares - MathWorks

Chapter 10 Magic Squares - MathWorks

Ann-by-nmagic square is an array containing the integers from 1 ton2arranged so that each of the rows each of the columns and the two principaldiagonals have the same sum For eachn >2 there are many di?erent magicsquares of ordern but theMatlabfunctionmagic(n)generates a particular one Matlabcan generate Lo Shu with = magic(3) which produces

MATLAB Function Example Handout - University of Wyoming

MATLAB Function Example Handout - University of Wyoming

ical function libraries The Matlab Optimization and Curve Fitting Toolboxes include functions for one-norm and in?nity-norm problems We will limit ourselves to least squares in this book 5 3 censusgui The NCM program censusgui involves several di?erent linear models The data

Chapter 1 Iteration - MathWorks

Chapter 1 Iteration - MathWorks

In Matlab and most other programming languages the equals sign is the assignment operator It says compute the value on the right and store it in the variable on the left So the statement x = sqrt(1 + x) takes the current value of x computes sqrt(1 + x) and stores the result back in x

Functions and Scripts - Electrical Engineering and Computer

Functions and Scripts - Electrical Engineering and Computer

Many functions are programmed inside MATLAB as built-in functions and can be used in mathematical expressions simply by typing their name with an argument; examples are sin(x) cos(x) sqrt(x) and exp(x) MATLAB has a plethora of built-in functions for mathematical and scientific computations

Chapter 1 Introduction to MATLAB - MathWorks

Chapter 1 Introduction to MATLAB - MathWorks

MATLAB An introduction to MATLAB through a collection of mathematical and com-putational projects is provided by Moler’s free online Experiments with MATLAB [6] A list of over 1500 Matlab-based books by other authors and publishers in several languages is available at [12] Three introductions to Matlab are of par-

MATLAB Getting Started Guide - Massachusetts Institute of

MATLAB Getting Started Guide - Massachusetts Institute of

The load function reads binary files containing matrices generated by earlier MATLAB sessions or reads text files containing numeric data The text file should be organized as a rectangular table of numbers separated by blanks with one row per line and an equal number of elements in each row

MATLAB Function Tips - Michigan State University

MATLAB Function Tips - Michigan State University

function [xsqrd xcubd] = square(x) xsqrd = x^2 xcubd = x^3 There are two possible places to position this code If you are using MATLAB 5 0 or later this function code can be placed at the end of the main program in the same file as the main program It could also be placed in its own file which must use the function name or for our example

Matlab Introduction - California State University Long Beach

Matlab Introduction - California State University Long Beach

The batch commands in a file are then executed by typing the name of the file at the Matlab command prompt The advantage to using a ' m' file is that you can make small changes to your code (even in different Matlab sessions) without having to remember and retype the entire set of commands

6057 Introduction to MATLAB Homework 2 - MIT OpenCourseWare

6057 Introduction to MATLAB Homework 2 - MIT OpenCourseWare

Use magenta square symbols of marker size 10 and line width 4 and no line connecting them You may have to change the x limits to see all 6 symbols (xlim) If the relationship really is exponential it will look linear on a log plot 2 Subplot and axis modes Make a new Square Tight 100 100 200 figure that has a 2x2 grid of axes () subplot 200

MATLAB Commands and Functions - College of Science and

MATLAB Commands and Functions - College of Science and

MATLAB Commands – 11 M-Files eval Interpret strings containing Matlab expressions feval Function evaluation function Creates a user-defined function M-file global Define global variables nargin Number of function input arguments nargout Number of function output arguments script Script M-files Timing cputime CPU time in seconds

Searches related to function square matlab filetype:pdf

Searches related to function square matlab filetype:pdf

MATLAB is installed on the engineering instructional facility You can find it in the Start>Programs menu You can also install MATLAB on your own computer This is a somewhat involved process –you need to first register your name at mathworks then wait until they create an account for you there then download MATLAB and activate it

What are the functions of MATLAB?

- MATLAB Function Example Handout. MatLab is a high performance numeric computing environment, which includes numerical analysis, matrix computation, signal processing, and graphics to provide answers to the most troubling of mathematical problems. This handout provides different examples to show the different aspects of MatLab.

How to generate unit step function in MATLAB?

- function [x]=unitstep (x) %This is a unit step "function". The vector keeping track of time is the %input. If time is negative then a zero is returned. If time is zero than %0.5 is returned.

How to generate square wave in MATLAB?

- Square wave is generated using “square” function in Matlab. The command sytax – square (t,dutyCycle) – generates a square wave with period for the given time base. The command behaves similar to “ sin ” command (used for generating sine waves), but in this case it generates a square wave instead of a sine wave.

Chapter 5

Least Squares

The term least squares describes a frequently used approach to solving overdetermined or inexactly specified systems of equations in an approximate sense. Instead of solving the equations exactly, we seek only to minimize the sum of the squares of the residuals.

The least squares criterion has important statistical interpretations. If appropriate probabilistic assumptions about underlying error distributions are made, least squares produces what is known as the maximum-likelihood estimate of the parameters. Even if the probabilistic assumptions are not satisfied, years of experience have shown that least squares produces useful results.

The computational techniques for linear least squares problems make use of orthogonal matrix factorizations.

5.1 Models and Curve Fitting

A very common source of least squares problems is curve fitting. Let $t$ be the independent variable and let $y(t)$ denote an unknown function of $t$ that we want to approximate. Assume there are $m$ observations, i.e., values of $y$ measured at specified values of $t$:

$$y_i = y(t_i), \quad i = 1, \ldots, m.$$

The idea is to model $y(t)$ by a linear combination of $n$ basis functions:

$$y(t) \approx \beta_1 \phi_1(t) + \cdots + \beta_n \phi_n(t).$$

The design matrix $X$ is a rectangular matrix of order $m$ by $n$ with elements

$$x_{i,j} = \phi_j(t_i).$$

The design matrix usually has more rows than columns. In matrix-vector notation, the model is

$$y \approx X\beta.$$

September 17, 2013
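As a minimal sketch of how a design matrix is assembled from basis functions, the fragment below builds $X$ column by column. The basis $\{1, t, t^2\}$, the observation times, and the synthetic observations are assumptions chosen for illustration; they do not come from the text.

```matlab
% Hypothetical observation times and values (illustrative only).
t = (0:0.5:3)';              % m = 7 observation times, as a column
y = 2 + 3*t - 0.5*t.^2;      % observations generated from a known quadratic

% Assumed basis functions: phi_1(t) = 1, phi_2(t) = t, phi_3(t) = t^2.
phi = {@(t) ones(size(t)), @(t) t, @(t) t.^2};

% Design matrix: X(i,j) = phi_j(t_i).
m = length(t);
n = length(phi);
X = zeros(m,n);
for j = 1:n
    X(:,j) = phi{j}(t);
end

% X has more rows than columns, so X*beta = y is overdetermined.
% Backslash returns the least squares solution.
beta = X\y;
```

Because the synthetic observations lie exactly in the span of the assumed basis, `beta` recovers the generating coefficients 2, 3, and -0.5 up to roundoff.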


The symbol $\approx$ stands for "is approximately equal to." We are more precise about this in the next section, but our emphasis is on least squares approximation. The basis functions $\phi_j(t)$ can be nonlinear functions of $t$, but the unknown parameters, $\beta_j$, appear in the model linearly. The system of linear equations

$$X\beta \approx y$$

is overdetermined if there are more equations than unknowns. The Matlab backslash operator computes a least squares solution to such a system.

    beta = X\y

The basis functions might also involve some nonlinear parameters, $\alpha_1, \ldots, \alpha_p$. The problem is separable if it involves both linear and nonlinear parameters:

$$y(t) \approx \beta_1 \phi_1(t, \alpha) + \cdots + \beta_n \phi_n(t, \alpha).$$

The elements of the design matrix depend upon both $t$ and $\alpha$:

$$x_{i,j} = \phi_j(t_i, \alpha).$$

Separable problems can be solved by combining backslash with the Matlab function fminsearch or one of the nonlinear minimizers available in the Optimization Toolbox. The new Curve Fitting Toolbox provides a graphical interface for solving nonlinear fitting problems.

Some common models include the following:

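• Straight line: If the model is also linear in $t$, it is a straight line:

$$y(t) \approx \beta_1 t + \beta_2.$$

• Polynomials: The coefficients $\beta_j$ appear linearly. Matlab orders polynomials with the highest power first:

$$\phi_j(t) = t^{n-j}, \quad j = 1, \ldots, n,$$

$$y(t) \approx \beta_1 t^{n-1} + \cdots + \beta_{n-1} t + \beta_n.$$

The Matlab function polyfit computes least squares polynomial fits by setting up the design matrix and using backslash to find the coefficients.

• Rational functions: The coefficients in the numerator appear linearly; the coefficients in the denominator appear nonlinearly:

$$\phi_j(t) = \frac{t^{n-j}}{\alpha_1 t^{n-1} + \cdots + \alpha_{n-1} t + \alpha_n},$$

$$y(t) \approx \frac{\beta_1 t^{n-1} + \cdots + \beta_{n-1} t + \beta_n}{\alpha_1 t^{n-1} + \cdots + \alpha_{n-1} t + \alpha_n}.$$

• Exponentials: The decay rates, $\lambda_j$, appear nonlinearly:

$$\phi_j(t) = e^{-\lambda_j t},$$

$$y(t) \approx \beta_1 e^{-\lambda_1 t} + \cdots + \beta_n e^{-\lambda_n t}.$$

• Log-linear: If there is only one exponential, taking logs makes the model linear but changes the fit criterion:

$$y(t) \approx K e^{\lambda t},$$

$$\log y \approx \beta_1 t + \beta_2, \quad \text{with } \beta_1 = \lambda, \ \beta_2 = \log K.$$

• Gaussians: The means and variances appear nonlinearly:

$$\phi_j(t) = e^{-\left(\frac{t - \mu_j}{\sigma_j}\right)^2},$$

$$y(t) \approx \beta_1 e^{-\left(\frac{t - \mu_1}{\sigma_1}\right)^2} + \cdots + \beta_n e^{-\left(\frac{t - \mu_n}{\sigma_n}\right)^2}.$$

5.2 Norms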

The residuals are the differences between the observations and the model:

$$r_i = y_i - \sum_{j=1}^{n} \beta_j \phi_j(t_i, \alpha), \quad i = 1, \ldots, m.$$

Or, in matrix-vector notation,

$$r = y - X(\alpha)\beta.$$

We want to find the $\alpha$'s and $\beta$'s that make the residuals as small as possible. What do we mean by "small"? In other words, what do we mean when we use the '$\approx$' symbol? There are several possibilities.

• Interpolation: If the number of parameters is equal to the number of observations, we might be able to make the residuals zero. For linear problems, this will mean that $m = n$ and that the design matrix $X$ is square. If $X$ is nonsingular, the $\beta$'s are the solution to a square system of linear equations:

$$\beta = X \backslash y.$$

• Least squares: Minimize the sum of the squares of the residuals:

$$\|r\|^2 = \sum_{i=1}^{m} r_i^2.$$

• Weighted least squares: If some observations are more important or more accurate than others, then we might associate different weights, $w_i$, with different observations and minimize

$$\|r\|_w^2 = \sum_{i=1}^{m} w_i^2 r_i^2.$$

For example, if the error in the $i$th observation is approximately $e_i$, then choose $w_i = 1/e_i$. Any algorithm for solving an unweighted least squares problem can be used to solve a weighted problem by scaling the observations and design matrix. We simply multiply both $y_i$ and the $i$th row of $X$ by $w_i$, so that each residual $r_i$ is scaled by $w_i$. In Matlab, this can be accomplished with

    X = diag(w)*X
    y = diag(w)*y

• One-norm: Minimize the sum of the absolute values of the residuals:

$$\|r\|_1 = \sum_{i=1}^{m} |r_i|.$$

This problem can be reformulated as a linear programming problem, but it is computationally more difficult than least squares. The resulting parameters are less sensitive to the presence of spurious data points or outliers.

• Infinity-norm: Minimize the largest residual:

$$\|r\|_\infty = \max_i |r_i|.$$

This is also known as a Chebyshev fit and can be reformulated as a linear programming problem. Chebyshev fits are frequently used in the design of digital filters and in the development of approximations for use in mathematical function libraries.

The Matlab Optimization and Curve Fitting Toolboxes include functions for one-norm and infinity-norm problems. We will limit ourselves to least squares in this book.

5.3 censusgui

The NCM program censusgui involves several different linear models. The data are the total population of the United States, as determined by the U.S. Census, for the years 1900 to 2010. The units are millions of people.

       t         y
    1900    75.995
    1910    91.972
    1920   105.711
    1930   123.203
    1940   131.669
    1950   150.697
    1960   179.323
    1970   203.212
    1980   226.505
    1990   249.633
    2000   281.422
    2010   308.748

The task is to model the population growth and predict the population when $t = 2020$. The default model in censusgui is a cubic polynomial in $t$:

$$y \approx \beta_1 t^3 + \beta_2 t^2 + \beta_3 t + \beta_4.$$

There are four unknown coefficients, appearing linearly.

Figure 5.1. censusgui. (Plot titled "Predict U.S. Population in 2020"; vertical axis in millions; displayed prediction 341.125.)

Numerically, it's a bad idea to use powers of $t$ as basis functions when $t$ is around 1900 or 2000. The design matrix is badly scaled and its columns are nearly linearly dependent. A much better basis is provided by powers of a translated and scaled $t$:

$$s = (t - 1955)/55,$$

$$y \approx \beta_1 s^3 + \beta_2 s^2 + \beta_3 s + \beta_4.$$

The resulting design matrix is well conditioned.
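The effect of translating and scaling $t$ can be checked numerically. The sketch below builds the cubic design matrix in both variables from the census table, compares condition numbers with cond, and fits the scaled model both with backslash and with polyfit. The variable names are illustrative choices, and this is not the code used inside censusgui.

```matlab
% U.S. census data from the table above (population in millions).
t = (1900:10:2010)';
y = [75.995 91.972 105.711 123.203 131.669 150.697 ...
     179.323 203.212 226.505 249.633 281.422 308.748]';

% Cubic design matrices in the raw variable t and the scaled variable s.
Xt = [t.^3 t.^2 t ones(size(t))];
s  = (t - 1955)/55;
Xs = [s.^3 s.^2 s ones(size(s))];

% Compare condition numbers; the raw basis is far worse conditioned.
condXt = cond(Xt);
condXs = cond(Xs);

% Least squares fit in the scaled variable, by backslash and by polyfit.
beta = Xs\y;
p    = polyfit(s,y,3)';      % polyfit also orders the highest power first

% Evaluate the cubic at the scaled value of t = 2020.
s2020 = (2020 - 1955)/55;
y2020 = polyval(beta,s2020);
```

The two coefficient vectors `beta` and `p` should agree to roundoff, and `y2020` can be compared with the prediction displayed in Figure 5.1.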

Figure 5.1 shows the fit to the census data by the default cubic polynomial. The extrapolation to the year 2020 seems reasonable. The push buttons allow you to vary the degree of the polynomial. As the degree increases, the fit becomes more accurate in the sense that $\|r\|$ decreases, but it also becomes less useful because the variation between and outside the observations increases.

The censusgui menu also allows you to choose interpolation by spline and pchip and to see the log-linear fit

$$y \approx K e^{\lambda t}.$$

Nothing in the censusgui tool attempts to answer the all-important question, "Which is the best model?" That's up to you to decide.

5.4 Householder Reflections

Householder reflections are matrix transformations that are the basis for some of the most powerful and flexible numerical algorithms known. We will use Householder reflections in this chapter for the solution of linear least squares problems and in a later chapter for the solution of matrix eigenvalue and singular value problems.

Formally, a Householder reflection is a matrix of the form

$$H = I - \rho u u^T,$$

where $u$ is any nonzero vector and $\rho = 2/\|u\|^2$. The quantity $u u^T$ is a matrix of rank one where every column is a multiple of $u$ and every row is a multiple of $u^T$. The resulting matrix $H$ is both symmetric and orthogonal, that is,

$$H^T = H$$

and

$$H^T H = H^2 = I.$$

In practice, the matrix $H$ is never formed. Instead, the application of $H$ to a vector $x$ is computed by

$$\tau = \rho u^T x,$$

$$Hx = x - \tau u.$$
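The two-step application of $H$ can be sketched as follows. The vectors `u` and `x` are arbitrary illustrative values, and the explicit matrix `H` is formed here only to check the result, which is exactly what one would avoid in practice.

```matlab
% Illustrative vectors (assumed values, not from the text).
u = [1; 2; 2];
x = [3; 1; 4];

% Apply H = I - rho*u*u' to x without forming H.
rho = 2/(u'*u);          % rho = 2/||u||^2
tau = rho*(u'*x);        % scalar tau = rho*u'*x
Hx  = x - tau*u;

% For comparison only: form H explicitly and check its properties.
H = eye(3) - rho*(u*u');
errApply = norm(H*x - Hx);        % agreement of the two computations
errSym   = norm(H - H');          % H is symmetric
errOrth  = norm(H'*H - eye(3));   % H is orthogonal
```

All three error quantities should be at roundoff level, and `norm(Hx)` equals `norm(x)` because a reflection preserves length.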

Geometrically, the vector $x$ is projected onto $u$ and then twice that projection is subtracted from $x$.

Figure 5.2 shows a vector $u$ and a line labeled $u_\perp$ that is perpendicular to $u$. It also shows two vectors, $x$ and $y$, and their images, $Hx$ and $Hy$, under the transformation $H$. The matrix transforms any vector into its mirror image in the line $u_\perp$. For any vector $x$, the point halfway between $x$ and $Hx$, that is, the vector

$$x - (\tau/2)u,$$

is actually on the line $u_\perp$. In more than two dimensions, $u_\perp$ is the plane perpendicular to the defining vector $u$.

Figure 5.2. Householder reflection. (Plot showing the vectors $u$, $x$, $y$, $Hx$, and $Hy$ and the line $u_\perp$.)

Figure 5.2 also shows what happens if $u$ bisects the angle between $x$ and one of the axes. The resulting $Hx$ then falls on that axis. In other words, all but one of the components of $Hx$ are zero. Moreover, since $H$ is orthogonal, it preserves length. Consequently, the nonzero component of $Hx$ is $\pm\|x\|$.

For a given vector $x$, the Householder reflection that zeros all but the $k$th component of $x$ is given by

$$\sigma = \pm\|x\|,$$

$$u = x + \sigma e_k,$$

$$\rho = 2/\|u\|^2 = 1/(\sigma u_k),$$

$$H = I - \rho u u^T.$$

In the absence of roundoff error, either sign could be chosen for $\sigma$, and the resulting $Hx$ would be on either the positive or the negative $k$th axis. In the presence of roundoff error, it is best to choose the sign so that

$$\operatorname{sign} \sigma = \operatorname{sign} x_k.$$

Then the operation $x_k + \sigma$ is actually an addition, not a subtraction.
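The recipe for zeroing all but the $k$th component can be tried on a small vector. In this sketch the vector `x` and the index `k` are illustrative assumptions; the sign of `sigma` follows the rule just stated.

```matlab
x = [3; 4; 12];          % illustrative vector, norm(x) = 13
k = 1;                   % component to keep

% Choose sign(sigma) = sign(x(k)) so that x(k) + sigma is an addition.
sigma = norm(x);
if x(k) < 0
    sigma = -sigma;
end

ek = zeros(size(x));
ek(k) = 1;
u   = x + sigma*ek;
rho = 1/(sigma*u(k));    % equals 2/norm(u)^2

% Apply the reflection without forming H.
Hx = x - rho*(u'*x)*u;
```

Here `sigma` is 13, `u` is (16, 4, 12), and `Hx` is (-13, 0, 0). With this sign choice the surviving component is $-\sigma$, so $Hx$ lands on the negative $k$th axis when $x_k$ is positive.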

5.5 The QR Factorization

If all the parameters appear linearly and there are more observations than basis functions, we have a linear least squares problem. The design matrix $X$ is $m$ by $n$ with $m > n$. We want to solve

$$X\beta \approx y.$$

But this system is overdetermined: there are more equations than unknowns. So we cannot expect to solve the system exactly. Instead, we solve it in the least squares sense:

$$\min_{\beta} \|X\beta - y\|.$$

A theoretical approach to solving the overdetermined system begins by multiplying both sides by $X^T$. This reduces the system to a square, $n$-by-$n$ system known as the normal equations:

$$X^T X \beta = X^T y.$$

If there are thousands of observations and only a few parameters, the design matrix $X$ is quite large, but the matrix $X^T X$ is small. We have projected $y$ onto the space spanned by the columns of $X$. Continuing with this theoretical approach, if the basis functions are independent, then $X^T X$ is nonsingular and

$$\beta = (X^T X)^{-1} X^T y.$$

This formula for solving linear least squares problems appears in most textbooks on statistics and numerical methods. However, there are several undesirable aspects to this theoretical approach. We have already seen that using a matrix inverse to solve a system of equations is more work and less accurate than solving the system by Gaussian elimination. But, more importantly, the normal equations are always more badly conditioned than the original overdetermined system. In fact, the condition number is squared:

$$\kappa(X^T X) = \kappa(X)^2.$$

With finite-precision computation, the normal equations can actually become singular, and $(X^T X)^{-1}$ nonexistent, even though the columns of $X$ are independent.

As an extreme example, consider the design matrix

$$X = \begin{pmatrix} 1 & 1 \\ \delta & 0 \\ 0 & \delta \end{pmatrix}.$$

If $\delta$ is small, but nonzero, the two columns of $X$ are nearly parallel but are still linearly independent. The normal equations make the situation worse:

$$X^T X = \begin{pmatrix} 1 + \delta^2 & 1 \\ 1 & 1 + \delta^2 \end{pmatrix}.$$

If $|\delta| < 10^{-8}$, the matrix $X^T X$ computed with double-precision floating-point arithmetic is exactly singular and the inverse required in the classic textbook formula does not exist.

Matlab avoids the normal equations. The backslash operator not only solves square, nonsingular systems, but also computes the least squares solution to rectangular, overdetermined systems:

$$\beta = X \backslash y.$$
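The extreme example can be reproduced directly. In this sketch the value of `delta` and the right-hand side `y` are illustrative assumptions; `y` is chosen so that the exact solution is (1, 1).

```matlab
delta = 1e-9;                 % |delta| < 1e-8
X = [1 1; delta 0; 0 delta];
y = [2; delta; delta];        % illustrative right-hand side, exact solution [1; 1]

% Normal equations matrix: 1 + delta^2 rounds to 1 in double precision.
A = X'*X;
isSingular = (A(1,1) == 1) && (A(2,2) == 1);   % A is then [1 1; 1 1]

% The columns of X are still numerically independent.
r = rank(X);

% Backslash works on X directly and avoids forming X'*X.
beta = X\y;
```

The computed `A` is the singular matrix of all ones, while `rank(X)` is still 2 and `beta` is close to (1, 1). The accuracy of `beta` is limited by the condition number of $X$, which is roughly $\sqrt{2}/\delta$, rather than by its square.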

Most of the computation is done by an orthogonalization algorithm known as the QR factorization. The factorization is computed by the built-in function qr. The NCM function qrsteps demonstrates the individual steps.

The two versions of the QR factorization are illustrated in Figure 5.3. Both versions have

$$X = QR.$$

In the full version, $R$ is the same size as $X$ and $Q$ is a square matrix with as many rows as $X$. In the economy-sized version, $Q$ is the same size as $X$ and $R$ is a square matrix with as many columns as $X$. The letter "Q" is a substitute for the letter "O" in "orthogonal" and the letter "R" is for "right" triangular matrix. The Gram-Schmidt process described in many linear algebra texts is a related, but numerically less satisfactory, algorithm that generates the same factorization.

Figure 5.3. Full and economy QR factorizations. (Diagram of X = QR in each of the two versions.)
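The shapes of the two factorizations are easy to inspect with the built-in qr. The matrix below is an arbitrary illustrative choice; the second argument 0 requests the economy-sized version.

```matlab
X = [1 2; 3 4; 5 6; 7 8];     % illustrative 4-by-2 matrix

[Q,R]   = qr(X);      % full: Q is 4-by-4, R is 4-by-2
[Q0,R0] = qr(X,0);    % economy: Q0 is 4-by-2, R0 is 2-by-2

% Both versions reproduce X up to roundoff.
errFull = norm(Q*R - X);
errEcon = norm(Q0*R0 - X);
```

The signs of individual rows of `R` may differ between implementations, but the products `Q*R` and `Q0*R0` agree with `X` in either case.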

A sequence of Householder reflections is applied to the columns of $X$ to produce the matrix $R$:

$$H_n \cdots H_2 H_1 X = R.$$

The $j$th column of $R$ is a linear combination of the first $j$ columns of $X$. Consequently, the elements of $R$ below the main diagonal are zero.

If the same sequence of reflections is applied to the right-hand side, the equations

$$X\beta \approx y$$

become

$$R\beta \approx z,$$

where

$$H_n \cdots H_2 H_1 y = z.$$

The first $n$ of these equations is a small, square, triangular system that can be solved for $\beta$ by back substitution with the subfunction backsubs in bslashtx. The coefficients in the remaining $m - n$ equations are all zero, so these equations are independent of $\beta$ and the corresponding components of $z$ constitute the transformed residual. This approach is preferable to the normal equations because Householder reflections have impeccable numerical credentials and because the resulting triangular system is ready for back substitution.

The matrix $Q$ in the QR factorization is

$$Q = (H_n \cdots H_2 H_1)^T.$$

To solve least squares problems, we do not have to actually compute $Q$. In other uses of the factorization, it may be convenient to have $Q$ explicitly. If we compute just the first $n$ columns, we have the economy-sized factorization. If we compute all $m$ columns, we have the full factorization. In either case,

$$Q^T Q = I,$$

so $Q$ has columns that are perpendicular to each other and have unit length. Such a matrix is said to have orthonormal columns. For the full $Q$, it is also true that

$$Q Q^T = I,$$

so the full $Q$ is an orthogonal matrix.

Let's illustrate this with a small version of the census example. We will fit the six observations from 1950 to 2000 with a quadratic:

$$y(s) \approx \beta_1 s^2 + \beta_2 s + \beta_3.$$

The scaled time s = ((1950:10:2000)' - 1950)/50 and the observations y are

         s          y
    0.0000   150.6970
    0.2000   179.3230
    0.4000   203.2120
    0.6000   226.5050
    0.8000   249.6330
    1.0000   281.4220

The design matrix is X = [s.*s s ones(size(s))].

         0        0   1.0000
    0.0400   0.2000   1.0000
    0.1600   0.4000   1.0000
    0.3600   0.6000   1.0000
    0.6400   0.8000   1.0000
    1.0000   1.0000   1.0000

The M-file qrsteps shows the steps in the QR factorization.

    qrsteps(X,y)

The first step introduces zeros below the diagonal in the first column of X.

    -1.2516  -1.4382  -1.7578
          0   0.1540   0.9119
          0   0.2161   0.6474
          0   0.1863   0.2067
          0   0.0646  -0.4102
          0  -0.1491  -1.2035

The same Householder reflection is applied to y.

    -449.3721
     160.1447
     126.4988
      53.9004
     -57.2197
    -198.0353

Zeros are introduced in the second column.

    -1.2516  -1.4382  -1.7578
          0  -0.3627  -1.3010
          0        0  -0.2781
          0        0  -0.5911
          0        0  -0.6867
          0        0  -0.5649

The second Householder reflection is also applied to y.

    -449.3721
    -242.3136
     -41.8356
     -91.2045
    -107.4973
     -81.8878

Finally, zeros are introduced in the third column and the reflection applied to y. This produces the triangular matrix R and a modified right-hand side z.

    R =
       -1.2516  -1.4382  -1.7578
             0  -0.3627  -1.3010
             0        0   1.1034
             0        0        0
             0        0        0
             0        0        0

    z =
     -449.3721
     -242.3136
      168.2334
       -1.3202
       -3.0801
        4.0048

The system of equations $R\beta = z$ is the same size as the original, 6 by 3. We can solve the first three equations exactly (because R(1:3,1:3) is nonsingular).

    beta = R(1:3,1:3)\z(1:3)

    beta =
        5.7013
      121.1341
      152.4745

This is the same solution beta that the backslash operator computes with

    beta = R\z

or

    beta = X\y

The last three equations in $R\beta = z$ cannot be satisfied by any choice of $\beta$, so the last three components of z represent the residual. In fact, the two quantities

    norm(z(4:6))
    norm(X*beta - y)

are both equal to 5.2219. Notice that even though we used the QR factorization, we never actually computed Q.

The population in the year 2010 can be predicted by evaluating

$$\beta_1 s^2 + \beta_2 s + \beta_3$$

at $s = (2010 - 1950)/50 = 1.2$. This can be done with polyval.

    p2010 = polyval(beta,1.2)

    p2010 =
      306.0453

The actual 2010 census found the population to be 308.748 million.
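The whole worked example can be rerun without qrsteps by using the built-in qr. This is a sketch that assumes the same six observations. The built-in factorization may choose different signs in R and z than the qrsteps output above, but the solution and the residual norm are unaffected. Q is formed explicitly here only for brevity.

```matlab
s = ((1950:10:2000)' - 1950)/50;
y = [150.6970 179.3230 203.2120 226.5050 249.6330 281.4220]';
X = [s.*s s ones(size(s))];

% Full QR factorization; z = Q'*y is the transformed right-hand side.
[Q,R] = qr(X);
z = Q'*y;

% Solve the leading 3-by-3 triangular system.
beta = R(1:3,1:3)\z(1:3);

% The trailing components of z carry the residual norm.
res1 = norm(z(4:6));
res2 = norm(X*beta - y);

% Predict the 2010 population at s = 1.2.
p2010 = polyval(beta,1.2);
```

The results should match the values quoted in the text to the digits shown: `beta` is approximately (5.7013, 121.1341, 152.4745), both residual norms are about 5.2219, and `p2010` is about 306.0453.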

5.6 Pseudoinverse

The definition of the pseudoinverse makes use of the Frobenius norm of a matrix:

$$\|A\|_F = \left( \sum_i \sum_j a_{i,j}^2 \right)^{1/2}.$$

The Matlab expression norm(X,'fro') computes the Frobenius norm. $\|A\|_F$ is the same as the 2-norm of the long vector formed from all the elements of $A$.

    norm(A,'fro') == norm(A(:))

The Moore-Penrose pseudoinverse generalizes and extends the usual matrix inverse. The pseudoinverse is denoted by a dagger superscript,

$$Z = X^\dagger,$$

and computed by the Matlab function pinv.

    Z = pinv(X)

If $X$ is square and nonsingular, then the pseudoinverse and the inverse are the same:

$$X^\dagger = X^{-1}.$$

If $X$ is $m$ by $n$ with $m > n$ and $X$ has full rank, then its pseudoinverse is the matrix involved in the normal equations:

$$X^\dagger = (X^T X)^{-1} X^T.$$

The pseudoinverse has some, but not all, of the properties of the ordinary inverse. $X^\dagger$ is a left inverse because

$$X^\dagger X = (X^T X)^{-1} X^T X = I$$

is the $n$-by-$n$ identity. But $X^\dagger$ is not a right inverse because

$$X X^\dagger = X (X^T X)^{-1} X^T$$

only has rank $n$ and so cannot be the $m$-by-$m$ identity.

The pseudoinverse does get as close to a right inverse as possible in the sense that, out of all the matrices $Z$ that minimize

$$\|XZ - I\|_F,$$

$Z = X^\dagger$ also minimizes

$$\|Z\|_F.$$

It turns out that these minimization properties also define a unique pseudoinverse even if $X$ is rank deficient.
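The left-inverse and right-inverse claims can be checked on a small full-rank matrix. The matrix `X` below is an illustrative assumption.

```matlab
X = [1 2; 3 4; 5 6];          % illustrative 3-by-2 matrix of full rank
Z = pinv(X);

errNormal = norm(Z - (X'*X)\X');   % matches (X'X)^(-1) X' for full rank
errLeft   = norm(Z*X - eye(2));    % left inverse: Z*X is the 2-by-2 identity

% X*Z is 3-by-3 but only has rank 2, so it cannot be the identity.
P = X*Z;
rankP = rank(P);
gap = norm(P - eye(3));            % distance from the 3-by-3 identity
```

Both error quantities are at roundoff level, while `gap` is 1: the product `X*Z` is the orthogonal projector onto the column space of `X`, not the identity.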

Consider the 1-by-1 case. What is the inverse of a real (or complex) number $x$? If $x$ is not zero, then clearly $x^{-1} = 1/x$. But if $x$ is zero, $x^{-1}$ does not exist. The pseudoinverse takes care of that because, in the scalar case, the unique number that minimizes both

$$|xz - 1| \quad \text{and} \quad |z|$$

is

$$x^\dagger = \begin{cases} 1/x, & x \neq 0, \\ 0, & x = 0. \end{cases}$$
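The scalar case is quick to confirm with pinv. The input values are arbitrary.

```matlab
z1 = pinv(4);     % nonzero scalar: the reciprocal, 0.25
z0 = pinv(0);     % zero scalar: the pseudoinverse is 0
```

By contrast, `inv(0)` returns `Inf` with a warning that the matrix is singular.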

The actual computation of the pseudoinverse involves the singular value decomposition, which is described in a later chapter. You can edit pinv or type pinv to see the code.

5.7 Rank Deficiency
